If you live in an urban area, you’ve likely noticed how transportation is shifting toward micromobility. E-scooters, e-bikes, shared bicycles, and other lightweight vehicles now move through streets once dominated by cars. This shift is accelerating, creating both technical challenges and opportunities for AI/ML engineers and urban traffic regulators.

Computer vision systems designed for traditional vehicles and pedestrians are no longer sufficient. Micromobility introduces new object geometries, motion patterns, and safety risks. If you’re building perception models for smart cities, ADAS, fleet analytics, or infrastructure monitoring, your data annotation strategy must evolve alongside these changes.

In this article, we explore:

- What micromobility is

- What micromobility annotation involves

- Computer vision use cases

- Key annotation challenges

- Best practices

- Future trends

Let’s dive in.

What Is Micromobility?

Micromobility refers to lightweight, low-speed transportation modes used for short urban trips. These include electric scooters, e-bikes, shared bicycles, electric skateboards, and other compact personal vehicles. In dense cities, they often replace short car trips.

This is not a marginal trend. According to McKinsey, the global micromobility market is projected to exceed $340 billion by 2030.

Cities worldwide are redesigning infrastructure around these vehicles. Dedicated bike lanes, scooter-sharing systems, and mixed-traffic corridors are becoming standard. This shift fundamentally changes the visual landscape of urban environments and introduces new complexity for perception systems.

Most computer vision systems developed a decade ago were trained on datasets dominated by cars, buses, trucks, and pedestrians. Today, those systems must also detect small, fast-moving, highly variable micromobility vehicles operating in dense and unpredictable traffic scenarios.

As adoption grows, micromobility is no longer a niche category. It is a core component of urban mobility ecosystems. Perception pipelines must adapt accordingly.

What Is Micromobility Annotation?

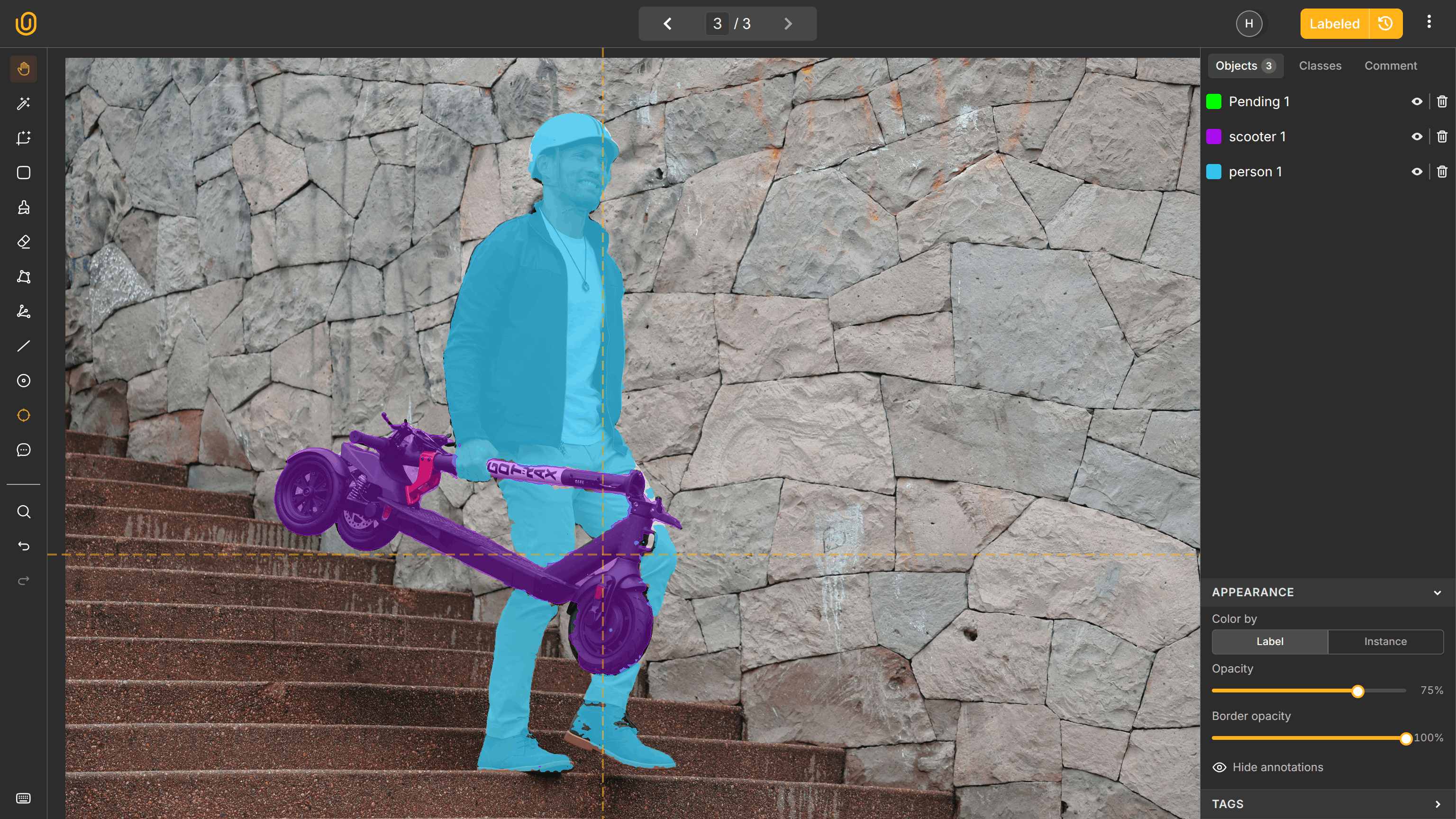

Micromobility annotation is the structured labeling of images, video frames, or multimodal sensor data to represent micromobility vehicles and their behaviors in urban environments. It goes far beyond drawing simple 2D bounding boxes around scooters.

Effective micromobility annotation captures:

- Vehicle class, such as e-scooter, e-bike, cargo bike, or skateboard

- Rider presence and pose

- Motion state, including moving, parked, or fallen

- Lane or path context

- Interaction with other traffic participants

These labels enable training object detection, tracking, segmentation, and behavior prediction models tailored to micromobility-heavy scenes.
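
To make the list above concrete, here is a minimal sketch of how a single label record could be structured. The schema, the field names, and the `validate` checks are our own assumptions for illustration; they do not come from any particular tool or dataset standard.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional, Tuple


class VehicleClass(Enum):
    E_SCOOTER = "e-scooter"
    E_BIKE = "e-bike"
    CARGO_BIKE = "cargo bike"
    SKATEBOARD = "skateboard"


class MotionState(Enum):
    MOVING = "moving"
    PARKED = "parked"
    FALLEN = "fallen"


@dataclass
class MicromobilityLabel:
    """One labeled object in one frame. Hypothetical schema for illustration."""
    track_id: int
    vehicle_class: VehicleClass
    box_xyxy: Tuple[float, float, float, float]  # pixels: x1, y1, x2, y2
    motion_state: MotionState
    rider_present: bool
    rider_pose: Optional[str] = None      # e.g. "standing" or "seated"
    lane_context: Optional[str] = None    # e.g. "bike_lane" or "sidewalk"
    interacts_with: List[int] = field(default_factory=list)  # other track ids

    def validate(self) -> List[str]:
        """Return a list of problems; an empty list means the label is consistent."""
        problems = []
        x1, y1, x2, y2 = self.box_xyxy
        if x2 <= x1 or y2 <= y1:
            problems.append("box has non-positive width or height")
        if not self.rider_present and self.rider_pose is not None:
            problems.append("rider_pose is set but rider_present is False")
        if self.track_id in self.interacts_with:
            problems.append("object is listed as interacting with itself")
        return problems


label = MicromobilityLabel(
    track_id=1,
    vehicle_class=VehicleClass.E_SCOOTER,
    box_xyxy=(10, 20, 40, 120),
    motion_state=MotionState.MOVING,
    rider_present=True,
    rider_pose="standing",
    lane_context="bike_lane",
    interacts_with=[2],
)
print(label.validate())  # prints [] because no problems were found
```

Keeping rider attributes separate from the vehicle class is a design choice: it lets the same record describe a parked scooter, a ridden scooter, and a fallen one without multiplying classes.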

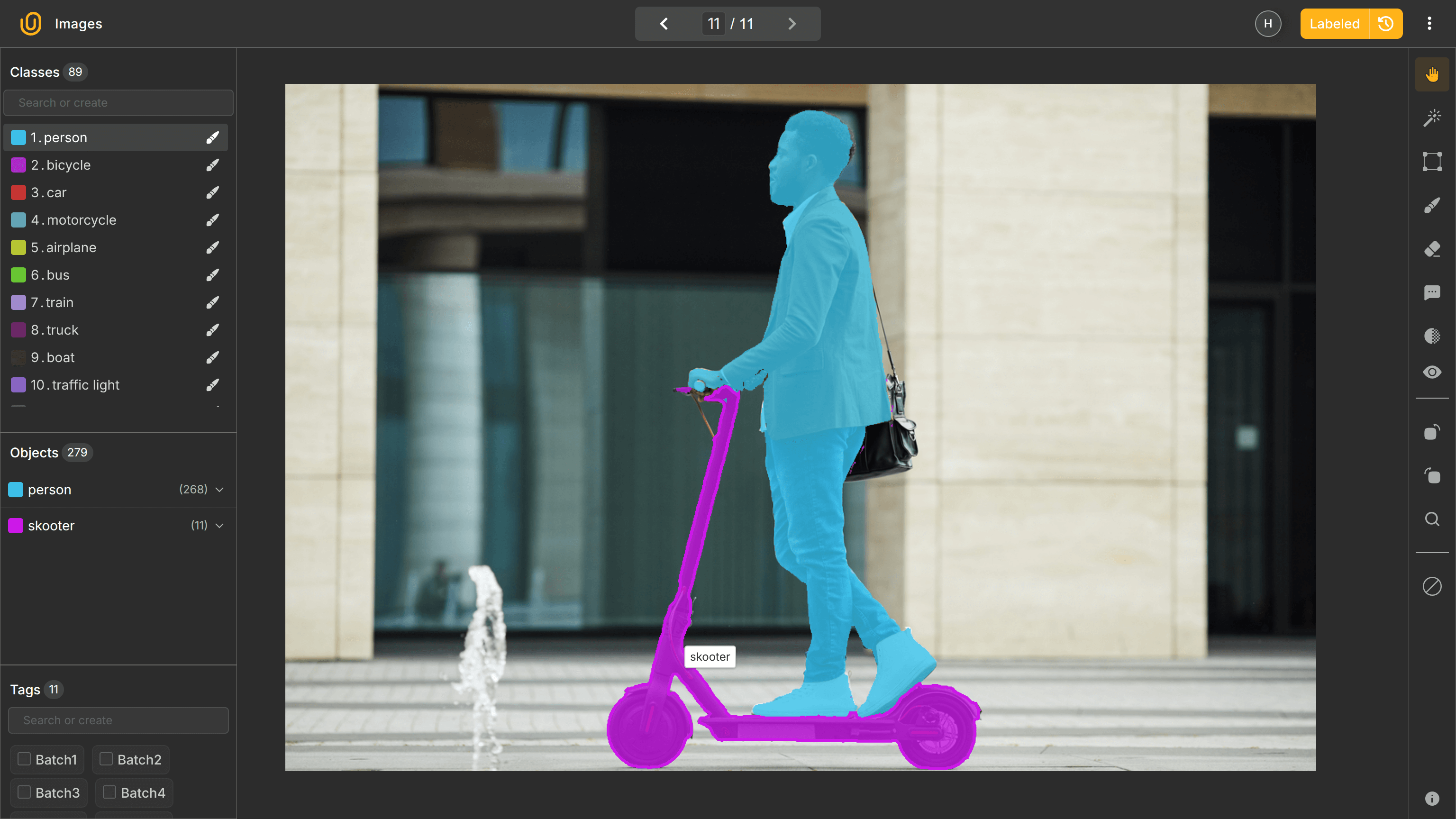

Unlike traditional vehicle annotation, micromobility labeling often requires higher granularity. Separating the rider from the vehicle improves behavior modeling. Segmenting wheels or handlebars supports pose estimation and fine-grained geometry understanding. Tracking across video frames is essential because these vehicles accelerate and maneuver quickly.

Thus, high-quality annotation becomes the foundation for reliable perception models.

Annotation Types for Micromobility Vision

Micromobility perception relies on multiple data and annotation types, depending on the modeling objective.

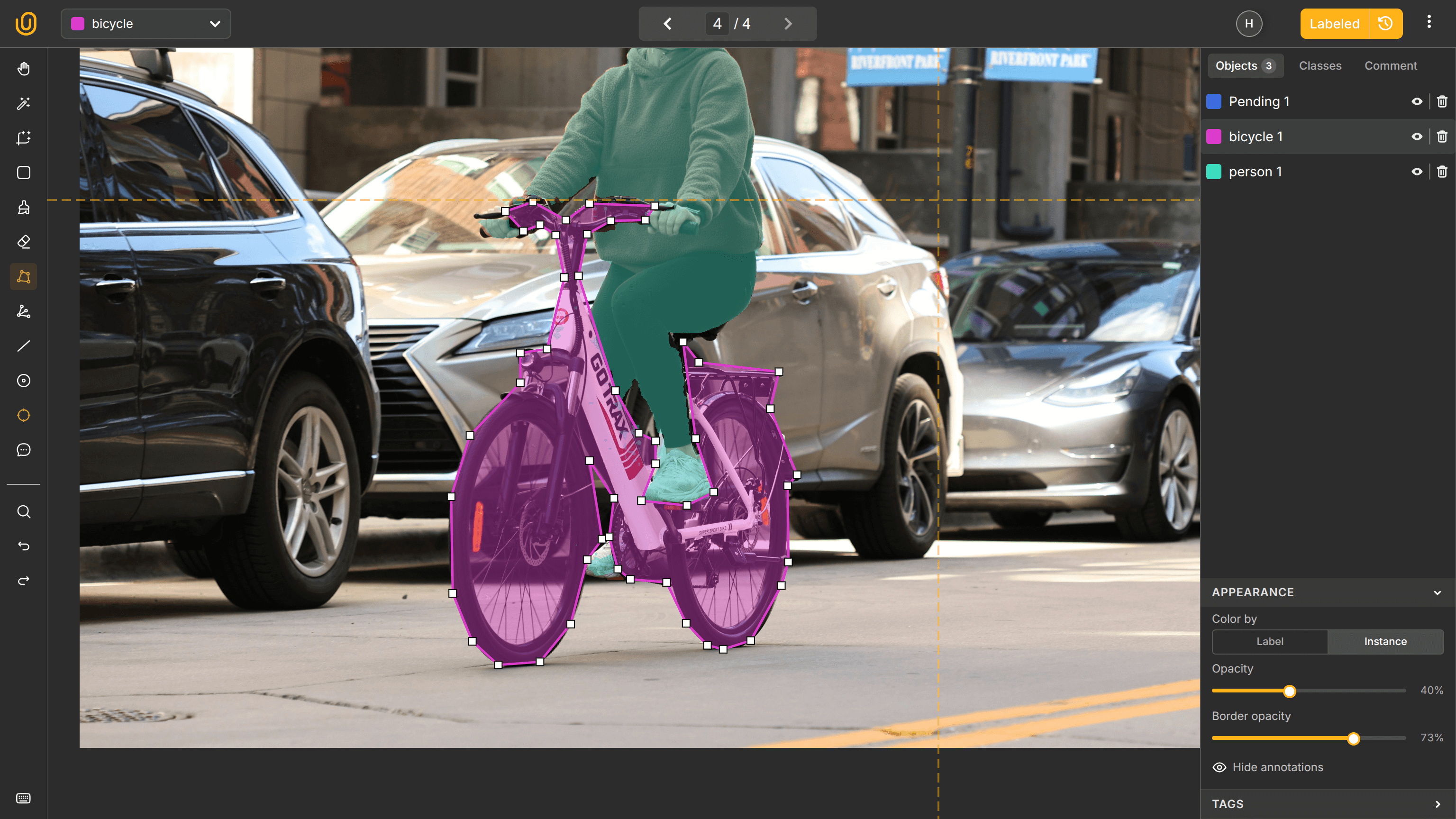

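Bounding boxes remain common for object detection. They provide coarse localization and class labels. However, for thin or irregular objects such as scooters, a box often encloses large background areas, which reduces spatial precision. Thin boxes are also unforgiving at evaluation time: a localization error of a few pixels lowers IoU far more than it would for a car-sized box.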
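
A small worked example shows how sensitive IoU is for thin objects. The box sizes below are made-up numbers chosen for illustration: both boxes are shifted by the same 5 pixels, but the narrow scooter box loses far more overlap than the car box.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def shift_x(box, dx):
    """Move a box horizontally by dx pixels."""
    x1, y1, x2, y2 = box
    return (x1 + dx, y1, x2 + dx, y2)


scooter = (100, 100, 120, 200)  # 20 px wide, 100 px tall (illustrative)
car = (100, 100, 300, 200)      # 200 px wide, 100 px tall (illustrative)

scooter_iou = iou(scooter, shift_x(scooter, 5))
car_iou = iou(car, shift_x(car, 5))

print(f"scooter IoU after 5 px shift: {scooter_iou:.2f}")  # 0.60
print(f"car IoU after 5 px shift:     {car_iou:.2f}")      # 0.95
```

With a typical IoU threshold of 0.5, the scooter prediction is already close to being counted as a miss, while the car prediction is nowhere near it.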

Instance segmentation addresses this limitation by outlining exact object contours. This improves performance in crowded scenes with heavy overlap and occlusion. Semantic segmentation supports infrastructure-level analysis. Labeling bike lanes, sidewalks, curbs, and road surfaces allows models to reason about safe paths and spatial constraints.
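
To see how much background a tight box can contain, we can compare the area of a polygon mask with the area of its bounding box. The L-shaped silhouette below is a deliberately crude, hypothetical stand-in for a scooter (a vertical stem plus a horizontal deck), not real annotation data.

```python
def polygon_area(points):
    """Area of a simple (non-self-intersecting) polygon via the shoelace formula."""
    total = 0.0
    n = len(points)
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


def bounding_box(points):
    """Tightest axis-aligned box around the polygon, as (x1, y1, x2, y2)."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return (min(xs), min(ys), max(xs), max(ys))


def fill_ratio(points):
    """Fraction of the bounding box that the polygon actually covers."""
    x1, y1, x2, y2 = bounding_box(points)
    box_area = (x2 - x1) * (y2 - y1)
    if box_area == 0:
        raise ValueError("degenerate polygon: bounding box has zero area")
    return polygon_area(points) / box_area


# Crude scooter silhouette: 10 px wide stem, 80 px long deck (illustrative).
scooter_polygon = [(0, 0), (10, 0), (10, 90), (80, 90), (80, 100), (0, 100)]

ratio = fill_ratio(scooter_polygon)
print(f"object covers {ratio:.0%} of its bounding box")  # 21%
```

In this toy case, roughly four fifths of the box is background, which is the kind of gap that instance masks close.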

Keypoint annotation enables rider pose estimation. Head orientation, hand placement, and body posture can inform risk prediction and intention modeling. 3D bounding boxes and LiDAR annotations are increasingly relevant in autonomous driving systems. Reliable 3D localization is critical for collision avoidance. Video annotation adds temporal context, enabling trajectory prediction and behavior understanding.
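
As a hedged illustration of how keypoints can feed posture features, the sketch below derives a torso lean angle from two labeled points. The choice of hip and shoulder keypoints and the example coordinates are assumptions; real keypoint schemas vary by project.

```python
import math


def torso_lean_degrees(hip, shoulder):
    """Angle between the hip-to-shoulder line and the image vertical, in degrees.

    Points are (x, y) pixels with y growing downward. 0 means upright,
    90 means the torso is horizontal, and values above 90 mean the
    shoulder is below the hip (for example, a fallen rider).
    """
    dx = shoulder[0] - hip[0]
    up = hip[1] - shoulder[1]  # positive when the shoulder is above the hip
    return math.degrees(math.atan2(abs(dx), up))


# Illustrative coordinates, not real data.
scooter_rider = torso_lean_degrees(hip=(100, 200), shoulder=(100, 120))
cyclist = torso_lean_degrees(hip=(100, 200), shoulder=(160, 140))

print(f"scooter rider lean: {scooter_rider:.0f} degrees")  # 0
print(f"cyclist lean:       {cyclist:.0f} degrees")        # 45
```

A feature like this is only as reliable as the keypoints behind it, which is why consistent keypoint placement matters in the annotation guidelines.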

Each annotation type serves a specific modeling goal. Mature datasets often integrate multiple label formats to support multimodal training.

Computer Vision Use Cases for Micromobility

Micromobility annotation supports several production systems.

Urban traffic monitoring systems analyze micromobility flows to guide infrastructure planning. Counting e-scooters and tracking usage patterns require accurate detection and tracking across time.
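
The reason counting needs tracking, and not just detection, is easy to show. In the sketch below, the input format (frame index, track ID, class name) is a hypothetical one chosen for illustration: summing per-frame detections overcounts, while counting unique track IDs does not.

```python
from collections import defaultdict


def count_unique_vehicles(detections):
    """Count distinct tracked objects per class.

    detections: iterable of (frame_index, track_id, class_name) tuples.
    """
    ids_per_class = defaultdict(set)
    for _frame, track_id, class_name in detections:
        ids_per_class[class_name].add(track_id)
    return {cls: len(ids) for cls, ids in ids_per_class.items()}


# Illustrative tracker output for three consecutive frames.
detections = [
    (0, 7, "e-scooter"),
    (1, 7, "e-scooter"), (1, 9, "e-bike"),
    (2, 7, "e-scooter"), (2, 9, "e-bike"), (2, 11, "e-scooter"),
]

print(len(detections))                    # 6 raw detections
print(count_unique_vehicles(detections))  # {'e-scooter': 2, 'e-bike': 1}
```

If annotated track IDs switch between frames, the same vehicle is counted twice, so ID consistency in the labels directly affects the counts a city sees.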

ADAS and autonomous driving systems must detect and predict the trajectories of scooters and bicycles. These vehicles change direction rapidly and occupy narrow gaps between cars. High recall and precise localization directly reduce collision risk. Research on detecting micromobility vehicles in urban traffic videos (Sabri et al., 2024) highlights the importance of adapting detection pipelines for these objects.
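
To illustrate why rapid direction changes are hard, consider the simplest possible predictor: constant velocity. This is a baseline sketch with made-up coordinates, not how any production ADAS stack predicts trajectories.

```python
import math


def predict_constant_velocity(track, steps_ahead=1):
    """Extrapolate the next position from the last two observed centers.

    track: list of (x, y) object centers at consecutive frames.
    """
    if len(track) < 2:
        raise ValueError("need at least two positions to estimate velocity")
    (x0, y0), (x1, y1) = track[-2], track[-1]
    vx, vy = x1 - x0, y1 - y0
    return (x1 + vx * steps_ahead, y1 + vy * steps_ahead)


# A scooter moving straight along x, then swerving (illustrative values).
observed = [(0, 0), (5, 0), (10, 0)]
actual_after_swerve = (22, 9)

predicted = predict_constant_velocity(observed, steps_ahead=3)
error = math.hypot(predicted[0] - actual_after_swerve[0],
                   predicted[1] - actual_after_swerve[1])

print(predicted)                         # (25, 0)
print(f"error after swerve: {error:.1f} px")  # about 9.5 px
```

Learned predictors reduce this error by using cues such as rider pose and lane context, which is exactly the information that richer annotations provide.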

Fleet management platforms use computer vision to detect improperly parked scooters or identify safety violations, such as riding without a helmet in countries where helmets are mandatory. These capabilities support compliance workflows and operational efficiency.

Urban planners rely on aggregated vision data to evaluate whether bike lanes reduce conflicts and improve safety outcomes.

In all of these cases, annotation quality determines system performance and reliability. Poor labeling leads to biased models, undercounted vehicles, and unsafe predictions, all of which increase risk for riders and passengers.

Challenges in Micromobility Annotation

Micromobility introduces technical and operational challenges that traditional vehicle datasets did not fully address.

Small Size

Small object size is a primary issue. A distant e-scooter may occupy only a few dozen pixels, even in a high-resolution frame. Standard anchor configurations and feature pyramid strategies often struggle to detect such objects consistently and to localize them precisely.
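
A quick way to quantify this problem in a dataset is to bucket labeled objects by pixel area. The sketch below uses the small/medium/large area thresholds from the COCO evaluation convention (32×32 and 96×96 pixels); how exact boundary values are assigned here is our own choice, and the box sizes are illustrative.

```python
from collections import Counter

SMALL_MAX_AREA = 32 ** 2   # COCO convention: "small" is below 32x32 px in area
MEDIUM_MAX_AREA = 96 ** 2  # COCO convention: "medium" is below 96x96 px in area


def size_bucket(width_px, height_px):
    """Classify a box as small, medium, or large by its pixel area."""
    area = width_px * height_px
    if area < SMALL_MAX_AREA:
        return "small"
    if area < MEDIUM_MAX_AREA:
        return "medium"
    return "large"


def size_histogram(boxes_wh):
    """Count how many boxes fall in each size bucket."""
    return Counter(size_bucket(w, h) for w, h in boxes_wh)


# (width, height) of labeled scooters in pixels; illustrative values.
boxes = [(6, 12), (20, 60), (40, 110), (8, 9), (120, 200)]

print(size_bucket(6, 12))     # small: a distant scooter of 72 px
print(size_histogram(boxes))
```

If most micromobility labels land in the small bucket, that is a signal to check input resolution, tiling, and anchor settings before blaming the model.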

Occlusion

Occlusion is frequent. A scooter may be partially hidden behind a parked car, vegetation, or another rider. Annotation teams must define clear policies for labeling partially visible objects and handling truncation. A common convention is to label occluded objects as if they were in full view, that is, to annotate their estimated full extent. Whatever policy is chosen, consistency across annotators is critical.
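
One way to make an occlusion policy checkable is to store both the estimated full-extent box and the visible box, then derive an occlusion attribute from them. The two-box scheme and the 0.9 and 0.5 thresholds below are hypothetical choices for illustration, not an established standard.

```python
def box_area(box):
    """Area of an (x1, y1, x2, y2) box; zero for inverted or empty boxes."""
    x1, y1, x2, y2 = box
    return max(0.0, x2 - x1) * max(0.0, y2 - y1)


def visible_fraction(full_box, visible_box):
    """Share of the full-extent box that is actually visible, in [0, 1]."""
    # Clip the visible box to the full box so the ratio cannot exceed 1.
    clipped = (
        max(full_box[0], visible_box[0]),
        max(full_box[1], visible_box[1]),
        min(full_box[2], visible_box[2]),
        min(full_box[3], visible_box[3]),
    )
    full_area = box_area(full_box)
    if full_area == 0:
        raise ValueError("full-extent box has zero area")
    return box_area(clipped) / full_area


def occlusion_level(fraction):
    """Map a visible fraction to a coarse attribute (hypothetical thresholds)."""
    if fraction >= 0.9:
        return "none"
    if fraction >= 0.5:
        return "partial"
    return "heavy"


# Scooter whose lower part is hidden behind a parked car (illustrative).
full = (100, 100, 120, 200)
visible = (100, 100, 120, 160)

fraction = visible_fraction(full, visible)
print(f"{fraction:.0%} visible -> {occlusion_level(fraction)}")  # 60% visible -> partial
```

Deriving the attribute from geometry, instead of asking annotators to pick it by eye, removes one source of disagreement between annotators.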

Shape Variety

Shape variability adds complexity. A rider standing upright on a scooter produces a thin vertical silhouette. A cyclist leans forward. Cargo bikes extend horizontally. Backpacks, delivery boxes, and passenger riders alter geometry further. Models trained primarily on rectangular car profiles often underperform on these irregular shapes.

Class Ambiguity

Class ambiguity also arises. A pedestrian pushing a scooter is not the same as a rider on a moving one. Without behavioral attributes, models may collapse these scenarios into a single category, reducing interpretability. And without interpretability, detection and classification alone are of limited value in micromobility applications.

Environmental Variability

Environmental variability compounds annotation difficulty. Rain, low light, glare, and shadows degrade visual clarity. Clear guidelines are necessary to maintain consistency across conditions.

Motion Blur

Motion blur complicates video annotation. Fast-moving riders produce blurred frames across weather conditions, making precise box placement and segmentation harder.
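
Many video annotation workflows handle hard intermediate frames by labeling clear keyframes and interpolating the boxes in between. Below is a minimal sketch of linear keyframe interpolation. It assumes roughly constant motion between keyframes, which often does not hold for scooters, so interpolated boxes should still be reviewed by a human.

```python
def interpolate_box(box_a, frame_a, box_b, frame_b, frame):
    """Linearly interpolate an (x1, y1, x2, y2) box between two keyframes."""
    if frame_a >= frame_b:
        raise ValueError("frame_a must come before frame_b")
    if not frame_a <= frame <= frame_b:
        raise ValueError("frame must lie between the two keyframes")
    t = (frame - frame_a) / (frame_b - frame_a)
    return tuple(a + t * (b - a) for a, b in zip(box_a, box_b))


# Keyframes at frames 0 and 10; frame 5 is too blurred to label by hand.
key_start = (0, 0, 10, 10)
key_end = (10, 20, 20, 30)

print(interpolate_box(key_start, 0, key_end, 10, 5))  # (5.0, 10.0, 15.0, 20.0)
```

The faster and less predictable the rider, the denser the keyframes need to be for interpolation to stay accurate.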

Edge Cases

Edge cases are effectively endless and therefore unavoidable: two riders on one scooter, a scooter lying on the ground, a rider carrying oversized cargo. Explicit labeling of such scenarios prevents confusion during training.

Without structured QA processes and precise annotation guidelines, label drift becomes likely, degrading both dataset quality and the final computer vision model.

Best Practices for Micromobility Annotation

Micromobility annotation is still evolving, but several practices have emerged as effective:

- Define a Detailed Taxonomy: A detailed taxonomy is essential. Avoid overly generic classes. Distinguish between bicycle, e-bike, e-scooter, rider, and pedestrian-with-scooter. Define how to handle ambiguous behaviors.

- Use Temporal Consistency: Use video-based annotation when possible. Labeling objects as tracks across frames, rather than frame by frame, reduces frame-to-frame label noise and strengthens tracking supervision.

- Combine Bounding Boxes and Segmentation: Combining bounding boxes with segmentation increases spatial precision. Segmentation is particularly valuable for thin structures and heavily occluded scenes.

- Balance the Dataset: Dataset balance matters. Include diverse urban layouts, rider demographics, lighting conditions, and weather scenarios to improve generalization.

- Leverage Semi-Automatic Tools: AI-assisted pre-annotation accelerates workflows. Model-generated suggestions reduce manual effort, while human validation preserves accuracy.

- Implement Quality Control: Robust quality control is non-negotiable. Multi-stage review processes, peer validation, and targeted checks for rare edge cases reduce systematic bias and inconsistency. A typical QA process might look like this: detect inconsistent labeling → catch misclassified behaviors → validate rare edge cases → fix mistakes.
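
The "detect inconsistent labeling" step of such a QA process can be partly automated by comparing two annotators' labels for the same frame. The sketch below is one simple way to do it, assuming labels are `(class_name, box)` pairs and using greedy IoU matching with a 0.5 threshold; both the format and the threshold are our assumptions.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def compare_annotators(labels_a, labels_b, iou_threshold=0.5):
    """Greedily match boxes between two annotators and list disagreements."""
    issues = []
    unmatched_b = set(range(len(labels_b)))
    for i, (cls_a, box_a) in enumerate(labels_a):
        # Find the best-overlapping box from annotator B that is still free.
        best_j, best_iou = None, 0.0
        for j in unmatched_b:
            score = iou(box_a, labels_b[j][1])
            if score > best_iou:
                best_j, best_iou = j, score
        if best_j is None or best_iou < iou_threshold:
            issues.append(f"A[{i}] {cls_a}: no matching box from annotator B")
            continue
        unmatched_b.discard(best_j)
        cls_b = labels_b[best_j][0]
        if cls_a != cls_b:
            issues.append(f"A[{i}] {cls_a} vs B[{best_j}] {cls_b}: class disagreement")
    for j in sorted(unmatched_b):
        issues.append(f"B[{j}] {labels_b[j][0]}: no matching box from annotator A")
    return issues


# Two annotators labeling the same frame (illustrative values).
annotator_a = [
    ("e-scooter", (100, 100, 120, 200)),
    ("rider", (95, 60, 125, 180)),
    ("e-bike", (300, 100, 380, 200)),
]
annotator_b = [
    ("e-scooter", (101, 100, 121, 200)),
    ("pedestrian-with-scooter", (95, 60, 125, 180)),
]

for issue in compare_annotators(annotator_a, annotator_b):
    print(issue)
```

Here the two annotators agree on the scooter, disagree on whether the person is riding or pushing it, and one of them missed the e-bike. Frames with such issues are good candidates for the review stage.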

A structured annotation platform provides many of these processes out of the box. One such platform is Unitlab AI, a fully-automated data platform.

Unitlab AI for Micromobility Annotation

Unitlab AI provides an integrated environment for building high-quality micromobility datasets. It supports bounding boxes, polygon segmentation, keypoints, and video annotation within a single collaborative interface.

With Unitlab AI, you can define custom ontologies for e-scooters, e-bikes, cargo bikes, rider states, and textual elements such as license plates or fleet identifiers through OCR labeling. Hierarchical classes and attributes can be configured without additional engineering work.

AI-assisted tools such as Magic Crop and SAM-based Magic Touch accelerate large-scale labeling. Models generate initial predictions, and annotators refine them. Reviewers validate outputs within the same system. This human-in-the-loop approach reduces cost and project time while maintaining consistency across thousands of frames.
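
In generic terms, a human-in-the-loop flow like this can be thought of as routing model predictions by confidence. The sketch below is a tool-agnostic illustration and is not Unitlab AI's API: the prediction format, the function name, and the 0.8 threshold are all assumptions.

```python
def route_predictions(predictions, review_threshold=0.8):
    """Split model pre-annotations into two queues by confidence score.

    predictions: list of dicts with at least a "score" key in [0, 1].
    Returns (to_refine, to_review): low-confidence items go to annotators
    for correction, high-confidence items go straight to reviewers.
    """
    to_refine, to_review = [], []
    for pred in predictions:
        if pred["score"] >= review_threshold:
            to_review.append(pred)
        else:
            to_refine.append(pred)
    return to_refine, to_review


# Illustrative model output for one frame.
predictions = [
    {"label": "e-scooter", "box": (100, 100, 120, 200), "score": 0.93},
    {"label": "e-bike", "box": (300, 100, 380, 200), "score": 0.55},
    {"label": "e-scooter", "box": (410, 150, 420, 180), "score": 0.31},
]

to_refine, to_review = route_predictions(predictions)
print(len(to_refine), "to refine;", len(to_review), "to review")  # 2 to refine; 1 to review
```

Note that even the high-confidence queue is still reviewed by a person. The threshold only decides how much manual editing is expected, not whether a human looks at the label.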

Dataset management features include cloning, versioning, and structured export options compatible with common object detection, segmentation, tracking, and OCR pipelines. This enables direct integration into training frameworks and reduces operational overhead.

For teams building perception systems for autonomous vehicles, traffic monitoring, or mobility platforms, Unitlab AI provides reliability and scalability.

Future Trends in Micromobility Computer Vision

Although predicting technology trends is notoriously hard, we can look at the micromobility landscape and make a few educated guesses about where micromobility annotation is heading in the coming years:

Micromobility perception will likely extend beyond 2D image analysis. Multi-sensor fusion combining LiDAR, radar, and cameras will improve detection of small and partially occluded objects.

Self-supervised learning (SSL) may reduce reliance on fully labeled datasets. However, high-quality annotations will remain essential for evaluation, benchmarking, and fine-tuning.

Behavior prediction models will increasingly depend on fine-grained annotations capturing posture, intent, and interaction dynamics.

Regulatory pressure may also influence dataset standards. As cities adopt stricter safety rules, perception systems must meet defined performance thresholds for vulnerable road users, including micromobility participants.

Conclusion

Micromobility is reshaping urban transportation and forcing computer vision systems to adapt. Small object size, geometric variability, and dynamic behavior challenge traditional perception pipelines.

Accurate micromobility annotation provides the foundation for detection, tracking, behavior prediction, and compliance systems. It requires structured ontologies, consistent guidelines, scalable tooling, and rigorous quality control.

Unitlab AI streamlines this process by combining flexible labeling formats, AI-assisted workflows, OCR support, and collaborative validation. As micromobility adoption expands, robust annotation pipelines will become a core component of modern computer vision systems.

Explore More

- Bounding Box Essentials in Data Annotation with a Demo Project

- Guide to Pixel-perfect Image Labeling with a Demo Project [2026]

- What is LiDAR: Essentials & Applications

References

- Khalil Sabri et al. (May 28, 2024). Detection of Micromobility Vehicles in Urban Traffic Videos. Conference on Robots and Vision: Source

- McKinsey & Company (Apr 29, 2025). What is micromobility? McKinsey & Company: Source

- Inna Nomerovska (Oct 07, 2021). Making E-scooters Safer with Data Annotation. Keymakr: Source

- Tatiana Verbitskaya (Jan 30, 2026). Micromobility annotation. Keymakr: Source

- Wikipedia (no date). Micromobility. Wikipedia: Source