In this post, we’ll walk through how to automate the annotation process for vehicle instance segmentation using the YOLOv8-Seg model. With this model, we can efficiently segment seven types of vehicles: bicycles, cars, motorcycles, airplanes, buses, trains, and trucks.

Let’s dive into how to set up and deploy the YOLOv8-Seg model locally and get started with automated annotation.

Deployment of the YOLOv8 Segmentation Model

In our previous blog post, we explored how to efficiently deploy the YOLOv8-Seg model on a server using TensorRT and ONNX, and we also open-sourced the deployment codebase.

The YOLOv8-Seg model we’re using comes from the Ultralytics YOLOv8 repository, trained on the COCO segmentation dataset. This model can detect and segment 80 different classes, but for our task, we’ll filter predictions to focus on vehicles.
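The post does not show how predictions are filtered down to vehicles. Below is a minimal sketch that assumes the model returns COCO class indices in the Ultralytics order (0 = person, 1 = bicycle, ..., 7 = truck) and that each prediction is a dict with a hypothetical `class_id` key. The remapped order matches the seven vehicle classes listed above.

```python
# Assumption: Ultralytics COCO class indices (0 = person, 1 = bicycle, ..., 7 = truck).
VEHICLE_CLASSES = ["bicycle", "car", "motorcycle", "airplane", "bus", "train", "truck"]

# Map COCO IDs 1..7 onto vehicle indices 0..6, in the same order as VEHICLE_CLASSES.
COCO_TO_VEHICLE = {coco_id: idx for idx, coco_id in enumerate(range(1, 8))}


def filter_vehicle_predictions(predictions):
    """Keep only vehicle predictions and remap their class IDs to 0..6.

    `predictions` is a hypothetical list of dicts, each with a "class_id" key
    holding the COCO class index predicted by the model.
    """
    filtered = []
    for pred in predictions:
        coco_id = pred["class_id"]
        if coco_id in COCO_TO_VEHICLE:
            # Build a new dict so the caller's prediction is not mutated.
            filtered.append({**pred, "class_id": COCO_TO_VEHICLE[coco_id]})
    return filtered
```

For example, a person (COCO ID 0) is dropped, while a car (COCO ID 2) becomes vehicle index 1.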

To deploy this model, we’ll need to set up a local environment. The model can run on either a CPU or an NVIDIA GPU, and the deployment process is similar in both cases.

Repository Setup

Clone this repository to your machine:

```bash
git clone https://github.com/teamunitlab/yolo8-segmentation-deploy.git
cd yolo8-segmentation-deploy
```

Deploying the Model

If you’re deploying the model to a machine with an NVIDIA GPU, ensure that the GPU drivers and CUDA libraries are properly installed and configured. Otherwise, you can proceed with a CPU-only deployment.
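If you are unsure which path applies to your machine, a quick heuristic is to check whether the NVIDIA driver utility `nvidia-smi` is on your PATH. This is a sketch with a hypothetical helper name. A present `nvidia-smi` does not prove that CUDA and Docker's GPU runtime are configured correctly.

```python
import shutil


def pick_deployment_target():
    """Return "gpu" if nvidia-smi is on PATH, otherwise "cpu".

    Heuristic only: it does not verify CUDA or the Docker GPU runtime.
    """
    return "gpu" if shutil.which("nvidia-smi") else "cpu"
```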

To deploy the model on CPU using Docker, run:

```bash
docker-compose -f docker-compose-cpu.yml up -d --build
```

This command builds a Docker image containing all the requirements and starts the service in the background (`-d`). The first build may take several minutes.
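Because the container runs in the background, a script may need to wait before it sends requests. Here is a minimal standard-library sketch that polls until the port accepts TCP connections. It assumes the service listens on port 8080, as in the cURL example for this endpoint, and `wait_for_port` is a hypothetical name. An open port only shows that the server is listening, not that the model has finished loading.

```python
import socket
import time


def wait_for_port(host="localhost", port=8080, timeout=300.0, interval=2.0):
    """Poll host:port until a TCP connection succeeds or `timeout` seconds pass.

    Returns True once the port accepts connections, False on timeout.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            # A successful connect means something is listening on the port.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```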

Creating API Endpoints

We’ve also prepared API endpoints for segmenting vehicle instances using the YOLOv8-Seg model:

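```python
from flask import Flask

app = Flask(__name__)

@app.route("/api/yolo8/vehicle-segmentation", methods=["GET", "POST"])
def run_vehicle_segmentation():
    # Model inference & class extraction logic here (omitted);
    # Flask 1.1+ serializes a returned dict to JSON.
    results = {}
    return results
```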
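The endpoint body is elided above. As one illustration of the input-handling step, the hypothetical helper `extract_src` parses the JSON request body and checks that `src` is an http(s) URL before any inference runs. This is an assumption about sensible validation, not the repository's actual code.

```python
import json
from urllib.parse import urlparse


def extract_src(raw_body):
    """Parse a JSON request body and return the image URL stored under "src".

    Raises ValueError if the body is not valid JSON, has no "src" string,
    or "src" is not an http(s) URL.
    """
    try:
        payload = json.loads(raw_body)
    except json.JSONDecodeError as exc:
        raise ValueError(f"invalid JSON: {exc}") from exc
    src = payload.get("src") if isinstance(payload, dict) else None
    if not isinstance(src, str) or not src:
        raise ValueError('missing "src" field')
    parsed = urlparse(src)
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        raise ValueError(f"src must be an http(s) URL, got {src!r}")
    return src
```

Inside the Flask view, this could be called as `extract_src(request.get_data())`, with a 400 response returned whenever it raises `ValueError`.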

To test the endpoint, you can use cURL:

```bash
curl -X POST http://localhost:8080/api/yolo8/vehicle-segmentation \
  -H "Content-Type: application/json" \
  -d '{"src": "https://example.com/test-image.jpg"}'
```

The src field expects the URL of the image to segment.
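To call the endpoint from Python instead of cURL, here is a standard-library sketch of the same POST request. It assumes the service runs at `localhost:8080` as in the cURL command and returns a JSON body. `build_segmentation_request` and `segment_vehicles` are hypothetical helper names.

```python
import json
import urllib.request

ENDPOINT = "http://localhost:8080/api/yolo8/vehicle-segmentation"


def build_segmentation_request(image_url, endpoint=ENDPOINT):
    """Build (but do not send) a JSON POST request carrying the image URL in "src"."""
    body = json.dumps({"src": image_url}).encode("utf-8")
    return urllib.request.Request(
        endpoint,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def segment_vehicles(image_url, endpoint=ENDPOINT, timeout=60):
    """Send the request and decode the JSON response into a dict."""
    req = build_segmentation_request(image_url, endpoint)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Separating request construction from sending makes the payload easy to inspect without a running server.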

The output depends on the provided image. The predicted_classes field lists indices into the classes array. For our test image, the model predicted the car, motorcycle, airplane, bus, and truck classes:

```
"classes": ["bicycle", "car", "motorcycle", "airplane", "bus", "train", "truck"],
"predicted_classes": [1, 2, 3, 4, 6]
```

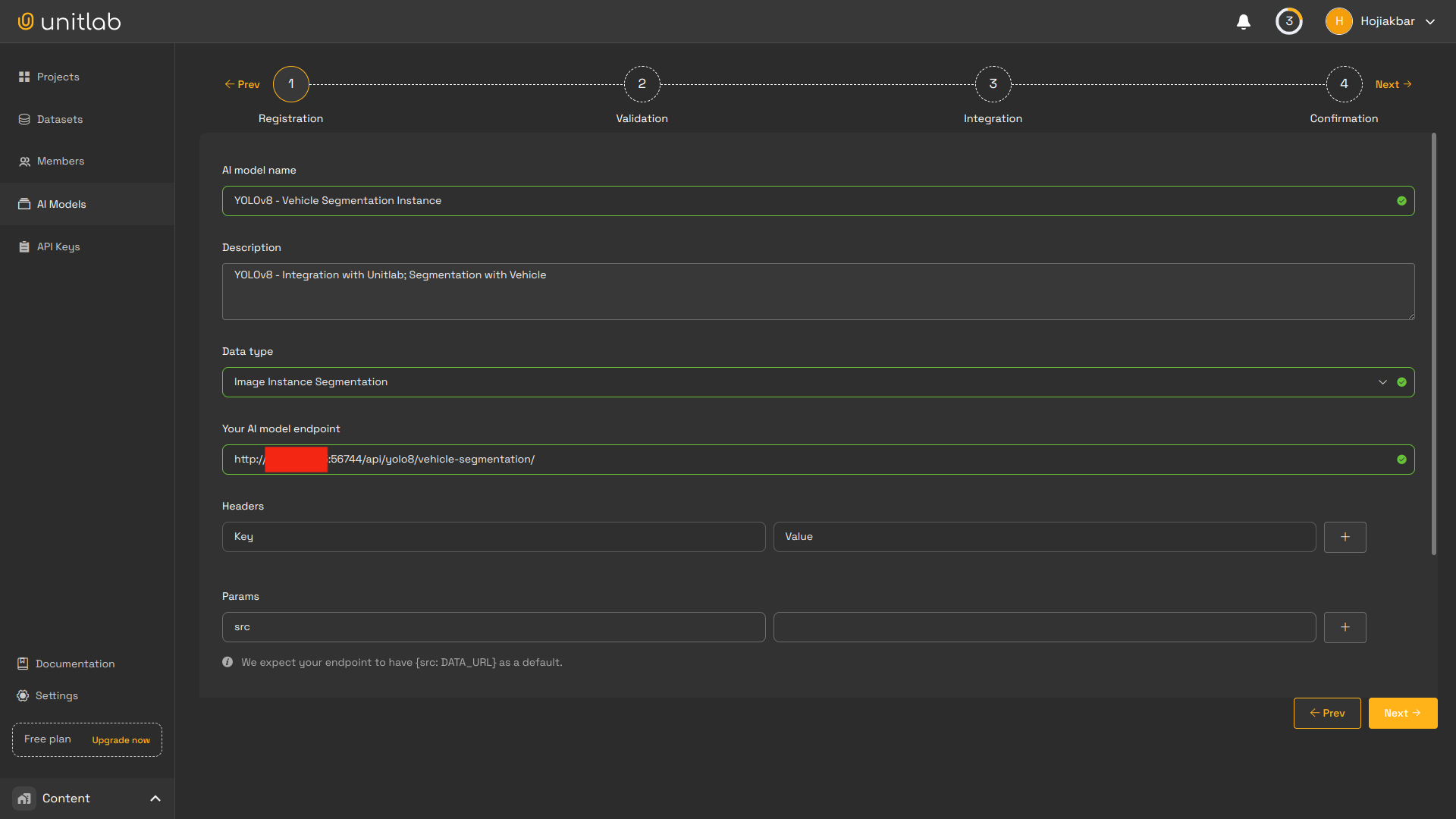
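Because predicted_classes holds indices into classes, converting a response into class names takes a single lookup. Here is a small sketch that uses the response fields shown above as input. `decode_predicted_classes` is a hypothetical name.

```python
def decode_predicted_classes(response):
    """Map each index in "predicted_classes" to its name in "classes"."""
    classes = response["classes"]
    return [classes[i] for i in response["predicted_classes"]]


response = {
    "classes": ["bicycle", "car", "motorcycle", "airplane", "bus", "train", "truck"],
    "predicted_classes": [1, 2, 3, 4, 6],
}
print(decode_predicted_classes(response))
# ['car', 'motorcycle', 'airplane', 'bus', 'truck']
```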

Model Integration into Unitlab

Integrating your AI models into Unitlab serves three key purposes:

-

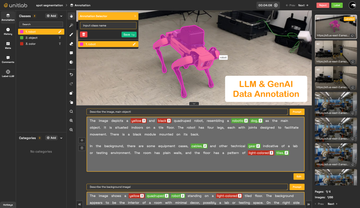

Visualization of Model Predictions: Effortlessly visualize the predictions from your pre-trained models, such as YOLOv8, within Unitlab’s interface.

-

Automated Data Annotation: Leverage your pre-trained models for data annotation in Unitlab Annotate using tools like batch auto-annotation and crop auto-annotation to streamline the annotation process.

-

Model Evaluation: Evaluate the performance of your models on specific datasets uploaded to Unitlab and compare their results side-by-side for deeper insights.

Unitlab provides documentation that guides you through integrating your own AI models. Now, let’s proceed with integrating the vehicle instance segmentation endpoint we deployed earlier.

Once you complete the integration by following that documentation, your AI model will be ready for visualization, automated data annotation, and evaluation within Unitlab.

1. Visualization of Model Predictions

After integrating your model into Unitlab, you can assess its performance by visualizing the results on your test data.

2. Automated Data Annotation

In Unitlab, your AI models can significantly streamline the annotation process using advanced tools, such as batch auto-annotation and crop auto-annotation.

These features simplify working with large datasets, helping you prepare data for machine learning projects more efficiently. By integrating your models into Unitlab, you can enhance productivity, reduce manual effort, and improve dataset accuracy.

3. Model Evaluation

When working with multiple versions of AI models, Unitlab simplifies the process of finding the best-performing one. There’s no need to write custom evaluation scripts: once your AI model is integrated and your benchmark datasets are uploaded, you can manage and review evaluation reports within the platform.

Steps to Evaluate Your Models in Unitlab:

- Integrate your AI models into Unitlab.

- Upload your benchmark dataset using Unitlab’s CLI or Python SDK.

- Clone the uploaded dataset, create a project, and select the integrated AI model for evaluation.

- Compare the results of different AI models or benchmark them against the ground truths in the dataset.
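Unitlab produces its evaluation reports on the platform, and this post does not specify which metrics it uses. To show what benchmarking against ground truths means at the level of a single instance, here is a pure-Python sketch of mask intersection over union (IoU), a standard building block of segmentation metrics such as COCO mask AP. It is an illustration, not Unitlab’s implementation, and `mask_iou` is a hypothetical name.

```python
def mask_iou(pred_mask, gt_mask):
    """Intersection over union of two same-shaped binary masks.

    Each mask is a list of rows containing 0/1 (or truthy/falsy) values.
    """
    if len(pred_mask) != len(gt_mask) or any(
        len(p) != len(g) for p, g in zip(pred_mask, gt_mask)
    ):
        raise ValueError("masks must have the same shape")
    intersection = union = 0
    for pred_row, gt_row in zip(pred_mask, gt_mask):
        for p, g in zip(pred_row, gt_row):
            p, g = bool(p), bool(g)
            intersection += p and g  # pixel in both masks
            union += p or g          # pixel in at least one mask
    # Two empty masks agree perfectly, so define IoU as 1.0 in that case.
    return intersection / union if union else 1.0


pred = [[1, 1, 0], [0, 1, 0]]
gt = [[1, 0, 0], [0, 1, 1]]
print(mask_iou(pred, gt))  # 2 shared pixels / 4 covered pixels
# 0.5
```

A matching threshold on this value (for example, counting a prediction as correct when IoU is at least 0.5) is what turns per-instance overlaps into precision and recall.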

Unitlab makes it easy to evaluate and compare your models, helping you identify the most accurate and reliable version for your project.

Conclusion

Integrating AI models with Unitlab improves efficiency and precision across workflows. By connecting automated data annotation, dataset management, and model evaluation in one place, developers can spend less time on manual tasks and more on refining and optimizing their models.

This streamlined approach reduces the risk of human error, improves output quality, and accelerates development cycles. As a result, advanced AI solutions can be deployed faster and more effectively, ensuring a smoother transition from concept to production.