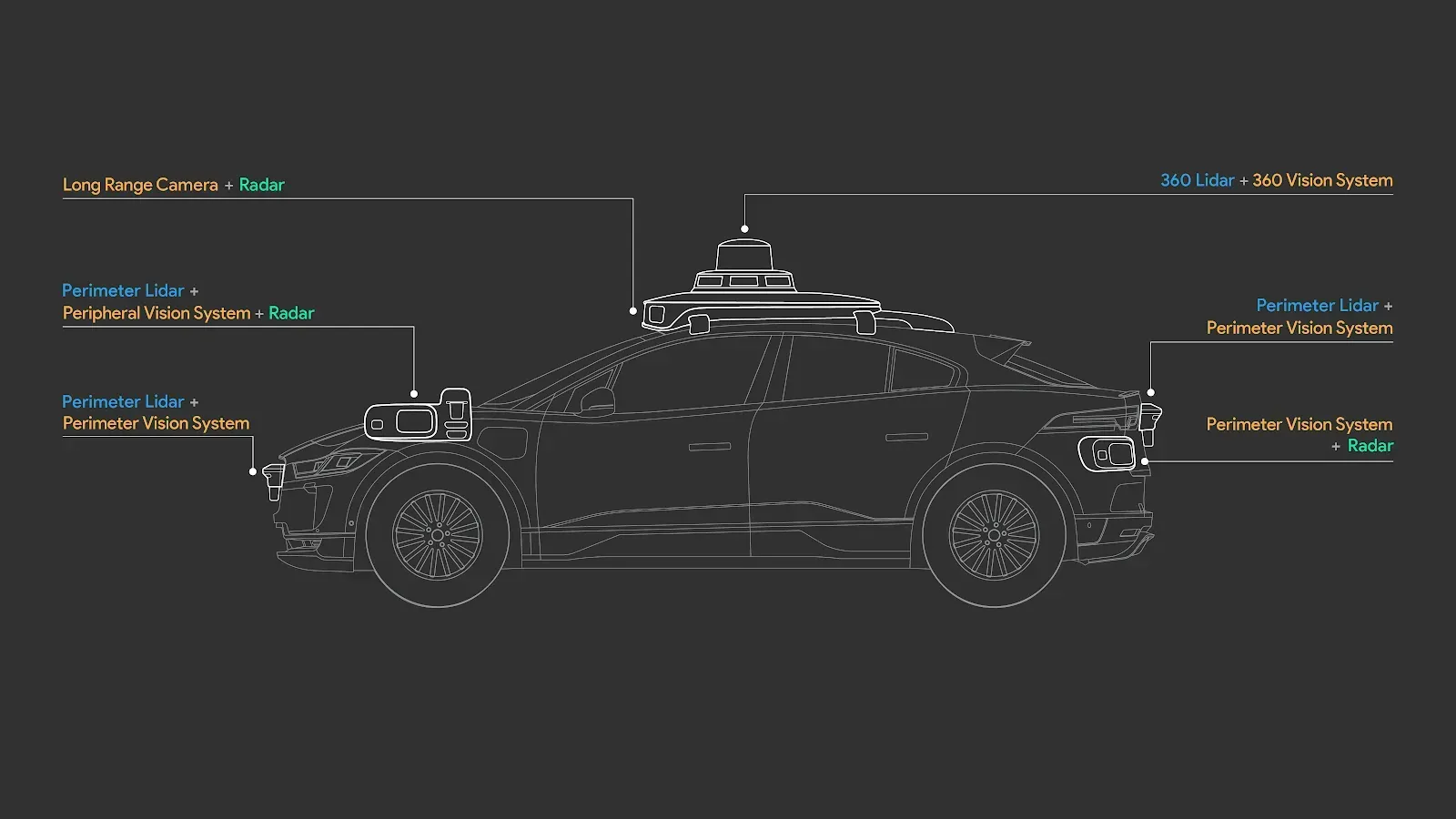

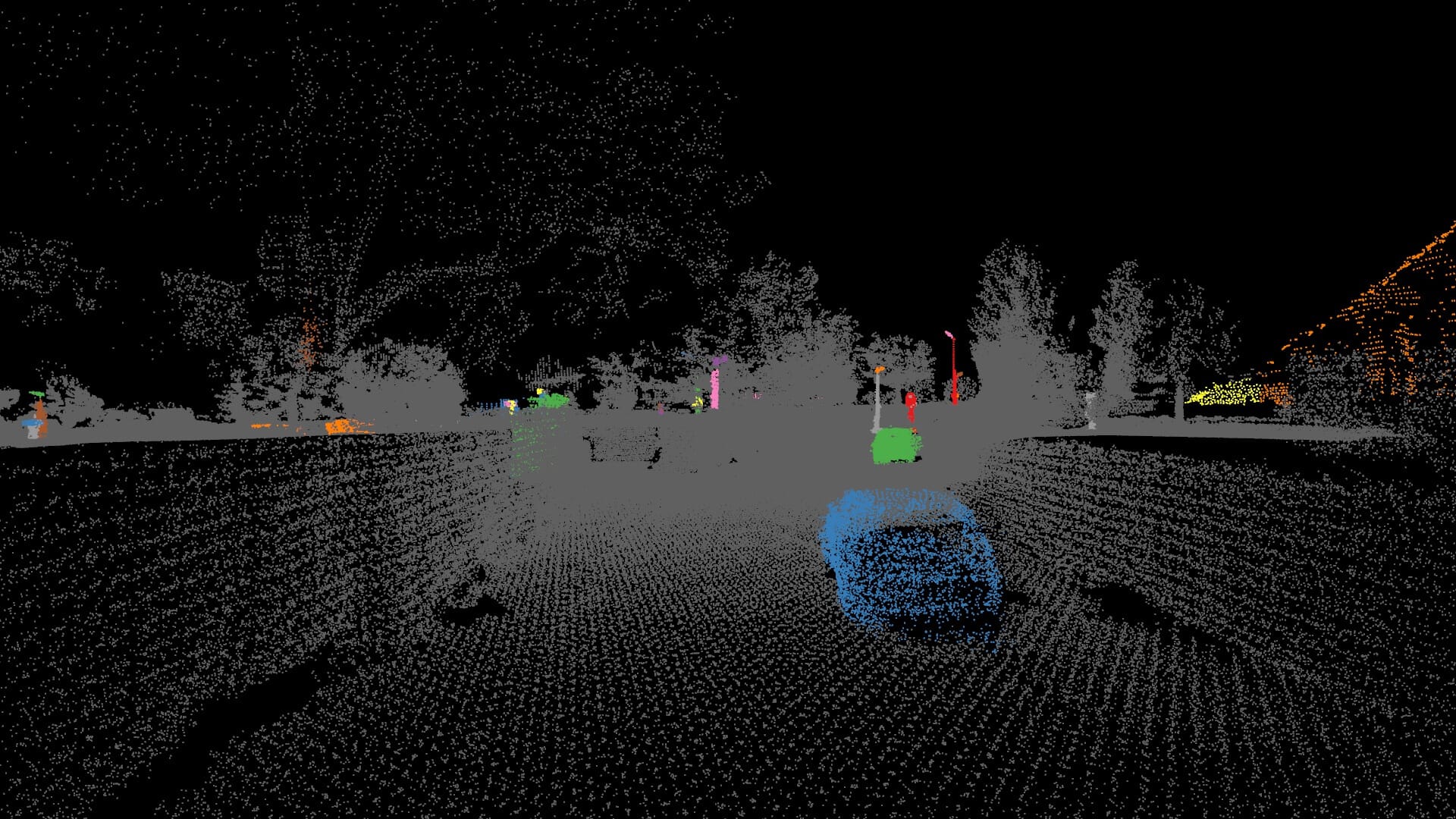

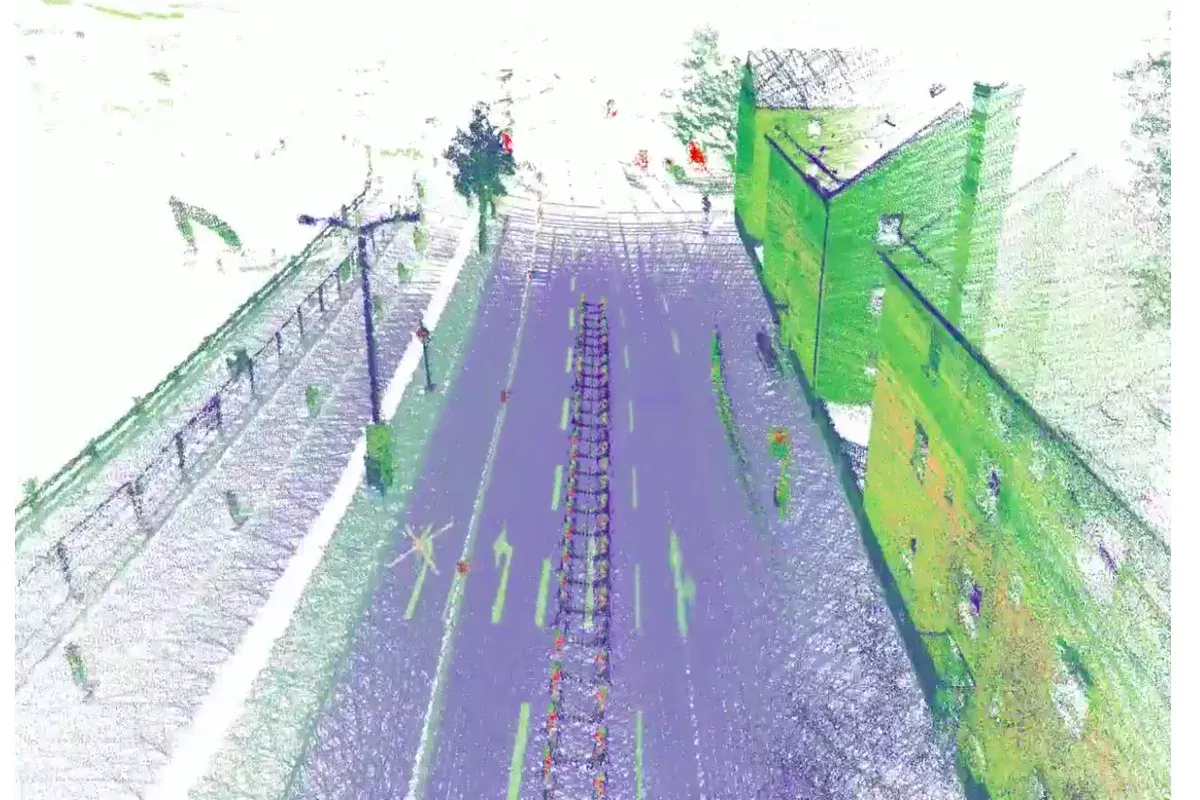

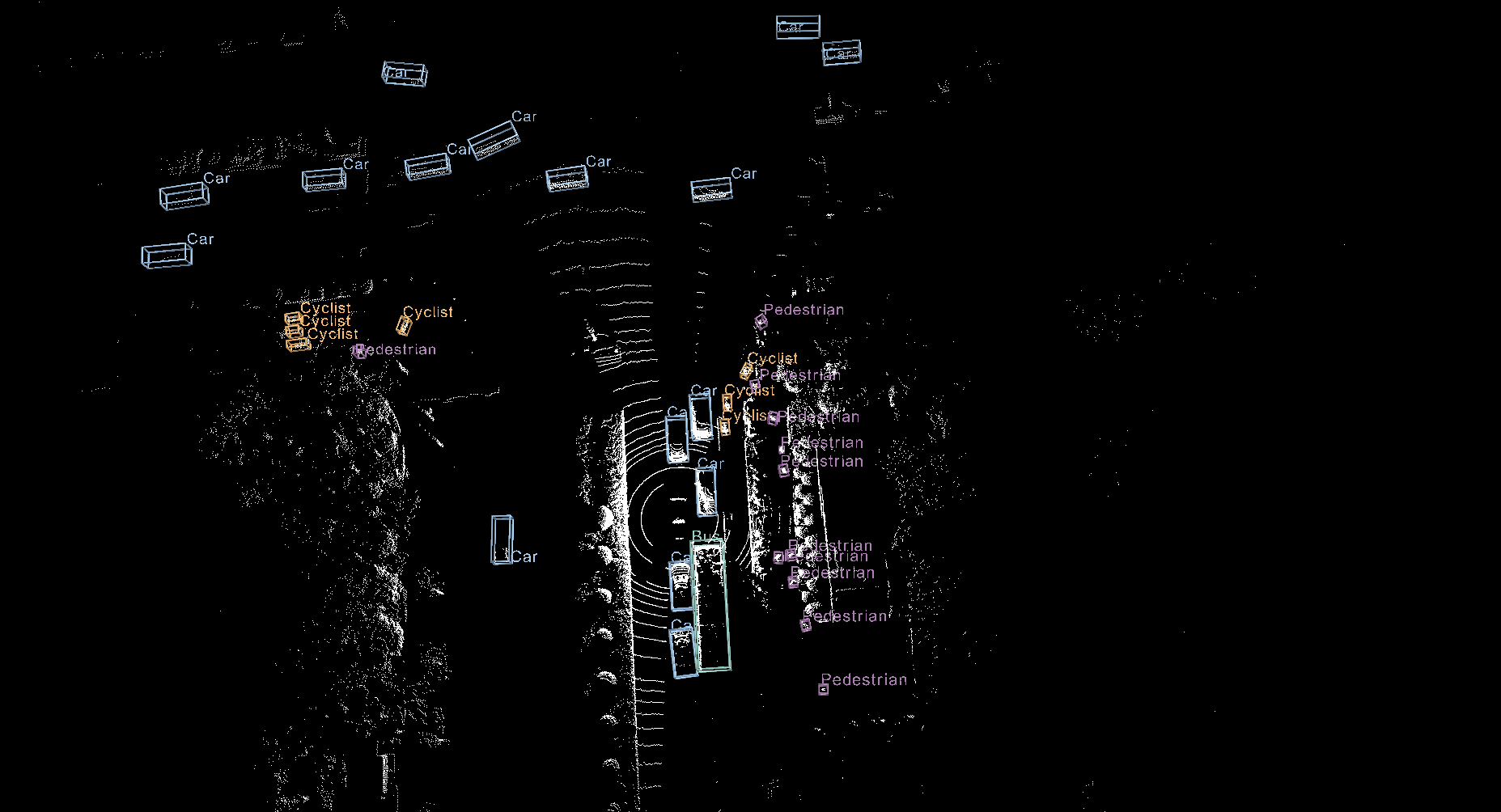

Autonomous driving systems, such as those used by Tesla or Waymo, require high-quality spatial data for training and testing. LiDAR (Light Detection and Ranging) provides precise 3D measurements of the environment: the sensor emits laser pulses, measures each pulse's time of flight to compute the distance to the reflecting surface, and combines that distance with the beam direction to place a point in 3D space. Accumulated over many scans, these points form point clouds that can contain millions or even billions of points. The point clouds then undergo several processing steps before they are usable for self-driving vehicles.
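The time-of-flight principle can be illustrated with a short sketch. It assumes an idealized sensor that reports a round-trip time and two beam angles; the function names and the angle convention (azimuth in the horizontal plane, elevation above it) are chosen here for illustration and are not taken from any particular LiDAR driver.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0  # speed of light in vacuum, metres per second


def tof_to_range(round_trip_time_s: float) -> float:
    """Convert a round-trip time of flight into a one-way range in metres."""
    # The pulse travels to the target and back, hence the division by two.
    return SPEED_OF_LIGHT_M_S * round_trip_time_s / 2.0


def polar_to_cartesian(range_m: float, azimuth_rad: float, elevation_rad: float):
    """Turn a range plus beam angles into an (x, y, z) point in the sensor frame."""
    horizontal = range_m * math.cos(elevation_rad)  # projection onto the ground plane
    x = horizontal * math.cos(azimuth_rad)
    y = horizontal * math.sin(azimuth_rad)
    z = range_m * math.sin(elevation_rad)
    return x, y, z


# Example: a pulse that returns after 200 nanoseconds hit something about 30 m away.
distance = tof_to_range(200e-9)
point = polar_to_cartesian(distance, math.radians(45.0), math.radians(2.0))
print(f"range = {distance:.2f} m, point = {point}")
```

Repeating this conversion for every returned pulse in a sweep is what produces one frame of a point cloud.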


Constructing a high-quality, consistent LiDAR dataset for autonomous cars is a difficult engineering problem that also requires significant time and money. Fortunately, a number of public LiDAR datasets are designed specifically for this use case. They eliminate the high cost of data collection, data annotation, and hardware maintenance, enabling researchers to focus on core engineering problems, such as improving vehicle safety and accuracy.

In this article, we are going to discuss 10 popular LiDAR datasets. Let's dive in.

Top 10 Open-Source LiDAR Datasets

How does one construct a public, real-world LiDAR dataset for autonomous vehicles that covers urban environments in different weather conditions? The idea is simple, but the implementation is hard and varies from team to team: you equip a car with LiDAR, stereo cameras, 360-degree RGB cameras, and GPS, and drive it through busy streets. That's it.

But how you design and operate that car, how you annotate the raw 3D point clouds, and which annotation and dataset formats you choose are what set one LiDAR dataset apart from another. The datasets below differ not only in format and size, but also in how they were collected.

The table below summarizes them:

| Name | Location | Year | Annotation Format | Size | License |
|---|---|---|---|---|---|
| KITTI | Germany | 2012 | 3D/2D Boxes, Semantic Seg. | 15k frames, 80k objects | CC BY-NC-SA 4.0 |
| nuScenes | USA, Singapore | 2019 | 3D Boxes, Attribute labels | 1,000 scenes, 1.4M boxes | CC BY-NC-SA 4.0 |
| Waymo Open | 6 US Cities | 2019 | 3D/2D Boxes, Key-points | 12 million 3D labels | Custom Non-Commercial |
| Argoverse 2 | 6 US Cities | 2021 | 3D Cuboids, HD Maps | 1,000 labeled scenarios | CC BY-NC-SA 4.0 |
| Toronto-3D | Canada | 2020 | Semantic Segmentation | 78.3 million points | CC BY-NC-SA 4.0 |
| PandaSet | USA | 2021 | 3D Cuboids, Semantic Seg. | 103 scenes, 28 classes | Apache 2.0 |
| ApolloScape | China | 2018 | 3D Cuboids, Disparity Maps | 140k frames, 1k km road | Custom Non-Commercial |
| Oxford RobotCar | UK | 2014 | 3D Cuboids, Semantic Seg. | 1,000 km recorded driving | CC BY-NC-SA 4.0 |
| A2D2 | Germany | 2020 | 3D Boxes, Semantic Seg. | 41k frames, 38 categories | CC BY-ND 4.0 |
| ONCE | China | 2021 | 3D Object Detection | 1 million frames (15k annotated) | CC BY-NC-SA 4.0 |

1. KITTI

- Year: 2012, 2015

- Geography: Karlsruhe, Germany

- Sensors: 2 grayscale cameras, 2 color cameras, Velodyne LiDAR, GPS/IMU

- Data Format: BIN (point clouds), PNG (images), and TXT (labels and calibration) files

- Annotations: 3D/2D object detection, tracking, semantic segmentation

- Size: 7,481 training frames, 7,518 test frames, and ~80K labeled objects

- License: CC BY-NC-SA 4.0

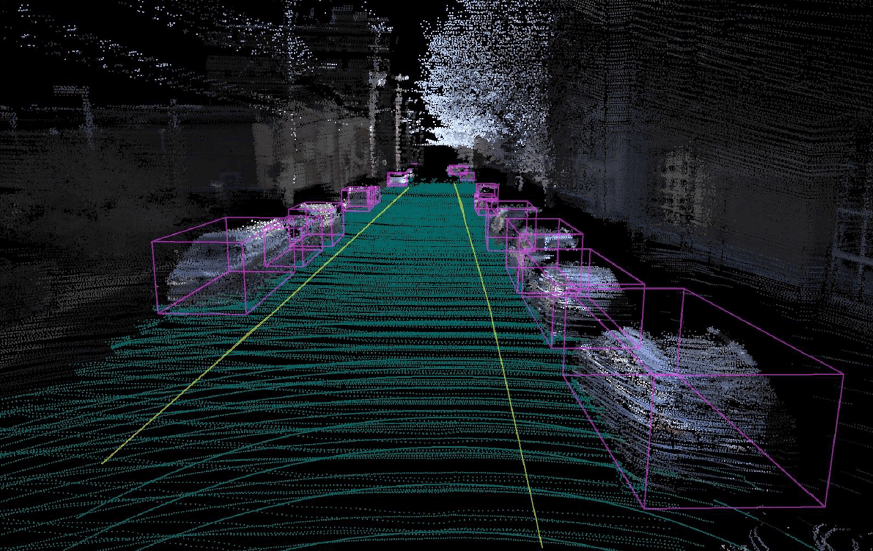

The KITTI Vision Benchmark Suite is a pioneering dataset (2012, 2015) developed by the Karlsruhe Institute of Technology and the Toyota Technological Institute at Chicago. It is widely used for benchmarking autonomous driving algorithms and ML models, and it gave rise to later, larger LiDAR datasets such as KITTI-360.

KITTI is an open-source dataset, but you need to create an account and wait for approval before you can access it. Note that the 3D cuboids were annotated from a top-down view, so their placement along the Z-axis can be inaccurate. Moreover, only objects visible in the camera images are labeled.
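To give a sense of what working with the BIN point cloud files looks like, here is a minimal sketch of a reader. It assumes the commonly described KITTI Velodyne layout, a flat sequence of little-endian float32 values with four per point (x, y, z, reflectance); the function name and the file path in the usage note are hypothetical, and the layout should be checked against the dataset's own development kit.

```python
import struct
from pathlib import Path

# Assumed layout: x, y, z, reflectance as four little-endian float32 values.
POINT_FORMAT = "<4f"
POINT_SIZE = struct.calcsize(POINT_FORMAT)  # 16 bytes per point


def read_kitti_bin(path):
    """Read a KITTI-style Velodyne scan into a list of (x, y, z, reflectance) tuples."""
    raw = Path(path).read_bytes()
    if len(raw) % POINT_SIZE != 0:
        raise ValueError("file size is not a multiple of 16 bytes")
    # iter_unpack walks the buffer one 16-byte point at a time.
    return list(struct.iter_unpack(POINT_FORMAT, raw))


def bounding_extent(points):
    """Return ((min_x, min_y, min_z), (max_x, max_y, max_z)) for a list of points."""
    xs, ys, zs = zip(*[(p[0], p[1], p[2]) for p in points])
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))
```

With a downloaded scan, a call such as `read_kitti_bin("velodyne/000000.bin")` (hypothetical path) would return the points of one frame. For full-size scans, an array library is usually a better fit than Python tuples; the standard library is used here only to keep the sketch dependency-free.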

2. nuScenes

- Year: 2019

- Geography: Boston (USA), Singapore

- Sensors: 6 cameras, 5 RADARs, 1 LiDAR, GPS/IMU

- Data Format: BIN, PNG and JSON files

- Annotations: 3D/2D object detection, tracking, semantic segmentation

- Size: 1,000 scenes of 20 s each; 1,400,000 camera images; 390,000 LiDAR sweeps; 1.4M manually annotated 3D bounding boxes across 23 object classes; 1.1B manually annotated LiDAR points across 32 classes

- License: CC BY-NC-SA 4.0

nuScenes is a high-resolution, multi-modal dataset collected in diverse weather and lighting conditions with 360-degree sensor coverage. It is the first dataset to include Radar and LiDAR fusion data.

Its labeled portion covers about 35K keyframes across 850 scenes recorded in Boston and Singapore, so the data includes both right-hand and left-hand traffic. You need to create an account before you can access the dataset.
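A 3D bounding box annotation is typically stored as a center, a size, and an orientation. The sketch below shows how such a record can be expanded into its eight corners. It assumes a nuScenes-style convention in which the size is given as (width, length, height) and the orientation as a unit quaternion (w, x, y, z); this field order is an assumption to verify against the dataset schema, and the sketch only handles rotation about the vertical axis.

```python
import math


def quaternion_to_yaw(w, x, y, z):
    """Heading angle (rotation about the vertical z-axis) of a unit quaternion."""
    return math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))


def box_corners(center, size, yaw):
    """Return the 8 corners of a box from center (x, y, z), size (width, length, height), and yaw."""
    cx, cy, cz = center
    width, length, height = size
    cos_yaw, sin_yaw = math.cos(yaw), math.sin(yaw)
    corners = []
    # Length runs along the box's local x-axis, width along its local y-axis.
    for dx in (length / 2, -length / 2):
        for dy in (width / 2, -width / 2):
            for dz in (height / 2, -height / 2):
                # Rotate the offset about the z-axis, then translate to the box center.
                corners.append((cx + dx * cos_yaw - dy * sin_yaw,
                                cy + dx * sin_yaw + dy * cos_yaw,
                                cz + dz))
    return corners


# Example: a car-sized box rotated 90 degrees about the vertical axis.
yaw = quaternion_to_yaw(math.cos(math.pi / 4), 0.0, 0.0, math.sin(math.pi / 4))
for corner in box_corners((10.0, 5.0, 1.0), (2.0, 4.5, 1.6), yaw):
    print(tuple(round(value, 2) for value in corner))
```

Corner coordinates like these are what a visualizer draws over the point cloud, and they are also the starting point for checking which LiDAR points fall inside a labeled object.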

3. Waymo Open Dataset

- Year: 2019

- Geography: 6 US cities

- Sensors: 5 LiDARs and 5 cameras

- Data Format: Sharded TFRecord format files containing protocol buffer data

- Annotations: Wide variety covering tracked 2D/3D objects, key-points & segmentation for 2D/3D

- Size: 3 datasets, 12 million 3D labels for vehicles and pedestrians

- License: Custom non-commercial license

The Waymo Open Dataset offers a wide variety of annotations over a large number of frames, captured across varied locations and conditions: downtown and suburban areas, daylight and night, and rain.

It is composed of three datasets: the Perception Dataset, with high-resolution sensor data and labels for 2,030 segments; the Motion Dataset, with object trajectories and corresponding 3D maps for 103,354 segments; and the End-to-End Driving Dataset, with camera images providing 360-degree coverage and routing instructions for 5,000 segments.
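To make the "sharded TFRecord" format less opaque, the sketch below walks the record framing of a TFRecord stream using only the standard library. It relies on the documented framing (an 8-byte little-endian length, a 4-byte checksum of the length, the payload, and a 4-byte checksum of the payload) and, as a simplification, skips checksum verification. The function name is hypothetical. Each payload would be a serialized protocol buffer, and decoding it requires the dataset's own message definitions, which are not shown here.

```python
import io
import struct


def iter_tfrecord_payloads(stream):
    """Yield the raw payload bytes of each record in a TFRecord byte stream."""
    while True:
        header = stream.read(12)  # 8-byte length + 4-byte CRC of the length
        if not header:
            return  # clean end of stream
        if len(header) < 12:
            raise ValueError("truncated record header")
        (length,) = struct.unpack("<Q", header[:8])
        payload = stream.read(length)
        footer = stream.read(4)  # 4-byte CRC of the payload (not verified in this sketch)
        if len(payload) < length or len(footer) < 4:
            raise ValueError("truncated record body")
        yield payload


def fake_record(data):
    """Build a synthetic record with zeroed checksums, for demonstration only."""
    return struct.pack("<Q", len(data)) + b"\x00" * 4 + data + b"\x00" * 4


# Demonstration on an in-memory stream holding two synthetic records.
demo = io.BytesIO(fake_record(b"frame-0") + fake_record(b"frame-1"))
for index, payload in enumerate(iter_tfrecord_payloads(demo)):
    print(index, len(payload), "bytes")
```

In practice the official tooling handles both the framing and the protobuf decoding; the point of the sketch is only that a shard is a simple sequence of length-prefixed binary messages, which is why the files can be split and streamed easily.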

4. Argoverse 2

- Year: 2021

- Geography: 6 US cities

- Sensors: 2 LiDAR sensors, 7 ring cameras and 2 stereo cameras

- Data Format: Point cloud files and annotations as Apache Feather files, raw images as PNG files

- Annotations: Annotated HD maps, ground points, tracked 3D cuboids, motion forecasting and map change detection data

- Size: 4 open-source datasets

- License: CC BY-NC-SA 4.0

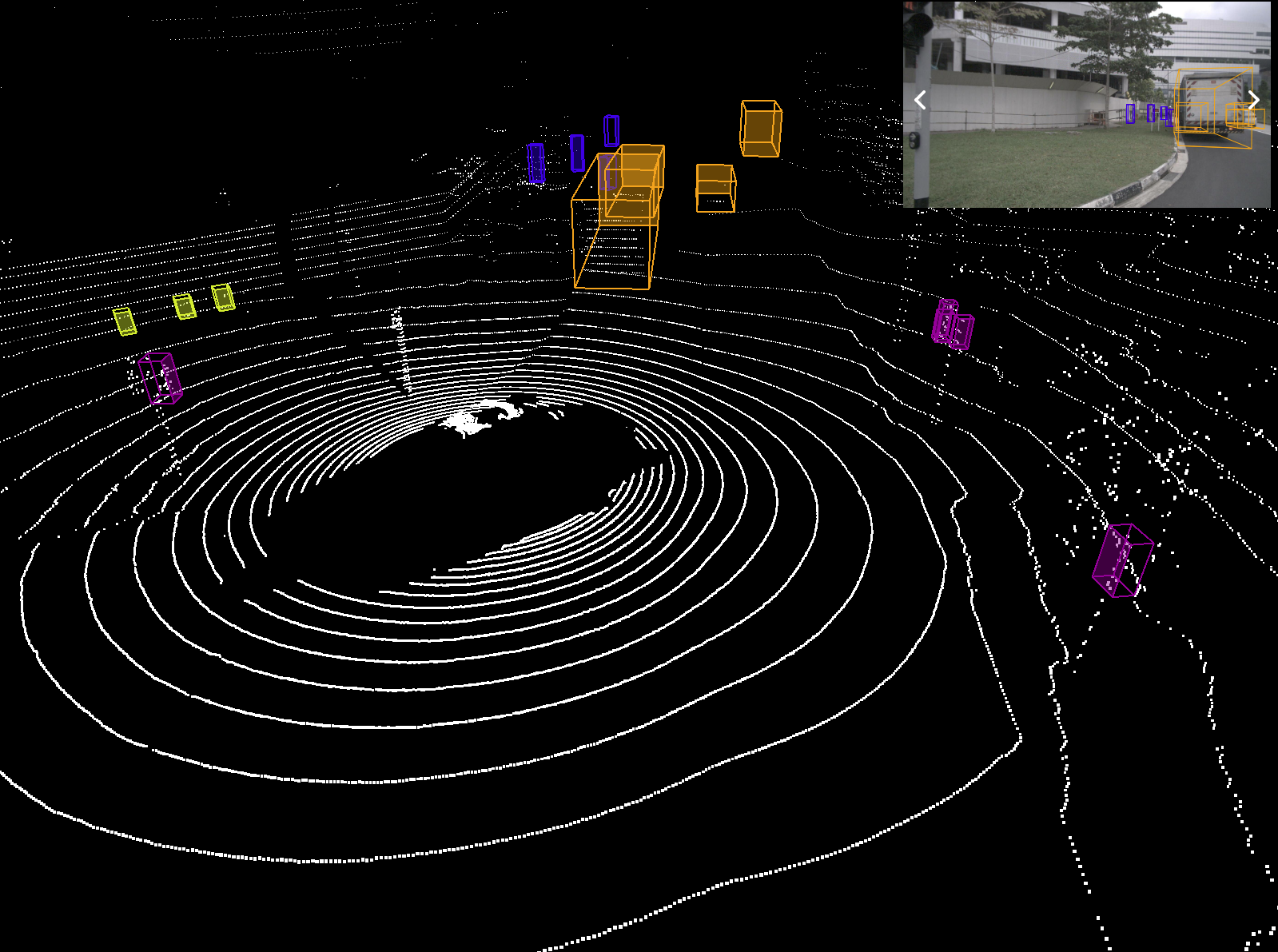

Argoverse 2 is a comprehensive dataset for a number of tasks, including 3D object detection, tracking, HD map change detection, and motion forecasting. The Argoverse 2 Sensor Dataset contains 1,000 3D-labeled scenarios of 15 seconds each.

The Argoverse 2 Motion Forecasting Dataset has 250,000 scenarios with trajectory data for many object types, while the Lidar Dataset contains 20,000 unannotated LiDAR sequences. Finally, the Map Change Dataset provides 1,000 scenarios, 200 of which depict real-world HD map changes.

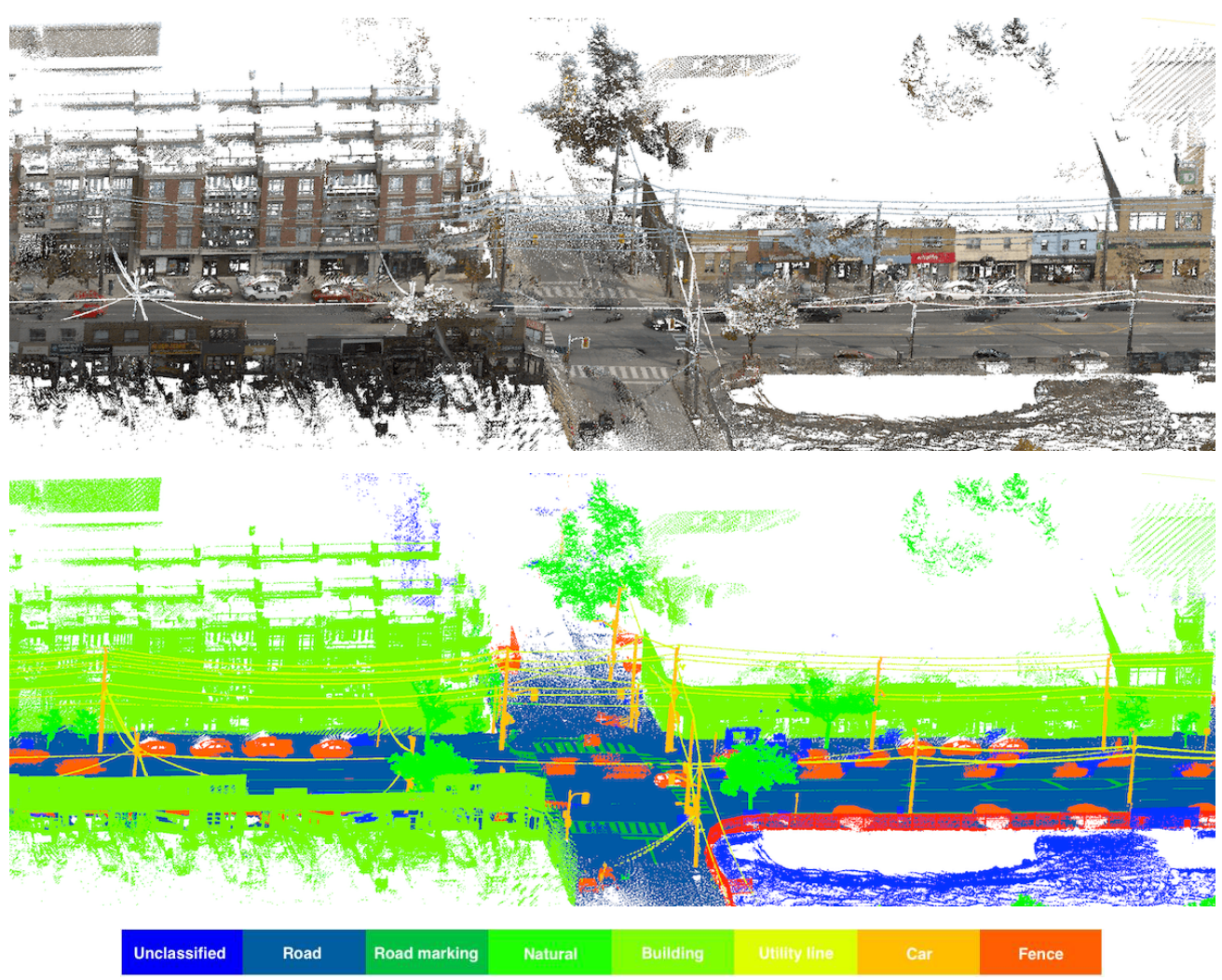

5. Toronto-3D

- Year: 2020

- Geography: Toronto, Canada

- Sensors: 1 LiDAR

- Data Format: Point cloud files as BIN files, annotations in PLY format

- Annotations: Semantic segmentation of Lidar data

- Size: 1 km of road + 78.3 million labeled points

- License: CC BY-NC-SA 4.0

Toronto-3D is a large-scale dataset for semantic segmentation of urban outdoor scenes. It was collected using a Teledyne Optech Maverick mobile mapping system and covers approximately 1 km of Avenue Road in Toronto, Canada. It contains about 78.3 million points labeled into eight categories.

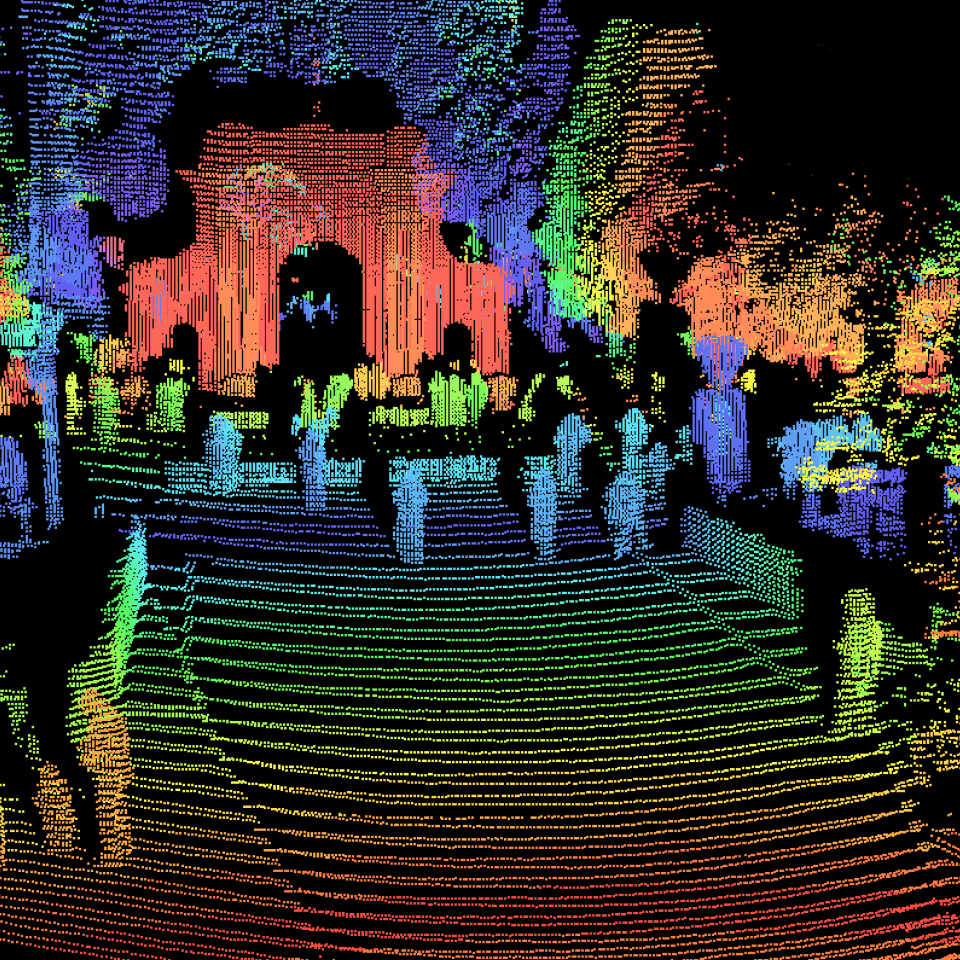

6. PandaSet

- Year: 2021

- Geography: San Francisco, California

- Sensors: 1 mechanical spinning LiDAR (360-degree), 1 forward-facing solid-state LiDAR, 6 cameras, on-board GPS/IMU

- Data Format: Pickle format for LiDAR and JPG for images.

- Annotations: 3D cuboid and semantic segmentation data

- Size: 48,000 camera images, 16,000 LiDAR sweeps, 103 scenes of 8s each, 28 annotation classes, 37 semantic segmentation labels.

- License: Apache License, Version 2.0

PandaSet includes 103 scenes of 8 seconds each. The dataset provides labels for complex classes such as smoke, vegetation, and construction zones. It is useful for studying sensor-specific noise patterns.

Created by Hesai and Scale AI, PandaSet is unique because it features two different types of LiDAR: a mechanical spinning LiDAR and a solid-state LiDAR. This allows researchers to study how different sensor architectures affect perception.

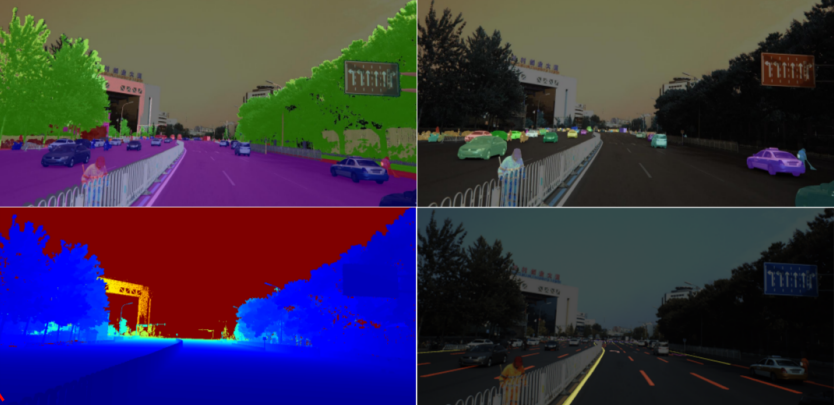

7. ApolloScape

- Year: 2018

- Geography: Beijing, China

- Sensors: 2 lidars

- Data Format: Point cloud files as PCD files, images in PNG format and annotations as JSON files

- Annotations: 3D cuboid annotations for LiDAR, disparity maps for stereo, and lane detection

- Size: 100K image frames, 80K LiDAR point clouds, and 1,000 km of trajectories

- License: Custom non-commercial license

Baidu's ApolloScape consists of datasets for 3D object detection, lane detection, disparity maps for stereo images, scene inpainting, and trajectory prediction.

ApolloScape offers high-resolution 3D point cloud segmentation. It includes over 140,000 frames of sensor data. The dataset is known for its high density of non-motorized vehicles. It provides sub-centimeter accuracy for static environmental maps.
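PCD is the Point Cloud Library's file format: a short text header that names the fields, followed by the point data. As a rough illustration, the sketch below parses the ASCII variant only. It assumes one value per field (a `COUNT` of 1) and `DATA ascii`; files in a real dataset may well be stored as binary instead, which this sketch deliberately rejects. The function name and the sample values are made up for the example.

```python
def parse_ascii_pcd(text):
    """Parse an ASCII PCD document into (header dict, list of per-point dicts)."""
    header = {}
    lines = iter(text.splitlines())
    for line in lines:
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        key, *values = line.split()
        header[key] = values
        if key == "DATA":
            break  # everything after the DATA line is point data
    if header.get("DATA") != ["ascii"]:
        raise ValueError("this sketch only handles 'DATA ascii' files")
    fields = header["FIELDS"]
    points = []
    for line in lines:  # continue from the first line after the header
        parts = line.split()
        if parts:
            points.append({name: float(value) for name, value in zip(fields, parts)})
    return header, points


SAMPLE = """# .PCD v0.7 - Point Cloud Data file format
VERSION 0.7
FIELDS x y z intensity
SIZE 4 4 4 4
TYPE F F F F
COUNT 1 1 1 1
WIDTH 2
HEIGHT 1
VIEWPOINT 0 0 0 1 0 0 0
POINTS 2
DATA ascii
1.0 2.0 3.0 0.5
-4.0 0.0 1.5 0.25
"""

header, points = parse_ascii_pcd(SAMPLE)
print(header["FIELDS"], len(points), points[0])
```

Because the header is self-describing, the same approach works whether a file carries only x, y, z or additional per-point attributes such as intensity.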

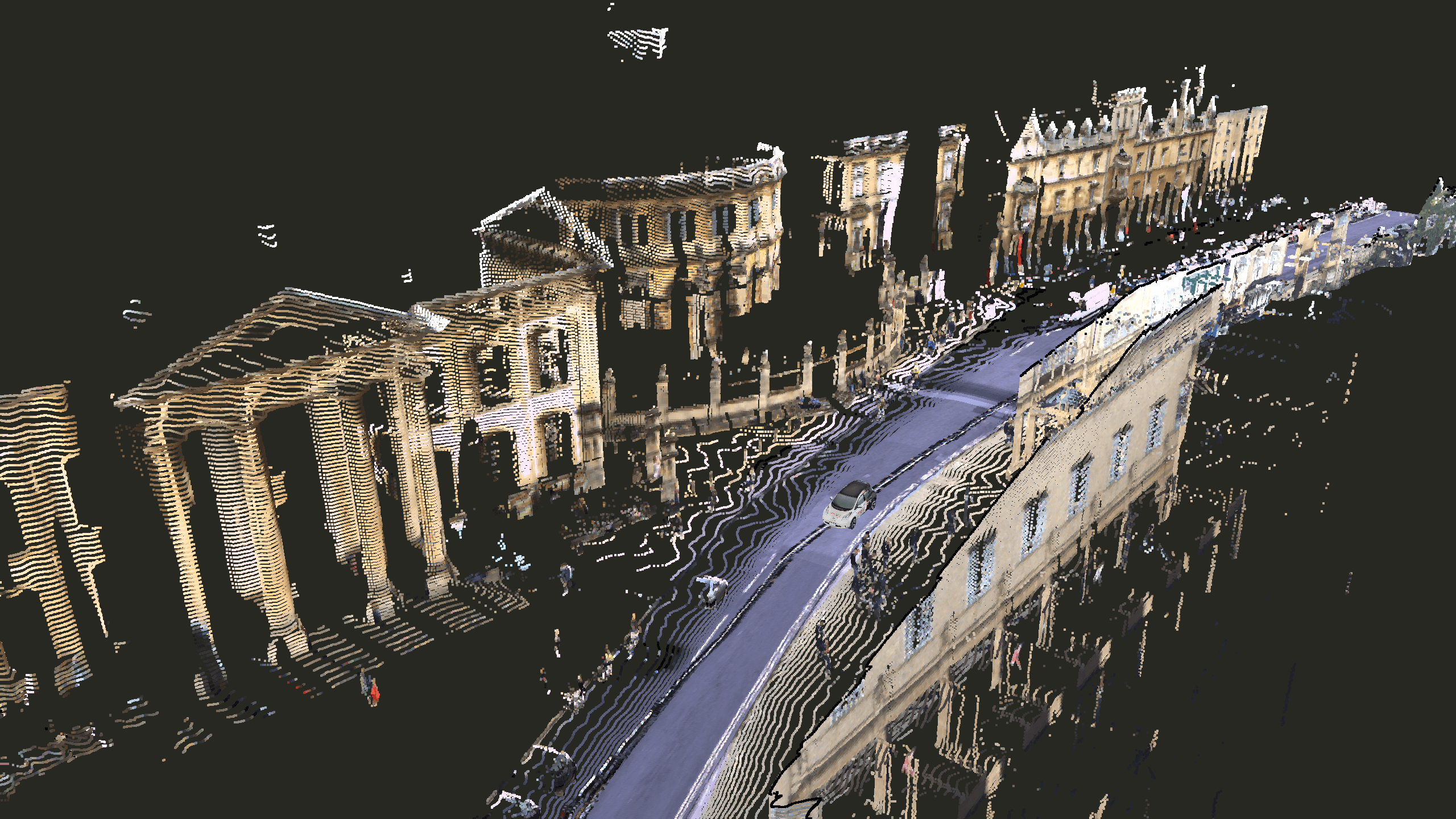

8. Oxford RobotCar Dataset

- Year: 2014-2015

- Geography: Oxford, UK

- Sensors: 6 cameras, LiDAR, GPS, INS

- Data Format: Images and Radar scans as PNG, LiDAR as BIN

- Annotations: 3D cuboid annotations and semantic segmentation

- Size: 1,000 km of recorded driving with over 20 million images

- License: CC BY-NC-SA 4.0

This dataset captures the same 10 km route more than 100 times over the course of a year, recording variations in lighting, weather, and traffic. That repetition makes the data valuable for testing localization and mapping (SLAM) algorithms. It uses two LMS-151 2D LiDARs and one HDL-32E 3D LiDAR.

9. A2D2 Dataset

- Year: 2020

- Geography: Germany (3 cities)

- Sensors: 5 lidars and 6 cameras

- Data Format: Point cloud files as NPZ (NumPy zip) files, images in PNG format and annotations as JSON files

- Annotations: LiDAR and image semantic segmentation, 3D cuboid annotations, and sensor data

- Size: 40K images and point cloud files for semantic segmentation; 12K point cloud frames for 3D object detection.

- License: CC BY-ND 4.0

The Audi Autonomous Driving Dataset (A2D2) provides 41,277 frames with semantic segmentation labels, about 12K of which also have 3D bounding boxes. It includes five Velodyne VLP-16 sensors. The labels are provided for 38 categories. It features semantic segmentation for both 2D and 3D data.

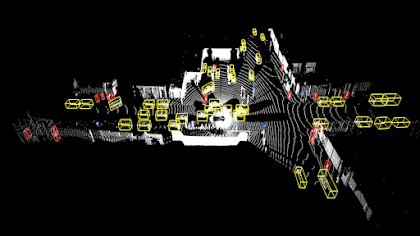

10. ONCE

- Year: 2021

- Geography: China (urban areas)

- Sensors: LiDAR and 7 RGB cameras

- Data Format: PCD, PNG and JSON files

- Annotations: Partially annotated for 3D object detection

- Size: 1 million LiDAR frames, 7 million camera images, 15K fully annotated scenes with 5 classes

- License: CC BY-NC-SA 4.0

ONCE (One Million Scenes) is one of the largest autonomous driving datasets and focuses on real-world urban scenes. It targets the development of self-supervised learning: it provides 1 million LiDAR frames from various Chinese cities, of which only a subset of 15,000 is fully annotated. This structure pushes models to learn from unlabeled spatial features.

Conclusion

Open-source LiDAR datasets have transformed AV research, allowing startups and universities to test novel ideas and put them into action. By covering diverse geographic, weather, and traffic conditions, these datasets help make the next generation of autonomous vehicles safe, reliable, and capable of navigating the complexities of the real world.

