Most AI systems today live in the digital world. They generate text, classify images, detect objects, and recommend products. But they do not interact with the physical world.

Physical AI is different.

It connects the digital and physical worlds, enabling machines to interact with their surroundings. While traditional AI runs on servers, physical AI systems can sense, decide, act, and learn in real-world environments.

In this guide, here’s what we will cover:

- What is physical AI?

- The perception stack and components of physical AI

- How do the perception stack and its components work together in physical AI?

- What data is required for physical AI?

- The role of data annotation in physical AI

- Failure modes and technical challenges in physical AI

- Physical AI use cases

- The future of physical AI

Building physical AI depends on high-quality datasets. But the quality of your data annotation matters just as much.

Unitlab AI helps you annotate your data 15x faster and more accurately using advanced auto-annotation tools.

What is Physical AI?

Physical AI refers to intelligence that enables autonomous systems to perceive, understand, and reason about the physical world, and to perform and control real-world actions.

For example, a self-driving car uses cameras and LiDAR to detect nearby cars and pedestrians, applies machine learning to decide on steering and braking, and then executes those actions through its motors.

Simply put, physical AI represents the convergence of digital intelligence and robotic control: machines that can interact in the same space we live in.

The Perception Stack and Components of Physical AI

A physical AI system is built on a perception stack that integrates several foundational technologies. Each plays a key role in the sense–decide–act loop:

Sensors: Collecting Environmental Information

Sensors collect raw information from the physical environment. Common sensors include:

- Cameras: These provide visual perception. They allow the system to recognize objects, identify defects on a production line, and interpret visual cues like human gestures.

- LiDAR: Light detection and ranging sensors use laser pulses to measure distance and create detailed 3D maps. LiDAR is essential for spatial mapping and navigation in complex environments.

- Environmental Sensors: Track temperature, pressure, and vibration to monitor the health of physical systems.

- Acoustic Sensors: Used for sound-based monitoring and detecting mechanical anomalies.

- Radar and Proximity Sensors: Radar measures distance and relative speed and keeps working in poor visibility such as rain or fog, while proximity sensors detect nearby objects at short range, allowing fine-tuned movements in tight spaces.
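The ranging principles behind LiDAR and radar reduce to two idealized formulas. The sketch below assumes a single clean echo with no noise or signal processing: LiDAR distance comes from a pulse's round-trip time of flight, and radar closing speed comes from the Doppler shift, v = f_d·c / (2·f_c). The 77 GHz carrier in the example is a typical automotive radar frequency, used here only as an illustrative input.

```python
# Speed of light in a vacuum, m/s (exact SI value); close enough in air for this sketch.
SPEED_OF_LIGHT_MPS = 299_792_458.0


def lidar_range_m(round_trip_time_s: float) -> float:
    """Distance to a target from a LiDAR pulse's round-trip time of flight.

    The pulse travels to the target and back, so the one-way distance is
    half of the total path length.
    """
    return SPEED_OF_LIGHT_MPS * round_trip_time_s / 2.0


def radar_radial_speed_mps(doppler_shift_hz: float, carrier_freq_hz: float) -> float:
    """Closing speed of a target from the Doppler shift of a radar echo.

    For a radar that transmits and receives at the same place, the shift is
    f_d = 2 * v * f_c / c, so v = f_d * c / (2 * f_c). A positive result means
    the target is approaching.
    """
    return doppler_shift_hz * SPEED_OF_LIGHT_MPS / (2.0 * carrier_freq_hz)


if __name__ == "__main__":
    print(f"LiDAR range: {lidar_range_m(200e-9):.2f} m")  # about 30 m
    print(f"Radar speed: {radar_radial_speed_mps(5133.0, 77e9):.2f} m/s")  # about 10 m/s
```

Real sensors add calibration, filtering, and multi-echo handling on top of these relationships.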

Actuators: Executing Physical Actions

Actuators turn AI agent decisions into physical movement:

- Robotic Arms: Perform manipulation, grasping, and assembly in manufacturing.

- Motors and Drives: Provide movement and precise positioning for mobile robots.

- Valves and Switches: Control fluid or air flow in industrial process environments.

- Conveyors: Handle material transport in automated logistics.

Simply put, actuators translate digital commands into motion or forces. For example, after an AI model classifies an object on a belt, a robotic arm actuator can adjust its grip to pick it up.
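As a minimal sketch of that hand-off, the function below turns a classifier's output into a gripper command. The class names, grip profiles, confidence threshold, and force limit are all hypothetical placeholders; a real cell would take them from the gripper's datasheet and the part specifications.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class GripCommand:
    width_mm: float  # how far the gripper fingers open
    force_n: float   # how hard the fingers squeeze


# Hypothetical grip profiles per detected class; real values depend on the hardware.
GRIP_PROFILES = {
    "cardboard_box": GripCommand(width_mm=120.0, force_n=25.0),
    "glass_bottle": GripCommand(width_mm=80.0, force_n=8.0),
    "metal_bracket": GripCommand(width_mm=40.0, force_n=45.0),
}
MAX_FORCE_N = 30.0  # hypothetical safe force limit of this actuator


def grip_for(label: str, confidence: float,
             min_confidence: float = 0.8) -> Optional[GripCommand]:
    """Translate a classifier's output into a gripper command.

    Returns None (skip the pick) when the class is unknown or the model is
    unsure, and never commands more force than the actuator limit.
    """
    if confidence < min_confidence or label not in GRIP_PROFILES:
        return None
    profile = GRIP_PROFILES[label]
    return GripCommand(profile.width_mm, min(profile.force_n, MAX_FORCE_N))


command = grip_for("glass_bottle", confidence=0.93)  # gentle grip for fragile glass
```

Returning `None` for low-confidence detections keeps the arm from acting on a guess; a real system might route such items to a human or a second inspection pass.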

AI Algorithms: Processing and Learning

AI algorithms process information, learn from experience, and make decisions that drive physical actions.

- Computer Vision: Interprets camera images (pixels) to recognize objects and track motion.

- Machine Learning: Learns patterns from data, so performance can keep improving as more examples are collected.

- Reinforcement Learning: Teaches autonomous systems optimal behaviors through trial and error, often in a simulated environment.

- Agentic Reasoning: Allows AI agents to perform multi-step planning and coordination. An agent breaks a goal into sub-tasks, such as deciding which tool to use and then coordinating the robot's movement to pick it up.
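Trial-and-error learning can be illustrated with tabular Q-learning, one classic reinforcement learning algorithm, chosen here for brevity. The sketch below uses a hypothetical toy "simulator": a robot on a six-cell corridor that earns a reward for reaching the far end. Real robot training uses physics simulators and neural-network policies rather than a lookup table.

```python
import random

N_STATES = 6        # positions 0..5 along a corridor; the goal is position 5
GOAL = N_STATES - 1
ACTIONS = (-1, +1)  # move left, move right


def step(state: int, action: int) -> tuple[int, float, bool]:
    """Toy simulated environment: walls at both ends, +1 reward at the goal,
    and a small -0.01 cost per move so shorter paths score higher."""
    next_state = min(max(state + action, 0), GOAL)
    done = next_state == GOAL
    return next_state, (1.0 if done else -0.01), done


def best_action(q_row: list[float]) -> int:
    """Index of the highest-valued action (ties go to the first one)."""
    return max(range(len(q_row)), key=lambda i: q_row[i])


def train(episodes: int = 500, alpha: float = 0.5, gamma: float = 0.9,
          epsilon: float = 0.1, seed: int = 0) -> list[list[float]]:
    rng = random.Random(seed)
    q = [[0.0] * len(ACTIONS) for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: usually exploit the best known action, sometimes explore.
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))
            else:
                a = best_action(q[state])
            next_state, reward, done = step(state, ACTIONS[a])
            # Q-learning update toward reward + discounted value of the best next action.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


q_table = train()
policy = [ACTIONS[best_action(q_table[s])] for s in range(GOAL)]
print(policy)  # learned move for each non-goal position
```

Early episodes wander because every action looks equally good; the reward signal gradually propagates back from the goal until the greedy policy heads straight for it.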

Embedded Systems: Processing at the Edge

Physical AI requires low latency, which means data must be processed locally on edge devices. Embedded systems enable this real-time processing at the edge, so the machine can react to its surroundings immediately.

- Edge AI (GPUs, FPGAs, embedded CPUs): Local processing that avoids the delay of sending data to the cloud.

- Real-time Operating Systems (RTOS): Ensure that code executes with predictable, bounded timing, so critical tasks meet their deadlines.

- Communication Protocols: Allow different physical systems to coordinate with each other.
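The timing discipline an RTOS provides can be illustrated with a fixed-rate control loop that checks whether each cycle finishes before its deadline. This is only a sketch: ordinary Python on a general-purpose OS can detect missed deadlines but cannot guarantee them the way an RTOS scheduler can. The 10 ms period in the example is an arbitrary choice.

```python
import time
from typing import Callable


def run_fixed_rate(step: Callable[[], None], period_s: float, cycles: int) -> int:
    """Call `step` once per period and return how many cycles overran their deadline."""
    missed = 0
    next_deadline = time.perf_counter() + period_s
    for _ in range(cycles):
        step()  # one sense-decide-act iteration would go here
        now = time.perf_counter()
        if now > next_deadline:
            missed += 1
            # Resynchronize rather than firing a burst of late cycles to catch up.
            next_deadline = now + period_s
        else:
            time.sleep(next_deadline - now)  # idle until the next cycle starts
            next_deadline += period_s
    return missed


# Hypothetical 100 Hz loop (10 ms period) with a trivial step, run for 20 cycles.
missed = run_fixed_rate(lambda: None, period_s=0.01, cycles=20)
print(f"missed deadlines: {missed}")
```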

Governance Systems: Ensuring Safety

Because real-world risks are high, governance systems ensure safety, compliance, and control over the autonomous system.

- Observability Platforms: Monitor the behavior of physical AI models in real time.

- Policy Engines: Enforce strict rules on where and how an autonomous machine can operate.

- Audit Systems: Record every decision for accountability and safety reviews.

- Safety Controls: These include manual and automated emergency stops (kill switches) and human-in-the-loop protocols to ensure humans can intervene when necessary.
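A minimal sketch of how a policy engine, an audit log, and an emergency stop might fit together. The speed limit, zone names, and command format are hypothetical; production systems typically rely on dedicated, certified safety hardware, and this code only illustrates the decision logic.

```python
import json
import time
from dataclasses import dataclass, field


@dataclass
class Policy:
    # Hypothetical limits; real values come from a site's risk assessment.
    max_speed_mps: float = 1.5
    allowed_zones: frozenset = frozenset({"aisle_a", "aisle_b"})


@dataclass
class GovernanceLayer:
    policy: Policy
    audit_log: list = field(default_factory=list)
    estop_engaged: bool = False

    def review(self, command: dict) -> bool:
        """Approve or reject a proposed motion command and record the decision."""
        reasons = []
        if self.estop_engaged:
            reasons.append("emergency stop engaged")
        if command["speed_mps"] > self.policy.max_speed_mps:
            reasons.append("speed limit exceeded")
        if command["zone"] not in self.policy.allowed_zones:
            reasons.append("zone not permitted")
        approved = not reasons
        # Append-only record so every decision can be reviewed later.
        self.audit_log.append(json.dumps({
            "time": time.time(),
            "command": command,
            "approved": approved,
            "reasons": reasons,
        }))
        return approved


governance = GovernanceLayer(Policy())
ok = governance.review({"speed_mps": 2.4, "zone": "aisle_a"})  # rejected: too fast
```

Logging rejections as well as approvals is what makes the audit trail useful for safety reviews.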

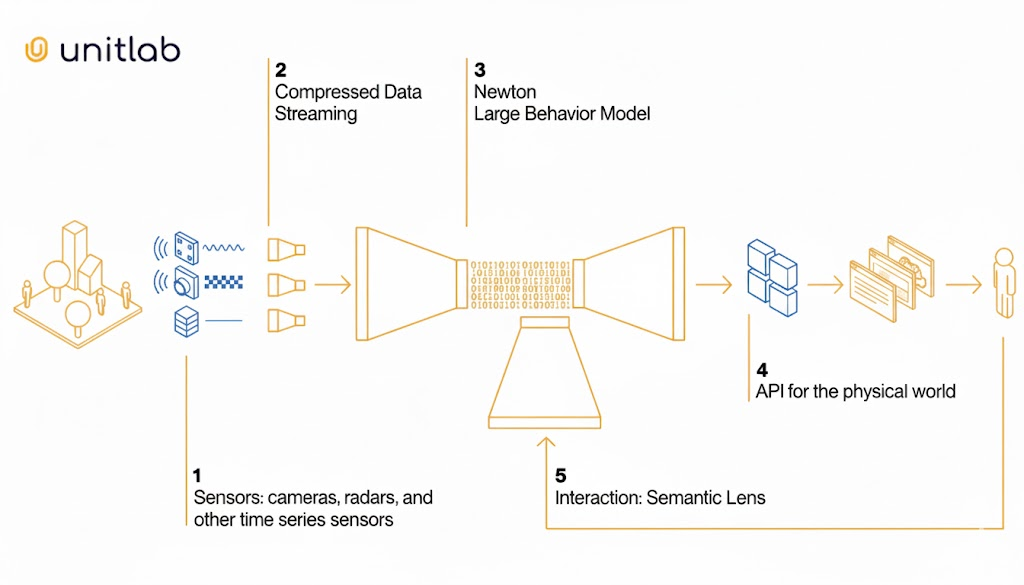

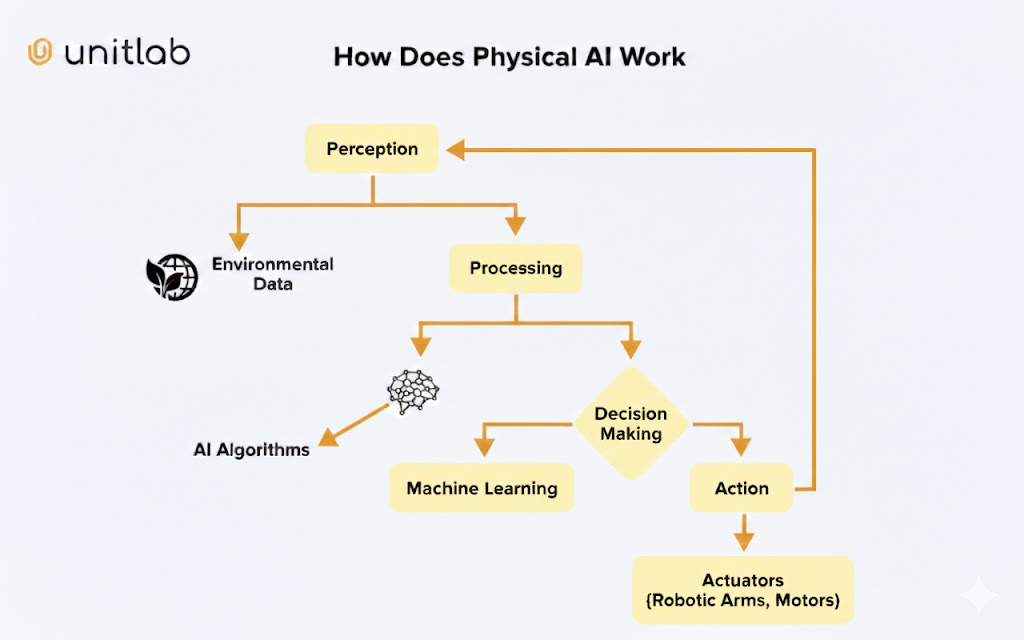

How Do the Perception Stack and Its Components Work Together in Physical AI?

These components operate as a closed loop, with hardware and software working together to maintain physical autonomy. Consider a robotic arm picking up a package.

The loop begins with perception: the sensors (cameras and LiDAR) capture a 3D point cloud and high-resolution images of the package. AI algorithms immediately process that data to decide what to do.

The algorithms perform spatial reasoning to determine the object's exact position and orientation (its 6DoF pose: three translational and three rotational degrees of freedom).
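A pose measured in the camera's frame still has to be expressed in the arm's own base frame before the arm can reach it. A minimal sketch of that conversion, assuming the camera's 6DoF pose relative to the base is known from calibration and given as roll-pitch-yaw angles (ZYX convention; many robotics stacks use quaternions instead). The mounting values are hypothetical.

```python
import math


def rotation_from_rpy(roll: float, pitch: float, yaw: float) -> list[list[float]]:
    """3x3 rotation matrix R = Rz(yaw) @ Ry(pitch) @ Rx(roll), angles in radians."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def to_parent_frame(pose: tuple, point: tuple) -> tuple:
    """Express `point` (given in a child frame, e.g. the camera) in the parent
    frame (e.g. the robot base), where pose = (x, y, z, roll, pitch, yaw) is the
    child frame's 6DoF pose in the parent: p_parent = R @ p_child + t."""
    x, y, z, roll, pitch, yaw = pose
    r = rotation_from_rpy(roll, pitch, yaw)
    t = (x, y, z)
    return tuple(sum(r[i][j] * point[j] for j in range(3)) + t[i] for i in range(3))


# Hypothetical setup: camera 0.5 m in front of the base, 1 m up, yawed 90 degrees.
camera_pose = (0.5, 0.0, 1.0, 0.0, 0.0, math.pi / 2)
package_in_camera = (1.0, 0.0, 0.0)  # package detected 1 m along the camera's x axis
package_in_base = to_parent_frame(camera_pose, package_in_camera)  # about (0.5, 1.0, 1.0)
```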

The reasoning engine then sends motion commands to the actuators.

The robotic arm's motors move the gripper toward the object while the governance system's policy engine monitors the torque and speed to ensure it does not hit any obstacles or exert too much force.

If the sensors detect a sudden change, such as a person stepping nearby, the safety controls trigger an immediate pause or path correction.

This closed loop means that every action generates new sensor data, which the system immediately uses to adjust its next move, creating a stable and adaptive interaction with the real world.
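The pick-up loop can be reduced to a one-dimensional toy sketch: each cycle senses the gripper position and the nearest person's distance, decides on a small move, and acts. The safety distance, step size, and ideal actuator are hypothetical simplifications; real systems plan full 3D motions.

```python
from dataclasses import dataclass

SAFETY_DISTANCE_M = 1.0  # hypothetical: pause if a person is closer than this
MAX_STEP_M = 0.05        # hypothetical: largest gripper move per control cycle


@dataclass
class Observation:
    gripper_pos_m: float
    target_pos_m: float
    nearest_person_m: float


def decide(obs: Observation) -> float:
    """Return the next gripper displacement (0.0 means hold position)."""
    if obs.nearest_person_m < SAFETY_DISTANCE_M:
        return 0.0  # safety control: pause while a person is close
    error = obs.target_pos_m - obs.gripper_pos_m
    # Move toward the target, clamped so each step stays small and predictable.
    return max(-MAX_STEP_M, min(MAX_STEP_M, error))


def run_loop(target_m: float, person_distances: list[float]) -> list[float]:
    """Simulated sense -> decide -> act loop. The position produced by each
    action is fed back in as part of the next observation."""
    pos = 0.0
    trace = []
    for person_m in person_distances:
        obs = Observation(pos, target_m, person_m)  # sense
        move = decide(obs)                          # decide
        pos += move                                 # act (ideal actuator)
        trace.append(round(pos, 3))
    return trace


# A person steps within the safety distance during the second cycle.
trace = run_loop(0.12, [5.0, 0.5, 5.0, 5.0])
```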

What Data Is Required for Physical AI?

Building robust physical AI models requires diverse sensor data that captures the range of situations the system will encounter in the real world.

Training data must include images, video, depth maps, and motion data collected in different lighting conditions, weather scenarios, and operational contexts.

Multi-Modal Sensor Data

Physical AI systems rely on synchronized streams of data from various sensors.

For a self-driving car or an autonomous mobile robot, this includes video from multiple cameras, 3D point clouds from LiDAR, and sparse velocity signals from radar.

Motion data from IMUs (Inertial Measurement Units) and joint encoders also provides proprioception, the machine's internal sense of its own body position.
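Before these streams can be used together, each camera frame has to be paired with the LiDAR sweep captured closest in time. The sketch below assumes both streams are already timestamped against a shared clock and sorted; the 50 ms tolerance is a hypothetical value for a 10 Hz LiDAR.

```python
import bisect


def match_nearest(camera_ts: list[float], lidar_ts: list[float],
                  max_skew_s: float = 0.05) -> list[tuple[float, float]]:
    """Pair each camera timestamp with the closest LiDAR timestamp.

    Both lists must be sorted (seconds, shared clock). Camera frames with no
    LiDAR sweep within `max_skew_s` are dropped instead of paired with stale data.
    """
    pairs = []
    for t in camera_ts:
        i = bisect.bisect_left(lidar_ts, t)
        # The nearest sweep is either the one just before t or the one at/after t.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(lidar_ts)]
        if not candidates:
            continue
        j = min(candidates, key=lambda k: abs(lidar_ts[k] - t))
        if abs(lidar_ts[j] - t) <= max_skew_s:
            pairs.append((t, lidar_ts[j]))
    return pairs


# Hypothetical timestamps: ~30 fps camera, 10 Hz LiDAR; the last frame has no close sweep.
camera = [0.000, 0.033, 0.067, 0.100, 0.300]
lidar = [0.0, 0.1, 0.2]
synced = match_nearest(camera, lidar)
```

Binary search keeps each lookup logarithmic in the number of sweeps, which matters for multi-hour recordings.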

But real-world data collection is difficult: a single autonomous vehicle might need tens of thousands of hours of driving footage to encounter edge cases like construction zones, emergency vehicles, and pedestrians in unusual poses.

Synthetic data from digital twin simulations addresses these limitations by generating training data at scale without real-world risks.

Synthetic Data and Digital Twins

Because collecting diverse real-world data is expensive and often dangerous (consider training a car to handle a crash), synthetic data generation is a critical tool.

Digital twins create a virtual space that closely replicates the physical world. These simulations use physics-based engines to model how objects move, fall, and react to forces.

Platforms like NVIDIA Omniverse and Isaac Sim create physically accurate simulated environments. There, a model can be trained with reinforcement learning and fail millions of times without breaking any hardware.

NVIDIA also provides a physical AI dataset. It includes 15 terabytes of data, more than 320,000 trajectories for robotics training, plus 1,000 Universal Scene Description assets for simulation.

Spatial Relationships and Physics-Awareness

A physical AI model must understand spatial relationships and the physical nature of objects to act in the real world. So the data must include labels for depth, orientation, and material properties.

A physics-aware dataset helps the model predict outcomes such as how much a box will slide when pushed before the action is taken. These capabilities let physical AI systems handle complex, unscripted tasks that require more than just simple pattern recognition.
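A physics-aware prediction can be as simple as the sketch below. It assumes the box slides with a constant kinetic friction coefficient μ_k (a material property of the kind the labels above would capture; the value used is hypothetical) after being pushed to an initial speed v0. Friction then decelerates it at μ_k·g, giving a stopping distance of d = v0² / (2·μ_k·g). The second function reaches the same answer by stepping time forward, roughly the way a simulator's physics engine advances a scene.

```python
G = 9.81  # gravitational acceleration, m/s^2


def slide_distance_analytic(v0_mps: float, mu_k: float) -> float:
    """Kinetic friction decelerates the box at mu_k * g, so it stops after
    d = v0^2 / (2 * mu_k * g)."""
    return v0_mps ** 2 / (2.0 * mu_k * G)


def slide_distance_simulated(v0_mps: float, mu_k: float, dt: float = 1e-4) -> float:
    """Same prediction by stepping time forward with semi-implicit Euler."""
    v, x = v0_mps, 0.0
    decel = mu_k * G
    while v > 0.0:
        v = max(v - decel * dt, 0.0)  # friction slows the box, never reverses it
        x += v * dt
    return x


# Hypothetical push: the box leaves the gripper at 1 m/s on a surface with mu_k = 0.3.
predicted = slide_distance_analytic(1.0, 0.3)  # about 0.17 m
```

The closed form is exact only under these idealized assumptions; the stepped version is what generalizes once collisions, slopes, or varying friction enter the picture.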

The Role of Data Annotation in Physical AI

The utility of a dataset for physical AI is determined by the quality of its labels. The data collected must be labeled and formatted so that the AI can learn effectively. Key considerations include:

- Precision and Consistency: Sensor data (images, LiDAR scans, depth maps) need accurate labels. For vision, this might include bounding boxes, segmentation masks, or keypoints on objects. In a physical AI context, this often extends to labeling the 3D position or pose of objects, calibration information, and correlations between modalities (for example, linking an object’s appearance in video to its signature in a radar scan).

- Multimodal Alignment: Physical AI typically uses multiple sensor types at once, so data annotation tools must align these modalities. For instance, a scene might have synchronized camera and LiDAR frames, and a label applied in the camera view must correspond to the correct LiDAR cluster.

- Quality Control: Data annotation errors can lead to failures in the physical AI system. Rigorous QA processes, inter-annotator checks, and automated consistency checks help catch these errors before training.

- Scalability: Physical AI projects often need large volumes of data. Automating parts of the annotation process (using synthetic data with automatically generated labels or semi-automated labeling tools) can speed up development.
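The inter-annotator checks mentioned under quality control can be partly automated. A minimal sketch, assuming both annotators labeled the same objects with shared IDs and axis-aligned boxes in pixel coordinates: compute the intersection over union (IoU) for each pair of boxes and flag low-overlap objects for review. The 0.7 threshold is an assumed value; teams tune it per task.

```python
def iou(a: tuple, b: tuple) -> float:
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))  # overlap width
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))  # overlap height
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def flag_disagreements(labels_a: dict, labels_b: dict, threshold: float = 0.7) -> list:
    """Return IDs of objects both annotators labeled but whose boxes overlap
    less than `threshold`. Objects labeled by only one annotator are ignored here."""
    shared = labels_a.keys() & labels_b.keys()
    return sorted(obj for obj in shared if iou(labels_a[obj], labels_b[obj]) < threshold)


# Hypothetical labels from two annotators for the same frame.
annotator_1 = {"car_1": (0, 0, 10, 10), "ped_7": (20, 20, 30, 40)}
annotator_2 = {"car_1": (1, 0, 11, 10), "ped_7": (25, 20, 35, 40)}
to_review = flag_disagreements(annotator_1, annotator_2)  # ["ped_7"]
```

Objects that only one annotator labeled are a separate kind of disagreement and would need their own check.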

Labeling data for physical AI is challenging because the data is multimodal, and it requires specialized annotation tools that can handle massive datasets efficiently.
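One way to check that a camera label and a LiDAR cluster refer to the same object is to project the LiDAR points into the image and see which ones fall inside the 2D box. The sketch below assumes the points have already been transformed into the camera frame using the extrinsic calibration (z pointing forward), and a distortion-free pinhole camera; the intrinsics (fx, fy, cx, cy) in the example are hypothetical.

```python
from typing import Optional


def project_to_image(point_cam: tuple, fx: float, fy: float,
                     cx: float, cy: float) -> Optional[tuple]:
    """Pinhole projection of a 3D point in the camera frame (z pointing forward)."""
    x, y, z = point_cam
    if z <= 0:
        return None  # behind the camera, so it cannot appear in the image
    return (fx * x / z + cx, fy * y / z + cy)


def points_in_box(points_cam: list, box: tuple, intrinsics: tuple) -> list:
    """Return the LiDAR points whose projection lands inside a 2D box
    (u_min, v_min, u_max, v_max): the cluster a camera label should match."""
    u_min, v_min, u_max, v_max = box
    selected = []
    for p in points_cam:
        uv = project_to_image(p, *intrinsics)
        if uv is not None and u_min <= uv[0] <= u_max and v_min <= uv[1] <= v_max:
            selected.append(p)
    return selected


# Hypothetical intrinsics (fx, fy, cx, cy) for a 640x480 camera, and a few LiDAR points.
intrinsics = (500.0, 500.0, 320.0, 240.0)
lidar_points = [(0.0, 0.0, 10.0), (1.0, 0.0, 10.0), (5.0, 0.0, 10.0), (0.0, 0.0, -5.0)]
car_box = (300, 220, 400, 260)
car_points = points_in_box(lidar_points, car_box, intrinsics)
```

In practice, points inside the box can also belong to background objects behind the target, so annotation tools usually combine this projection with depth or clustering.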

Modern annotation platforms like Unitlab AI support integrated dataset creation and data annotation tailored to physical AI needs.

Unitlab AI's data annotation suite supports image, video, and audio annotation, with multimodal labeling capabilities upcoming. Teams can use Unitlab AI to label objects in camera frames and manage quality control in one place.

Failure Modes and Technical Challenges in Physical AI

Operating in the physical world introduces challenges that do not exist in digital environments, including:

- Physics Constraints: Physical systems are limited by gravity, friction, and wear-and-tear. An AI model might plan a move that the hardware physically cannot execute.

- The Sim-to-Real Gap: Models trained solely in a simulated environment often fail in the real world due to unpredictable lighting or surface textures that were missing in simulation.

- Data Collection Costs: Moving a physical robot to collect data is slower and more expensive than scraping data from the web.

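- Latency and Real-Time Feedback: Even small delays in decision-making matter. At highway speed (about 30 m/s), 100 ms of latency means the vehicle travels roughly 3 m before it starts to react, which can turn a near miss into a collision.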
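A back-of-the-envelope sketch shows how latency feeds into stopping distance: the vehicle keeps moving at full speed until the system reacts, then brakes. The 7 m/s² deceleration is an assumed round figure for hard braking on a dry road, not a measured value.

```python
def stopping_distance_m(speed_kmh: float, latency_ms: float,
                        decel_mps2: float = 7.0) -> float:
    """Total distance to stop: travel during the reaction latency plus braking."""
    v = speed_kmh / 3.6                    # km/h -> m/s
    reaction = v * latency_ms / 1000.0     # distance covered before braking starts
    braking = v ** 2 / (2.0 * decel_mps2)  # constant-deceleration braking distance
    return reaction + braking


# At 108 km/h (30 m/s), cutting latency from 200 ms to 10 ms saves about 5.7 m.
extra = stopping_distance_m(108, 200) - stopping_distance_m(108, 10)
```

The braking term is the same in both cases, so the difference comes entirely from how long the system takes to react.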

Physical AI Use Cases

Physical AI is being deployed across several high-impact industries, where the combination of sensing, thinking, and acting delivers immediate ROI. Key use cases include:

Robotics in Warehouses and Factories

Physical AI in robotics increases throughput, reduces errors, and allows machines to handle unstructured, changing environments.

Autonomous Mobile Robots (AMRs) navigate complex warehouse floors, avoiding obstacles and collaborating with humans. They use cameras and LiDAR sensors to plan routes for picking up and placing items.

In manufacturing, vision-guided robot arms adapt their grip and positioning to different parts on an assembly line. For example, a sorting robot might change its grip if a part is slightly misaligned.

Companies like Siemens use adaptive robots that learn from sensor feedback to optimize production tasks. For example, their SIMATIC Robot Pick AI Pro calculates grasp poses in milliseconds for mixed shapes, while other AI-driven robots at the Electronics Factory Erlangen perform delicate "tactile mounting" of tiny electronic components with human-like dexterity.

Autonomous Vehicles

Self-driving cars and trucks rely on physical AI to perceive roads and make real-time driving decisions. These vehicles fuse data from cameras, radar, GPS, and LiDAR to understand their surroundings.

Then, advanced AI models (like the Alpamayo family of open-source models) decide when to brake, accelerate, or steer.

For instance, an autonomous car can use vision-language-action models to interpret a stop sign or predict a pedestrian’s trajectory. The result is vehicles that can navigate highways and city streets safely without human drivers.

Kodiak Robotics, for example, applies physical AI to long-haul trucking, allowing massive semi-trucks to maintain lane safety and respond to road hazards at high speeds across thousands of miles of highway.

Logistics and Supply Chain

Physical AI reduces manual labor, speeds up fulfillment, and can operate 24/7 with minimal human intervention.

In logistics, physical AI automates repetitive tasks such as picking, packing, and sorting. Automated guided vehicles (AGVs) use sensor data to carry items across distribution centers.

Manufacturing and Inspection

Beyond assembly, physical AI improves quality control. Cameras combined with ML inspect parts for defects on production lines.

For instance, an AI vision system can spot a hairline crack or misprinted barcode that a human might miss.

Robots can then take corrective actions, like removing a flawed item from the line. This real-time feedback loop ensures high product quality and reduces waste.

Healthcare and Assistance

Medical robotics is an emerging area of physical AI. Researchers are using reinforcement learning to teach surgical robots delicate procedures (like suturing), with the goal of higher precision and repeatability.

Service robots in hospitals, such as TUG robots, can autonomously deliver medications or assist patients. These systems often work alongside people, highlighting the need for safety and responsive control.

The Future of Physical AI

In the near term (next 1–3 years), we expect major advances in edge AI and standardization. Edge computing hardware (smaller GPUs, neuromorphic chips) will enable even faster on-device inference, making autonomous systems more responsive.

Standard tools and libraries for robotics (like Universal Scene Description for 3D environments) will streamline development.

In industry, more manufacturing plants and warehouses will adopt physical AI for tasks like predictive maintenance and flexible assembly.

Within 3–7 years (medium term), fully autonomous facilities and interconnected, self-optimizing supply chains will likely emerge, supported by new regulatory frameworks for public deployment.

In the long term (beyond 7 years), physical AI could enable self-optimizing infrastructure and deep human-AI collaboration. Human-robot interaction will become more natural. Physical AI agents could work side by side with people, understanding subtle cues and cooperating on complex tasks.

Conclusion

Physical AI moves intelligence from screens into the objects and environments that surround us. By closing the sense-decide-act loop under strong governance, physical AI systems are overcoming the limits of traditional automation.

But the path to deployment requires solving difficult technical challenges, most notably the sim-to-real gap and the need for high-quality, tightly synchronized multimodal datasets.

As these systems become more prevalent in our factories, warehouses, and cities, the role of data management and precise annotation will become more important over time.


FAQs

Q 1. What is Physical AI and how does it differ from traditional AI?

Physical AI integrates artificial intelligence into physical systems, enabling them to interact with the physical world. Traditional AI operates in a purely digital world, processing data and producing digital outputs, while a physical AI model must also account for physical behavior and physical laws. Physical AI drives autonomous systems that perform repetitive tasks or navigate complex environments in the real world.

Q 2. How do physical AI systems perceive the real world?

To build physical AI, engineers use a perception stack that collects real-world sensor data, letting the AI system recognize objects and map spatial relationships in its environment. The system then uses computer vision and machine learning to interpret its surroundings, providing the input needed for real-time decision-making.

Q 3. What role does synthetic data play in training physical AI?

Training physical AI is difficult because real-world data is expensive and slow to collect. Synthetic data generation in a simulated environment lets developers create massive amounts of training data safely. Using synthetic data, an AI model can practice in simulated environments that mirror real-world dynamics before it is deployed to autonomous machines in the field.

Q 4. What is the role of reinforcement learning in autonomous systems?

Reinforcement learning is a key part of how physical AI models learn to act. Autonomous mobile robots and industrial robots learn optimal actions by receiving rewards for successful outcomes, usually over many trials in a simulated environment. This process helps mobile robots learn to navigate and industrial robots develop the fine motor skills needed for manipulation, so both can operate autonomously with minimal human intervention.

Q 5. What are the challenges of physical AI?

The sim-to-real gap is a major hurdle: AI models that excel in a simulated environment can fail when deployed in real-world conditions. Physical AI also requires solving issues like latency on edge devices, varying lighting in physical environments, and the high cost of data collection. Additionally, safety controls and human oversight are critical when AI-powered systems operate near people.

Q 6. What are examples of physical AI?

Real-world applications of physical AI include self-driving cars and trucks and warehouse logistics. Industrial robots use physical AI for quality inspection, while autonomous mobile robots move materials around factories. Physical AI is also powering smart spaces that use video analytics to continuously improve operational efficiency.