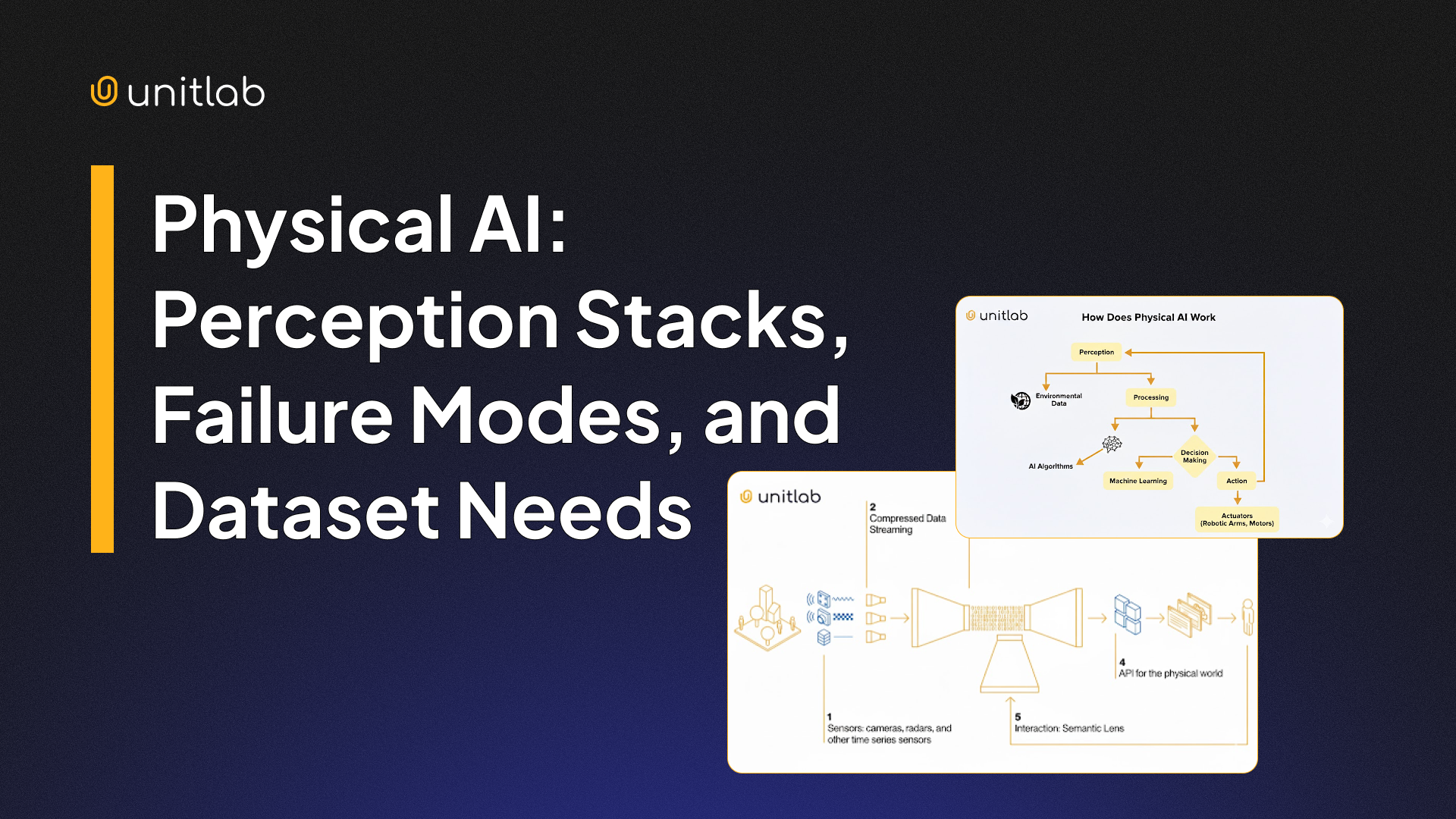

Physical AI: Perception Stacks, Failure Modes, and Dataset Needs

Physical AI refers to AI-powered systems that operate in the real, physical world. These systems integrate sensors like cameras and LiDAR, with machine learning so they can perceive their surroundings and take actions in real time.

![Multimodal AI in Robotics [+ Examples]](/content/images/size/w2000/2026/01/Robotics--12-.png)