As discussed in our last post, we publish extensive educational content and practical tips on our blog. We have written in detail about different image annotation techniques, which are an essential consideration when creating an annotated dataset and selecting a data annotation platform to streamline the data labeling process.

A Comprehensive Guide to Image Annotation Types and Their Applications | Unitlab Annotate

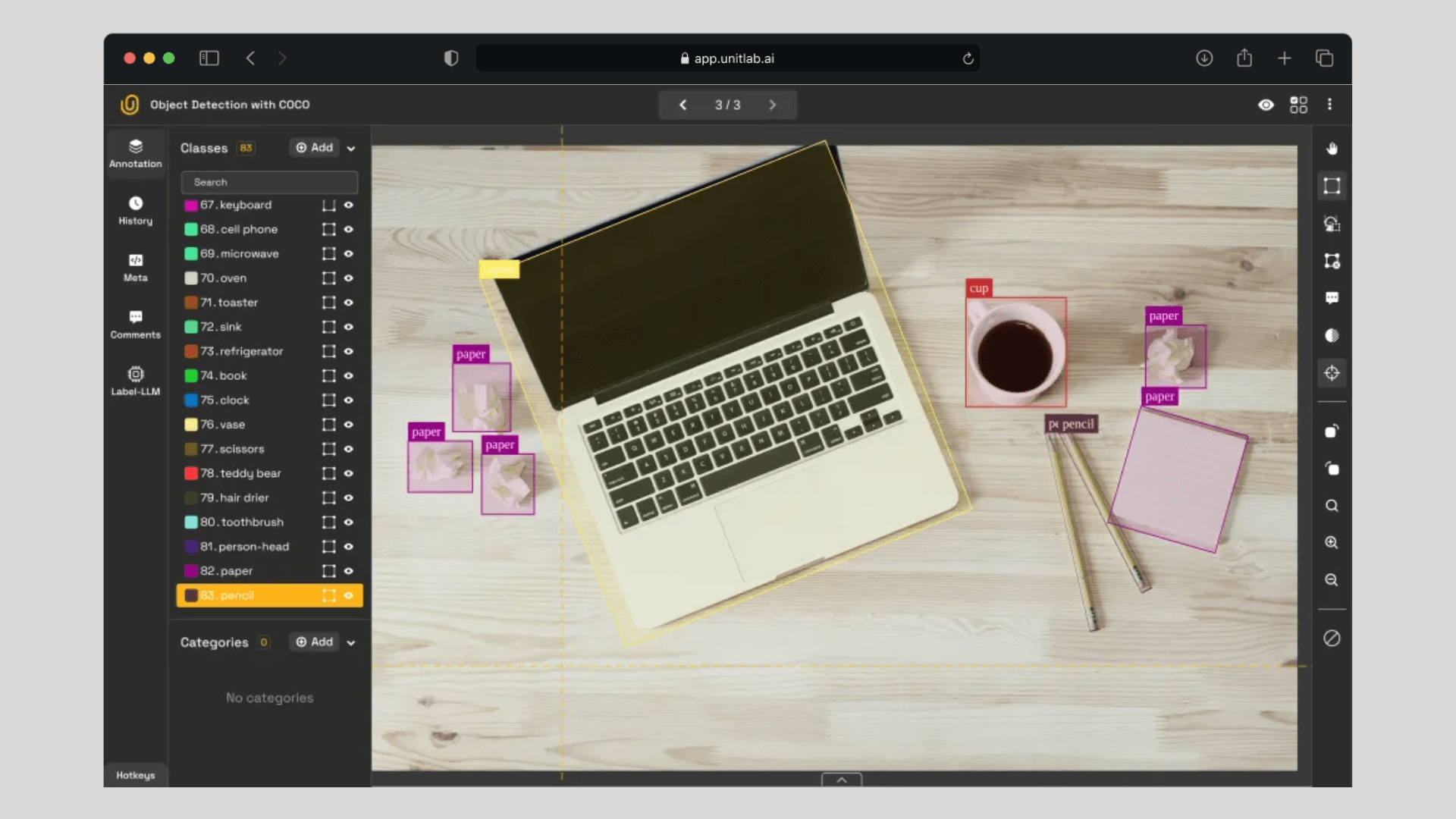

To provide an overview of the most common image data annotation methods, we wrote a post discussing each type. Here, we will delve into bounding boxes, the most common and fundamental form of image labeling, which many people have seen in examples and demo videos.

Bounding boxes have a variety of computer vision applications. We will demonstrate how they work through a sample project, highlighting how an image labeling tool can facilitate the process.

By the end of this post, you will understand:

- What bounding boxes are

- How they operate

- The domains and applications where they are used

- Methods for annotating bounding boxes

- Challenges and best practices for bounding box annotation

What are Bounding Boxes?

A bounding box is essentially a rectangular boundary that fully encloses an object of interest in an image. For instance, if you have an image containing a car and need to mark it, you would draw a rectangle around the car and label it as car or vehicle.

This rectangle is known as a bounding box. When multiple cars or objects appear, you create separate rectangles for each instance, capturing their positions and identifying them accordingly.

Car Auto Detection with Bounding Boxes | Unitlab Annotate

Bounding boxes are straightforward and commonly used in data annotation pipelines. They are also widely employed in publicly available datasets, including COCO and PASCAL VOC.

Each bounding box can include metadata, such as its class name or a confidence score, particularly when using data auto-annotation tools. This process helps record the location of any object of interest, along with its label and other attributes.
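
As a rough illustration, a single annotation could be stored as a small record holding the rectangle, its class name, and an optional confidence score. The class and field names below are hypothetical and are not taken from any particular tool or dataset format.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class BoxAnnotation:
    """One labeled rectangle. All field names are illustrative assumptions."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float
    label: str
    confidence: Optional[float] = None  # usually present only for auto-annotated boxes

    def area(self) -> float:
        # Width times height, clamped at zero for degenerate boxes.
        width = max(0.0, self.x_max - self.x_min)
        height = max(0.0, self.y_max - self.y_min)
        return width * height


# Two cars in the same image get two separate records.
annotations = [
    BoxAnnotation(34, 50, 210, 160, label="car"),
    BoxAnnotation(250, 60, 400, 170, label="car", confidence=0.91),
]
```

A manually drawn box simply leaves `confidence` unset, while an auto-labeled one carries the score reported by the model.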

How Bounding Boxes Work

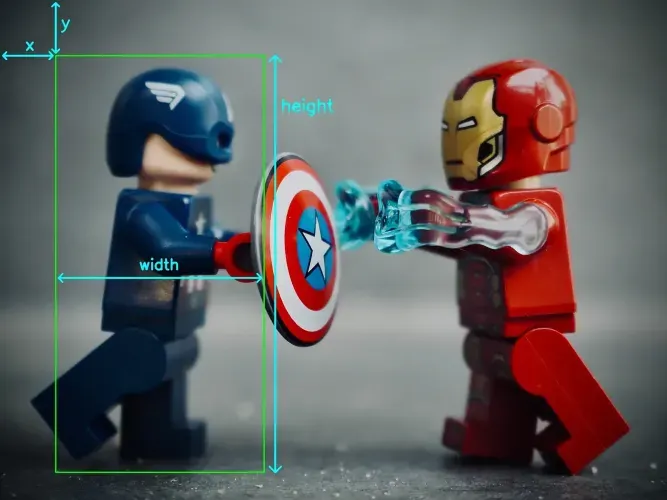

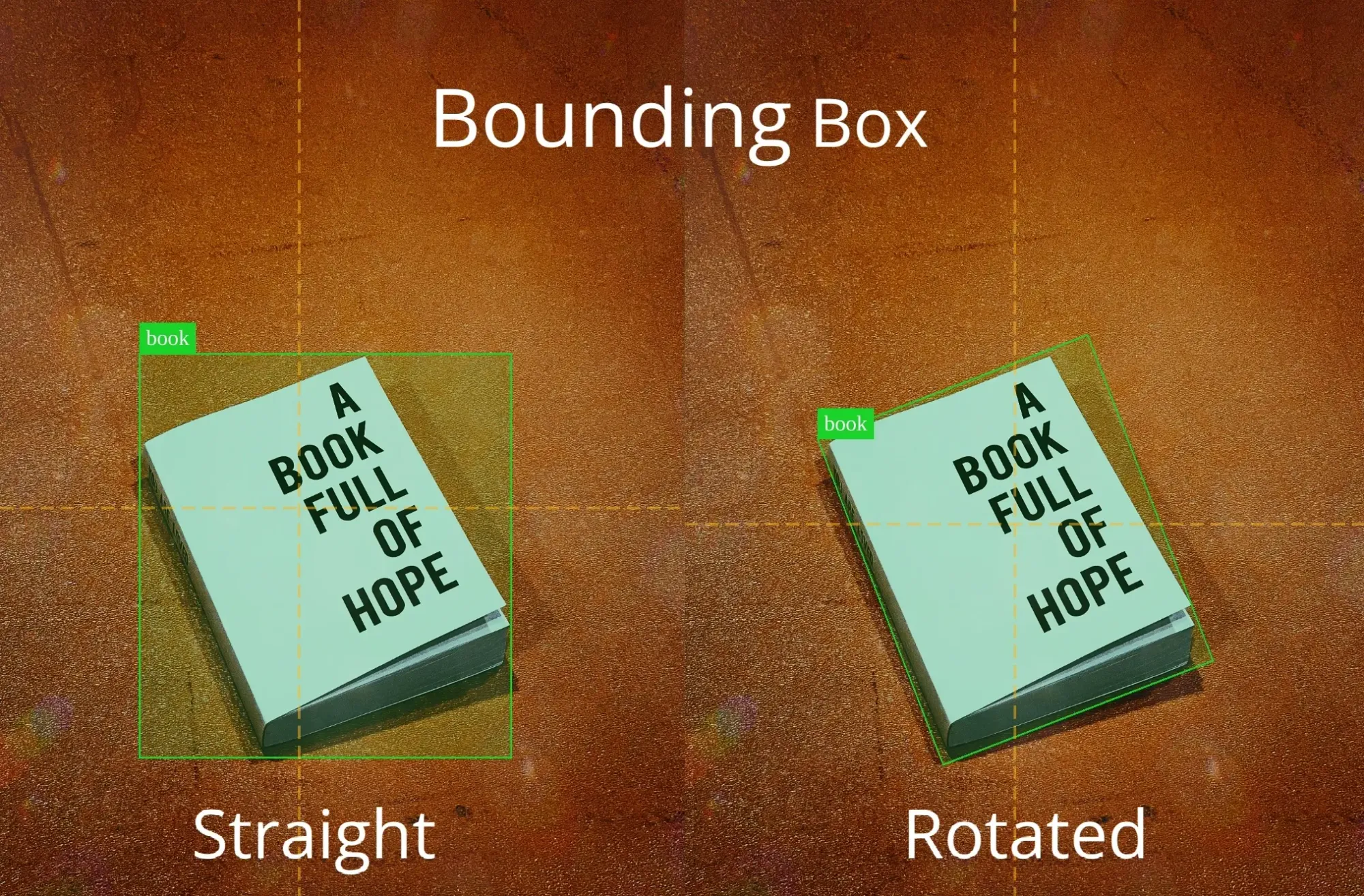

As indicated, bounding boxes involve placing rectangles around objects of interest, either through manual data labeling or image auto labeling tools. Depending on the annotation format, bounding boxes can be defined in multiple ways:

- Using the top-left corner’s coordinates, along with the rectangle’s width and height (COCO).

- Using the top-left corner’s and bottom-right corner’s coordinates (PASCAL VOC).

- Using the rectangle’s center coordinates, along with its width and height, typically normalized by the image dimensions (YOLO).

These methods assume that rectangles are axis-aligned (i.e., not rotated). If a bounding box is rotated, it is commonly described either by the (x, y) coordinates of each of its four corners or by its center, size, and rotation angle. Regardless of the method, these coordinates enable AI/ML models to localize the object accurately.
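
The three axis-aligned conventions describe the same rectangle, so converting between them is simple arithmetic. The sketch below is a minimal illustration: the function names are hypothetical, and it assumes that YOLO-style values are normalized to the range 0 to 1 by the image width and height, as is usual in YOLO label files.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]


def coco_to_voc(box: Box) -> Box:
    """(x_min, y_min, width, height) -> (x_min, y_min, x_max, y_max)."""
    x_min, y_min, width, height = box
    return (x_min, y_min, x_min + width, y_min + height)


def voc_to_coco(box: Box) -> Box:
    """(x_min, y_min, x_max, y_max) -> (x_min, y_min, width, height)."""
    x_min, y_min, x_max, y_max = box
    return (x_min, y_min, x_max - x_min, y_max - y_min)


def voc_to_yolo(box: Box, img_w: int, img_h: int) -> Box:
    """Corner pixels -> normalized (center_x, center_y, width, height)."""
    x_min, y_min, x_max, y_max = box
    return (
        (x_min + x_max) / 2 / img_w,
        (y_min + y_max) / 2 / img_h,
        (x_max - x_min) / img_w,
        (y_max - y_min) / img_h,
    )


def yolo_to_voc(box: Box, img_w: int, img_h: int) -> Box:
    """Normalized (center_x, center_y, width, height) -> corner pixels."""
    cx, cy, width, height = box
    return (
        (cx - width / 2) * img_w,
        (cy - height / 2) * img_h,
        (cx + width / 2) * img_w,
        (cy + height / 2) * img_h,
    )


# The same rectangle in a 400x200 image, written three ways.
coco_box = (100, 50, 200, 100)
voc_box = coco_to_voc(coco_box)            # (100, 50, 300, 150)
yolo_box = voc_to_yolo(voc_box, 400, 200)  # (0.5, 0.5, 0.5, 0.5)
```

Mixing up these conventions is an easy mistake when moving data between tools, so it helps to convert explicitly at the boundary rather than assume a format.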

Domains of Bounding Boxes

Bounding boxes play a vital role in image annotation and appear in a variety of contexts:

- Object Detection: Bounding boxes are the predominant tool for object detection tasks.

- Image Segmentation: They often serve as an initial step for more detailed segmentation work.

- Image Captioning: Bounding boxes can be used to highlight the relevant objects before a caption is generated for the image.

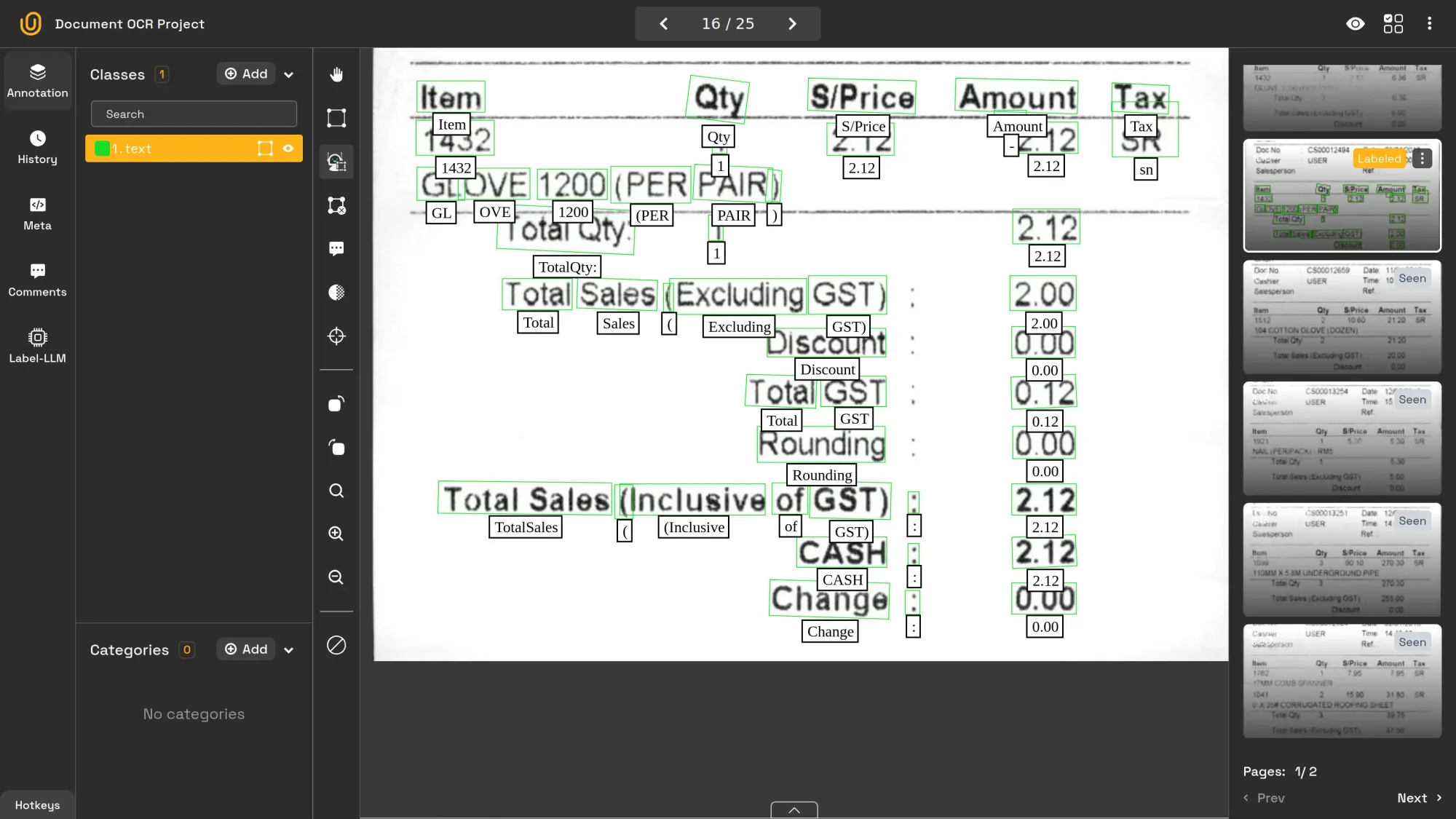

- Image OCR: When converting images to text, bounding boxes first locate text regions before the text is extracted.
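
To make the OCR case concrete, the sketch below cuts a boxed region out of an image so that a later step could run text extraction on just that region. It is only an illustration: the image is a plain nested list of pixel values, the `crop_region` helper is hypothetical, and the box is assumed to use (x_min, y_min, x_max, y_max) pixel coordinates with exclusive maximum edges.

```python
from typing import List, Sequence, Tuple


def crop_region(
    image: Sequence[Sequence[int]], box: Tuple[int, int, int, int]
) -> List[List[int]]:
    """Return the pixels inside (x_min, y_min, x_max, y_max); max edges are exclusive."""
    x_min, y_min, x_max, y_max = box
    height = len(image)
    width = len(image[0]) if height else 0
    # Clamp the box to the image so slightly oversized boxes do not misbehave.
    x_min, x_max = max(0, x_min), min(width, x_max)
    y_min, y_max = max(0, y_min), min(height, y_max)
    return [list(row[x_min:x_max]) for row in image[y_min:y_max]]


# A tiny 6x4 "image" whose pixel value encodes its row and column.
image = [[10 * r + c for c in range(6)] for r in range(4)]
text_region = crop_region(image, (1, 1, 4, 3))
# text_region == [[11, 12, 13], [21, 22, 23]]
```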

Use Cases for Bounding Boxes

Due to their versatility, bounding boxes are applied in a wide range of fields:

- Autonomous Vehicles: Self-driving cars must recognize pedestrians, vehicles, and traffic signs to navigate safely in real time.

- Retail: Computer vision models deployed on cameras can track inventory, shelf organization, and customer behaviors through object detection.

- Surveillance: Street and building security systems monitor objects and individuals for safety and analytical purposes.

- Scanning: Bounding boxes can locate text in images and videos, allowing subsequent processes to handle text extraction.

Many real-world computer vision applications rely on bounding boxes because they are efficient, fast to annotate, and adaptable.

Demo Project

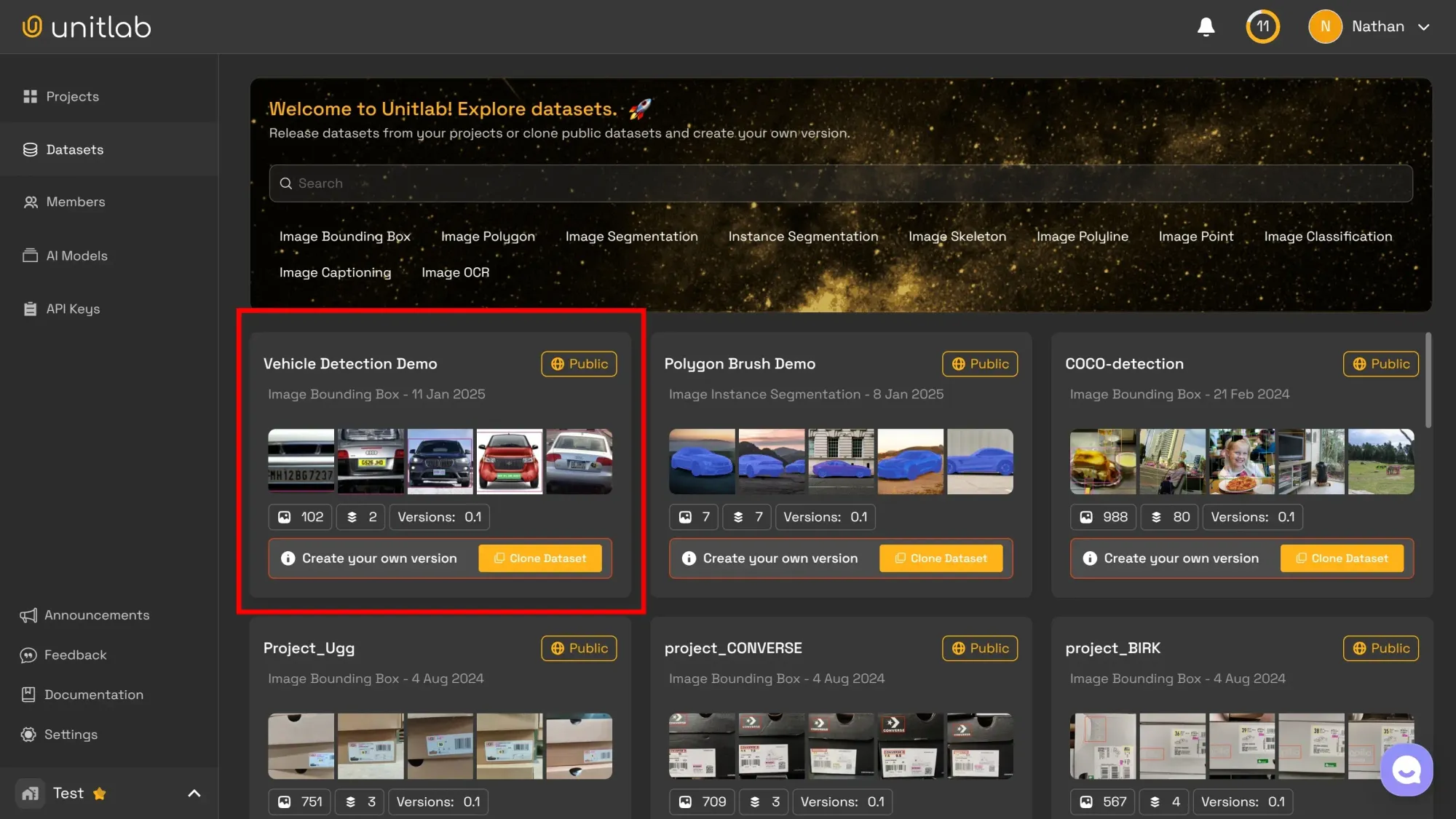

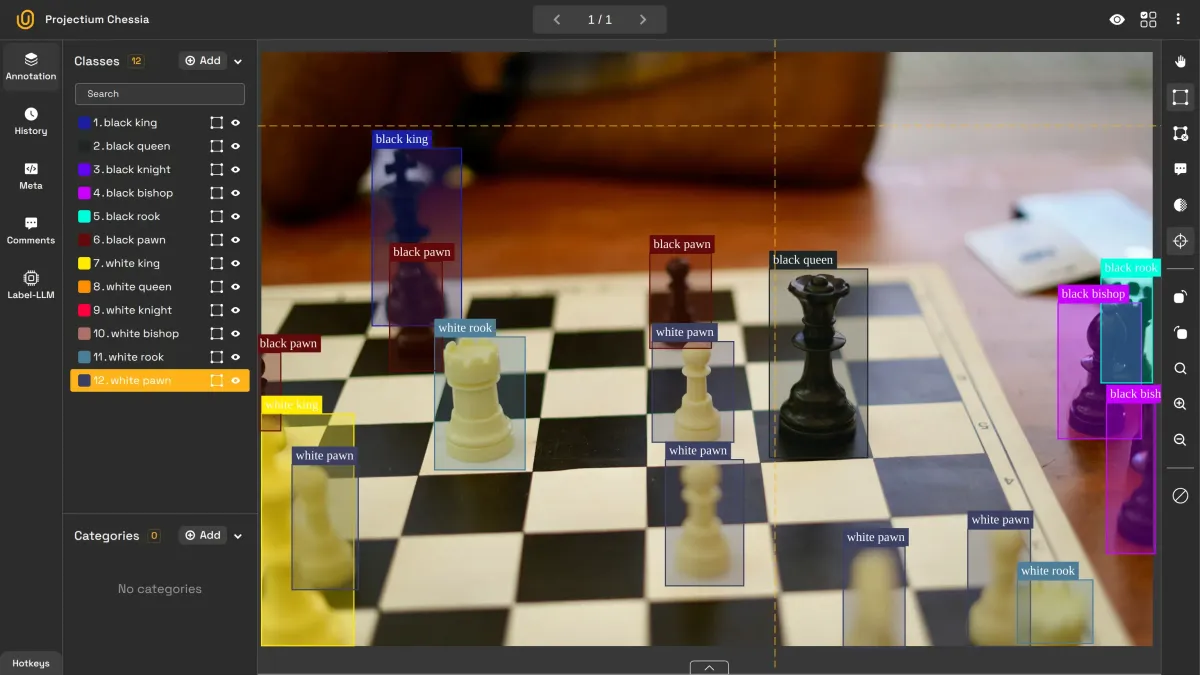

Before we explain bounding boxes in greater detail, let us walk through a sample project to label images with bounding boxes using Unitlab Annotate, a collaborative and AI-powered data annotation solution.

You can set up a free account and follow the steps below. We encourage you to experiment with this demo to gain hands-on experience with bounding boxes.

In our demonstration, we will build a vehicle detection system that identifies vehicles and license plates, then annotates them with bounding boxes.

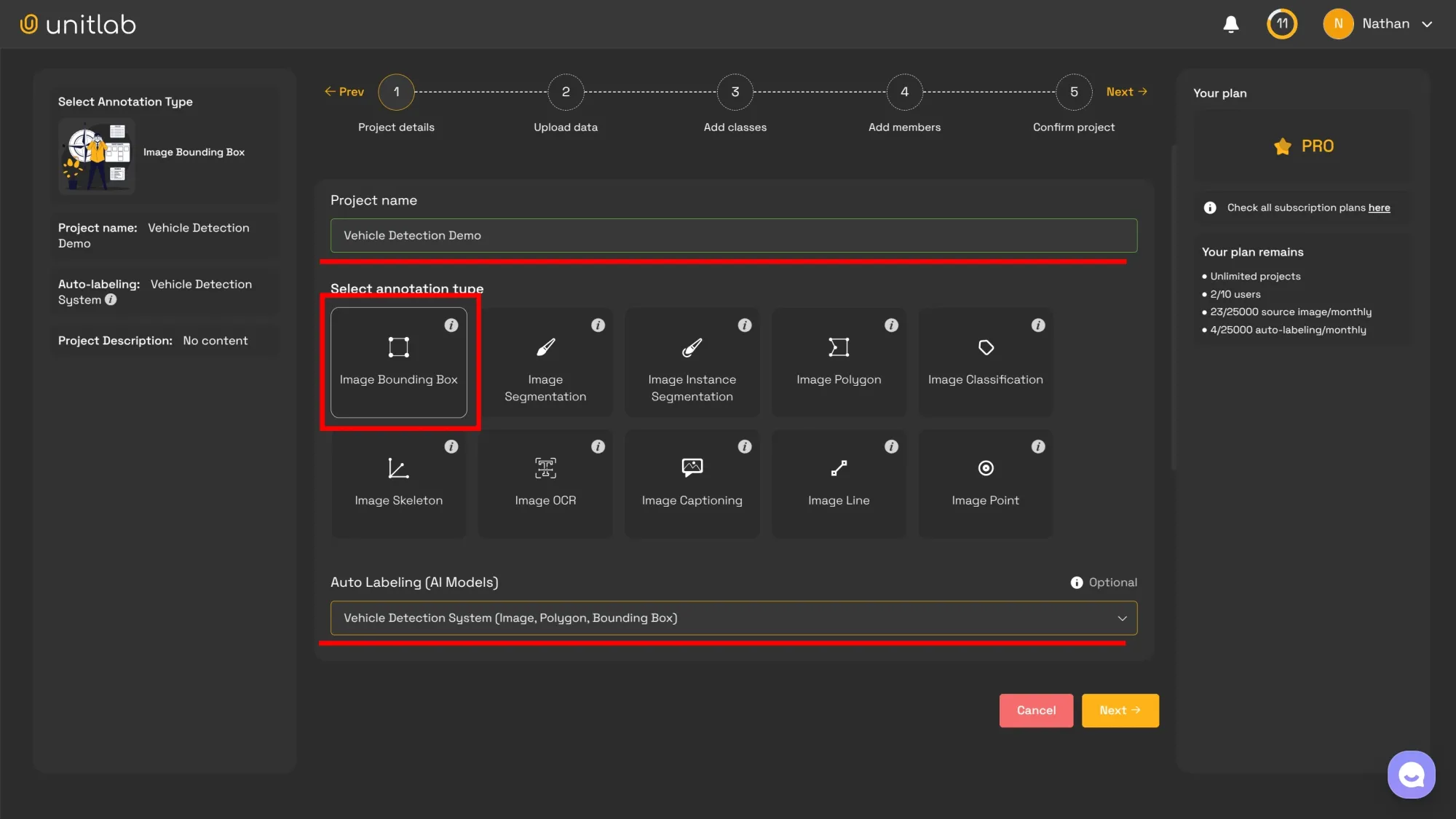

Step 1: Project Setup

After creating your account, start by creating a new project in Unitlab Annotate. Give it a descriptive name, select Bounding Box as the annotation format, and choose Vehicle Detection System as your AI model to auto-label images using advanced auto labeling tools.

You may upload your own vehicle images or download our public dataset to follow along.

For more information on project creation within Unitlab Annotate, please refer to this post:

Project Creation | Unitlab Annotate

Step 2: Data Annotation

With your project created, you can begin annotating images with bounding boxes. Several methods are available:

- Manual Annotation: A human annotator draws bounding boxes, providing high-quality results but at a slower pace.

- Fully Automated Annotation: AI performs the labeling, allowing rapid processing at the potential cost of accuracy.

- Hybrid Human-in-the-Loop: AI initially labels the data, and a human annotator subsequently checks and refines the results, ensuring balanced speed and quality. This approach often achieves consistent, high-quality image labeling suitable for large ML datasets.
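
One possible way to organize the hybrid approach is to accept confident auto-labels and queue the rest for a human annotator. The sketch below assumes each prediction is a dictionary with a `confidence` key and uses an arbitrary threshold of 0.8. Both are illustrative assumptions and do not describe how Unitlab Annotate works internally.

```python
from typing import Dict, List, Tuple


def route_for_review(
    predictions: List[Dict], threshold: float = 0.8
) -> Tuple[List[Dict], List[Dict]]:
    """Split auto-labeled boxes into accepted ones and ones needing human review."""
    accepted: List[Dict] = []
    needs_review: List[Dict] = []
    for prediction in predictions:
        if prediction["confidence"] >= threshold:
            accepted.append(prediction)
        else:
            needs_review.append(prediction)
    return accepted, needs_review


predictions = [
    {"label": "car", "box": (34, 50, 210, 160), "confidence": 0.95},
    {"label": "license_plate", "box": (90, 130, 140, 150), "confidence": 0.55},
    {"label": "car", "box": (250, 60, 400, 170), "confidence": 0.80},
]
accepted, needs_review = route_for_review(predictions)
```

A stricter workflow would send every box to a reviewer and use the score only to prioritize the queue. The right threshold depends on how costly a wrong label is for the project.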

Batch Auto-annotation for Vehicle Detection | Unitlab Annotate

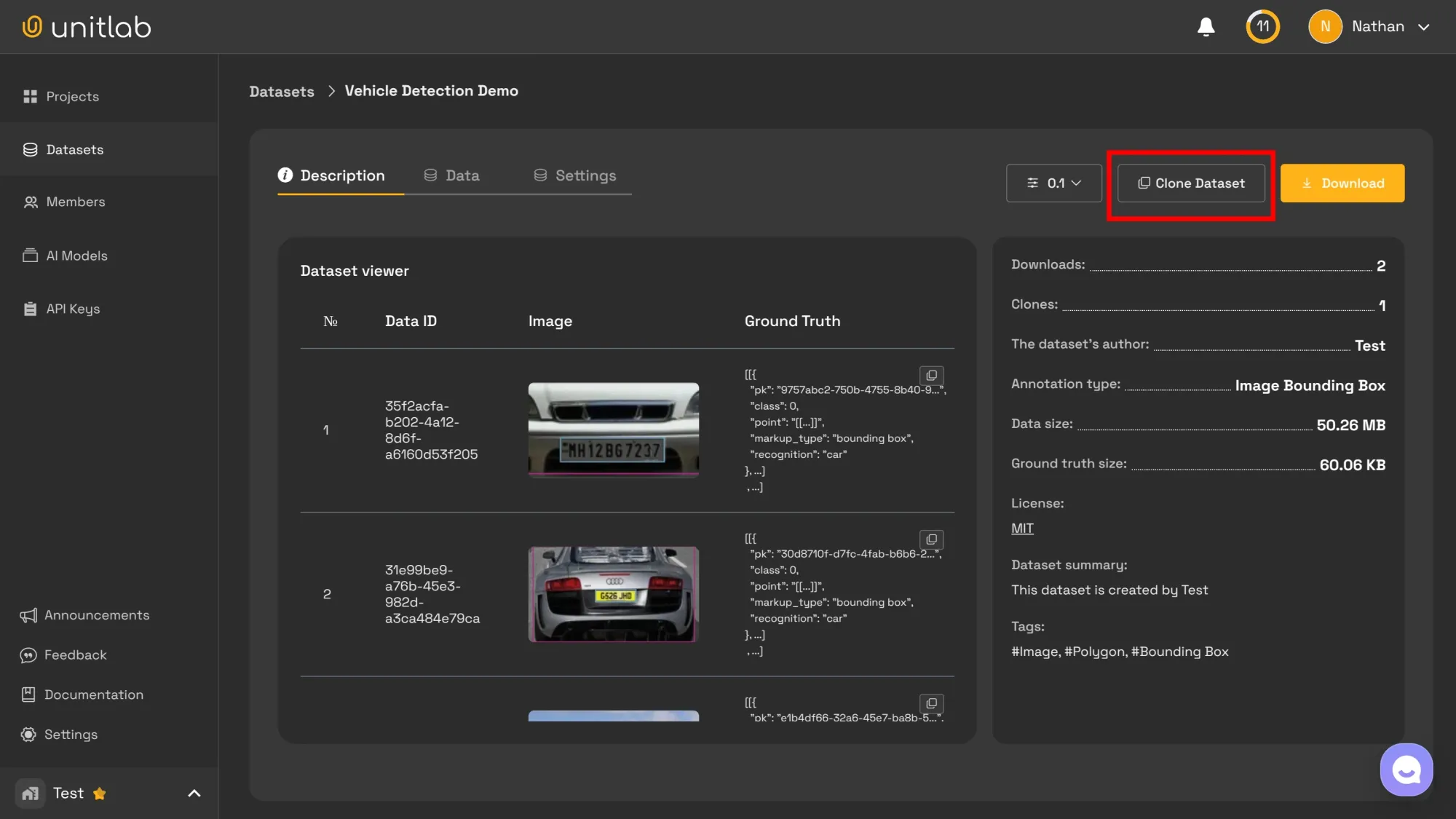

For this tutorial, we will focus on data auto labeling for speed. Note, however, that our public dataset, which you can clone to explore or modify, was labeled with the hybrid method.

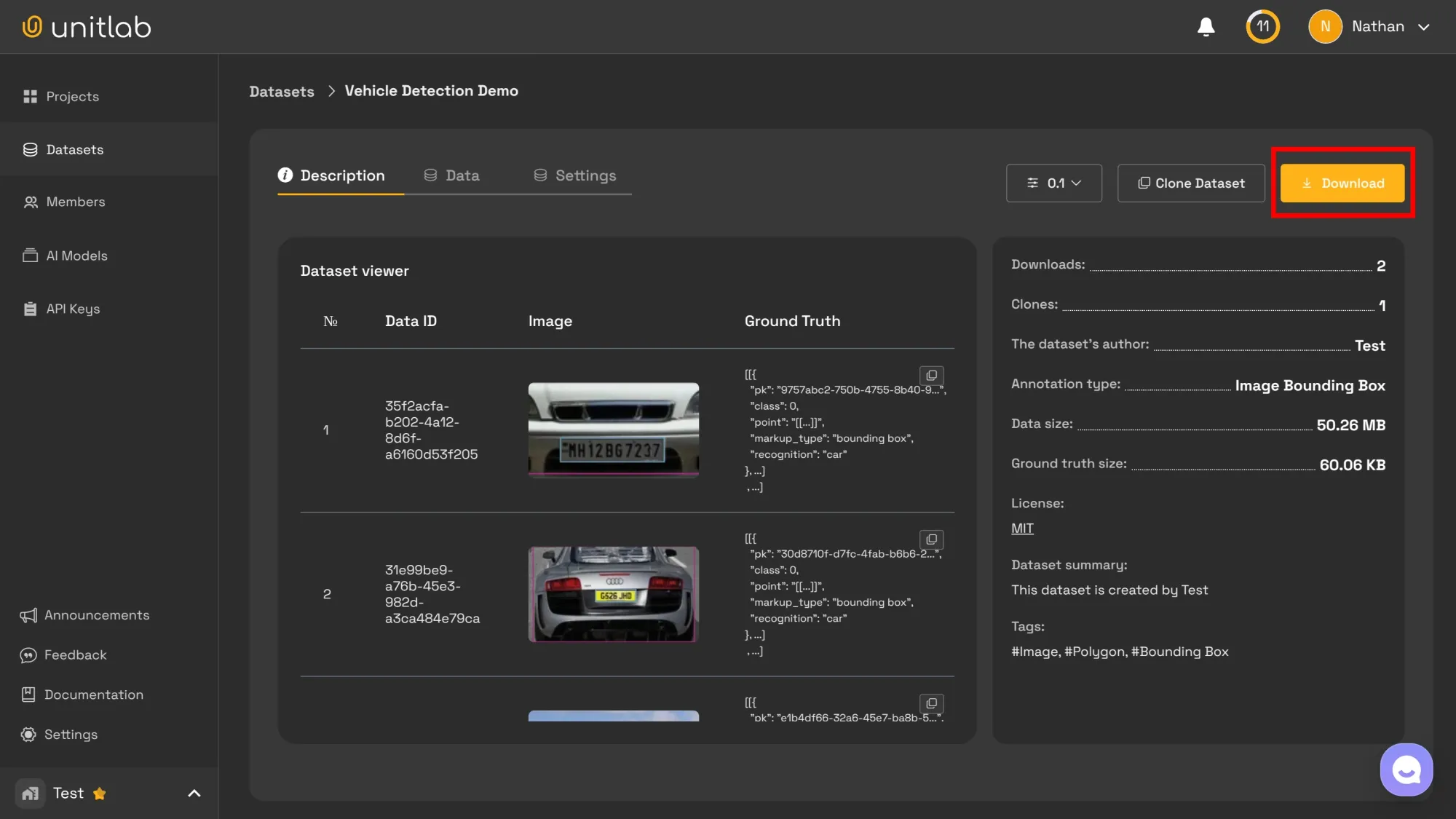

Step 3: Dataset Release

Once all images have been annotated, you can publish an initial version (0.1) of your dataset to train and evaluate AI models.

Proper dataset management and dataset version control are essential for keeping track of changes and managing your data effectively as your project evolves. Releasing versioned datasets ensures transparency when working with AI datasets or ML datasets in production.

As you collect and label additional data, you can release subsequent versions to improve and refine your models over time. This approach enables AI dataset management strategies that adapt and evolve alongside project requirements.
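
As a minimal sketch of what a versioned release might record, the example below builds a small manifest containing the version string, the number of annotations, and a content hash. The manifest layout and the `build_release` helper are assumptions made for illustration, not a description of how any specific platform stores its releases.

```python
import hashlib
import json
from typing import Dict, List


def build_release(version: str, annotations: List[Dict]) -> Dict:
    """Summarize a dataset release so that later changes are easy to detect."""
    # Sorting dictionary keys makes the serialization deterministic, so the same
    # annotations in the same order always produce the same hash.
    payload = json.dumps(annotations, sort_keys=True).encode("utf-8")
    return {
        "version": version,
        "num_annotations": len(annotations),
        "sha256": hashlib.sha256(payload).hexdigest(),
    }


first_batch = [{"image": "a.jpg", "label": "car", "box": [34, 50, 210, 160]}]
second_batch = first_batch + [
    {"image": "b.jpg", "label": "license_plate", "box": [90, 130, 140, 150]}
]

release_v01 = build_release("0.1", first_batch)
release_v02 = build_release("0.2", second_batch)
```

Comparing the hashes of two releases shows at a glance whether the labels changed between versions, which helps when a trained model needs to be traced back to the exact data it saw.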

Challenges in Bounding Box Annotation

Despite their broad applicability, bounding boxes, like other image annotation types, present certain challenges:

- Overlapping Objects: When objects are close together, accurately defining each bounding box can be difficult.

- Occlusion: Real-world images frequently contain partially visible objects or reflections, which can cause label inconsistencies.

- Subjectivity: Different annotators may interpret an object’s boundaries differently, resulting in variability.

- Precision vs. Speed: High-precision bounding boxes demand more time, which affects large-scale initiatives. When numerous annotators work under time pressure, inconsistencies are more likely.
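
Intersection over Union (IoU) is a standard way to quantify how much two boxes overlap. It can help with several of the challenges above, for example by flagging heavily overlapping neighbors or by measuring how closely two annotators agree on the same object. The sketch below assumes boxes in (x_min, y_min, x_max, y_max) form, and the function name is illustrative.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    # Corners of the overlapping rectangle, if there is one.
    ix_min = max(a[0], b[0])
    iy_min = max(a[1], b[1])
    ix_max = min(a[2], b[2])
    iy_max = min(a[3], b[3])

    # Clamp at zero so disjoint boxes yield no intersection.
    inter_w = max(0.0, ix_max - ix_min)
    inter_h = max(0.0, iy_max - iy_min)
    intersection = inter_w * inter_h

    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


# Two annotators drew the same car slightly differently.
annotator_1 = (0, 0, 10, 10)
annotator_2 = (5, 0, 15, 10)
agreement = iou(annotator_1, annotator_2)  # 50 / 150, roughly 0.33
```

A value of 1.0 means the boxes are identical and 0.0 means they do not overlap at all. Which agreement level counts as acceptable is a project decision.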

As usual, there are no solutions to these challenges; there are only trade-offs. While you may pursue maximum accuracy, this approach can also slow throughput and increase costs. The best strategy depends on the specific goals and resources of your project.

Tips for Effective Bounding Box Annotation

We previously shared 7 tips for accurate labeling, which also apply to bounding boxes:

7 Tips for Accurate Image Labeling

- Label Objects in Their Entirety: Overlapping objects should be labeled in their entirety to train models to detect them accurately, even when partially obscured.

- Label Occluded Objects: An object is occluded if it is partially blocked or kept out of view. Treat partially blocked objects as if they are fully visible to maintain consistency.

- Set Clear Guidelines: While human annotators excel at interpreting context, too much subjectivity undermines consistency. Comprehensive guidelines keep labeling precise and produce higher-quality data labeling results.

- Leverage Auto-Annotation: A balanced approach involves automated labeling followed by human review. This human-in-the-loop process accelerates image annotation while preserving quality and consistency in large-scale data labeling projects.
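
Some guideline rules can be checked automatically before a human reviews the labels. The sketch below shows a few such checks. The specific rules (an allowed label set, boxes inside the image, a minimum side length of 4 pixels) and the `validate_box` helper are illustrative assumptions, and real guidelines will differ between projects.

```python
from typing import List, Set, Tuple


def validate_box(
    box: Tuple[float, float, float, float],
    label: str,
    img_w: int,
    img_h: int,
    allowed_labels: Set[str],
    min_size: float = 4,
) -> List[str]:
    """Return a list of guideline violations; an empty list means the box passes."""
    x_min, y_min, x_max, y_max = box
    problems: List[str] = []
    if label not in allowed_labels:
        problems.append(f"unknown label: {label}")
    if x_min < 0 or y_min < 0 or x_max > img_w or y_max > img_h:
        problems.append("box extends outside the image")
    if x_max - x_min < min_size or y_max - y_min < min_size:
        problems.append("box is smaller than the minimum size")
    return problems


labels = {"car", "license_plate"}
ok = validate_box((10, 10, 50, 40), "car", 100, 80, labels)
bad = validate_box((-5, 10, 50, 40), "truck", 100, 80, labels)
```

Here `ok` is an empty list, while `bad` reports both the unknown label and the out-of-bounds coordinates. Checks like these catch mechanical errors cheaply and leave the judgment calls to the reviewer.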

While bounding boxes come with specific challenges, they remain among the most robust and widely used annotation tools. Ultimately, selecting the best approach depends on your project requirements.

Conclusion

Bounding boxes represent the most common form of image annotation. They are quick to create and easy to interpret, with simple rectangular boundaries defined by coordinates and associated labels. Furthermore, bounding boxes provide a foundation for more advanced tasks, such as image segmentation, captioning, and OCR.

Their versatility makes them ideal for many real-world applications, including autonomous vehicles, inventory management, and scanning workflows. We encourage you to try our demonstration project to experience bounding box annotation firsthand, explore auto labeling tools, and learn how to produce high-quality data annotation.

Nevertheless, bounding boxes are not a universal solution. They have limitations and may not be appropriate for every scenario. As with most decisions in machine learning, context is key. Recognizing potential issues and choosing the best strategies to address them ultimately lies in your hands.

Explore More:

- Intro to Polygon Brush Annotation

- Image Point Annotation: The Ultimate Guide

- What is Computer Vision Anyway?
