Data annotation is often the slowest step in building machine learning pipelines. With the Segment Anything Model (SAM), teams can replace hours of manual tracing with prompt-based labeling. A click or a box yields an object mask for a segmentation dataset, and SAM's automatic mask generator can propose masks for an entire image.

Unitlab Annotate provides several auto-annotation tools, such as Magic Touch (powered by the Segment Anything Model, SAM), Batch Auto-Annotation, and Crop Auto-Annotation. In this blog post, we explore the Magic Touch auto-annotation tool.

About Segment Anything Model (SAM)

The Segment Anything Model (SAM), open-sourced by Meta AI Research (FAIR) in April 2023, has attracted significant interest in the AI community because it generates high-quality object masks from prompts such as points and boxes. The paper also explored free-form text prompts, but that capability was not part of the public release. When prompted with a regular grid of points, SAM can produce masks for essentially every object in an image.
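To produce masks for a whole image without any clicks, the reference `segment_anything` package's `SamAutomaticMaskGenerator` prompts SAM with a regular grid of points (32 × 32 by default) and then filters the resulting masks, partly by a "stability score" (default threshold 0.95, offset 1.0). The pure-Python sketch below reimplements only those two ideas, under our own simplifications: masks are flattened lists of logits rather than tensors, and the real generator's predicted-IoU filtering, image cropping, and non-maximum suppression are left out. The function names are illustrative, not the package's API.

```python
from typing import List, Sequence, Tuple


def build_point_grid(n_per_side: int) -> List[Tuple[float, float]]:
    """Evenly spaced (x, y) point prompts in normalized [0, 1] image coordinates.

    Points sit at the centers of an n x n grid of cells, so none lies on the border.
    """
    offset = 1.0 / (2 * n_per_side)
    step = 1.0 / n_per_side
    coords = [offset + i * step for i in range(n_per_side)]
    return [(x, y) for y in coords for x in coords]


def stability_score(logits: Sequence[float], mask_threshold: float = 0.0,
                    offset: float = 1.0) -> float:
    """IoU between the mask binarized at threshold + offset and at threshold - offset.

    The high-threshold mask is a subset of the low-threshold one, so the IoU is
    |high| / |low|. Values near 1.0 mean the mask barely changes when the cutoff
    moves, i.e. the predicted boundary is confident. Empty masks score 0.0 here.
    """
    high = sum(1 for v in logits if v > mask_threshold + offset)
    low = sum(1 for v in logits if v > mask_threshold - offset)
    return high / low if low else 0.0


def keep_stable(candidates, threshold: float = 0.95) -> list:
    """Keep the ids of (mask_id, flattened_logits) pairs that are stable enough."""
    return [mask_id for mask_id, logits in candidates
            if stability_score(logits) >= threshold]


grid = build_point_grid(32)
print(len(grid), grid[0])  # 1024 (0.015625, 0.015625)
print(stability_score([3.0, 2.5, 0.5, -0.5, -2.0]))  # 0.5
```

Scoring stability by moving the threshold, rather than trusting a single cutoff, discards masks whose boundaries sit in regions where the model is uncertain.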

SAM was trained on SA-1B, a dataset of 11 million images and 1.1 billion masks, and it shows strong zero-shot performance across a range of segmentation tasks.
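As a quick sense of scale, those dataset figures work out to about 100 masks per image on average:

```latex
\frac{1.1 \times 10^{9}\ \text{masks}}{11 \times 10^{6}\ \text{images}}
  = \frac{1.1 \times 10^{9}}{1.1 \times 10^{7}}
  = 100\ \text{masks per image}
```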

The Architecture of SAM

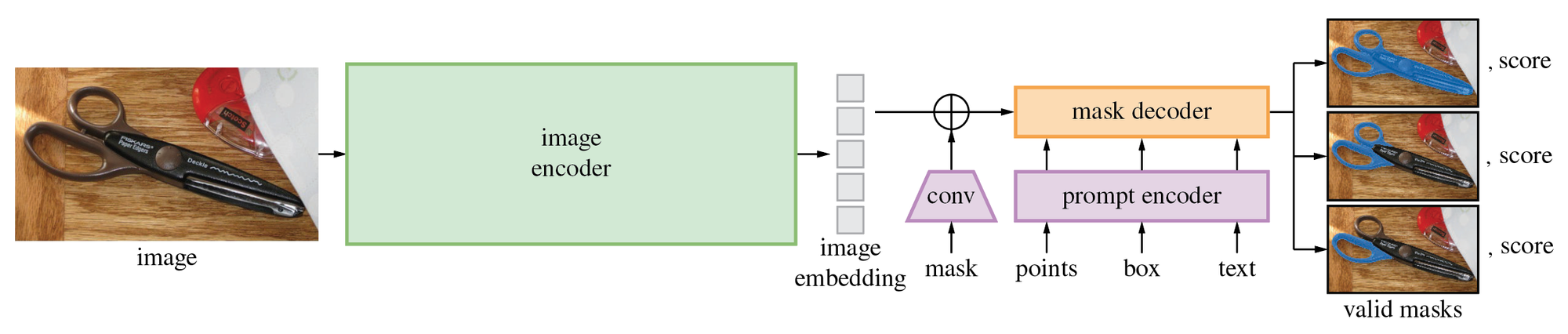

The SAM architecture has three parts: a heavy image encoder (a Vision Transformer, ViT), a prompt encoder for points, boxes, and masks, and a lightweight mask decoder.
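For orientation, here is the tensor-shape bookkeeping of the released SAM models as described in the SAM paper: the input is resized so its longest side is 1024 pixels and padded to a square, the ViT encoder downsamples it 16× into a 256-channel embedding, and the decoder upsamples 4× to low-resolution masks that are later resized to the original image. The `SamShapes` dataclass is our own illustration, not part of any SAM package.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class SamShapes:
    input_side: int = 1024      # longest side after resizing; padded to a square
    patch_size: int = 16        # ViT patch size, i.e. 16x spatial downsampling
    embed_channels: int = 256   # channels of the image embedding
    decoder_upscale: int = 4    # mask decoder upsamples the embedding grid 4x

    def embedding_shape(self) -> Tuple[int, int, int]:
        side = self.input_side // self.patch_size
        return (self.embed_channels, side, side)

    def low_res_mask_shape(self) -> Tuple[int, int]:
        side = (self.input_side // self.patch_size) * self.decoder_upscale
        return (side, side)


shapes = SamShapes()
print(shapes.embedding_shape(), shapes.low_res_mask_shape())  # (256, 64, 64) (256, 256)
```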

The image encoder transforms an image into an image embedding in a single forward pass. This is the expensive step, and it runs only once per image. The lightweight mask decoder then combines that embedding with each user prompt to produce masks, so every new prompt costs only a fast decoder pass (for an ambiguous single-point prompt, it can return several candidate masks). This split between one heavy encoding and many cheap decodings is what makes SAM well suited to interactive data annotation.
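The "encode once, decode per prompt" pattern can be sketched as a small cache. Here `encode` and `decode` are hypothetical stand-ins for SAM's image encoder and mask decoder (any callables with these shapes will do), and the fake versions in the usage example only count calls, so the sketch shows the control flow rather than real inference.

```python
from typing import Callable, Dict, Hashable, List, Tuple

Point = Tuple[float, float]


class PromptSession:
    """Caches one image embedding per image and reuses it for every prompt."""

    def __init__(self, encode: Callable, decode: Callable) -> None:
        self._encode = encode    # hypothetical heavy image encoder
        self._decode = decode    # hypothetical lightweight mask decoder
        self._cache: Dict[Hashable, object] = {}

    def embedding(self, image_id: Hashable, image) -> object:
        # Expensive step: runs at most once per image.
        if image_id not in self._cache:
            self._cache[image_id] = self._encode(image)
        return self._cache[image_id]

    def predict(self, image_id: Hashable, image, points: List[Point], labels: List[int]):
        # Cheap step: runs on every click, reusing the cached embedding.
        return self._decode(self.embedding(image_id, image), points, labels)


calls = {"encode": 0, "decode": 0}


def fake_encode(image):
    calls["encode"] += 1
    return f"emb({image})"


def fake_decode(emb, points, labels):
    calls["decode"] += 1
    return f"mask from {emb} with {len(points)} point(s)"


session = PromptSession(fake_encode, fake_decode)
for n in range(1, 4):  # the annotator clicks three times on the same image
    session.predict("xray-01", "pixels", [(10.0, 20.0)] * n, [1] * n)
print(calls)  # {'encode': 1, 'decode': 3}
```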

The SAM Model in Data Annotation

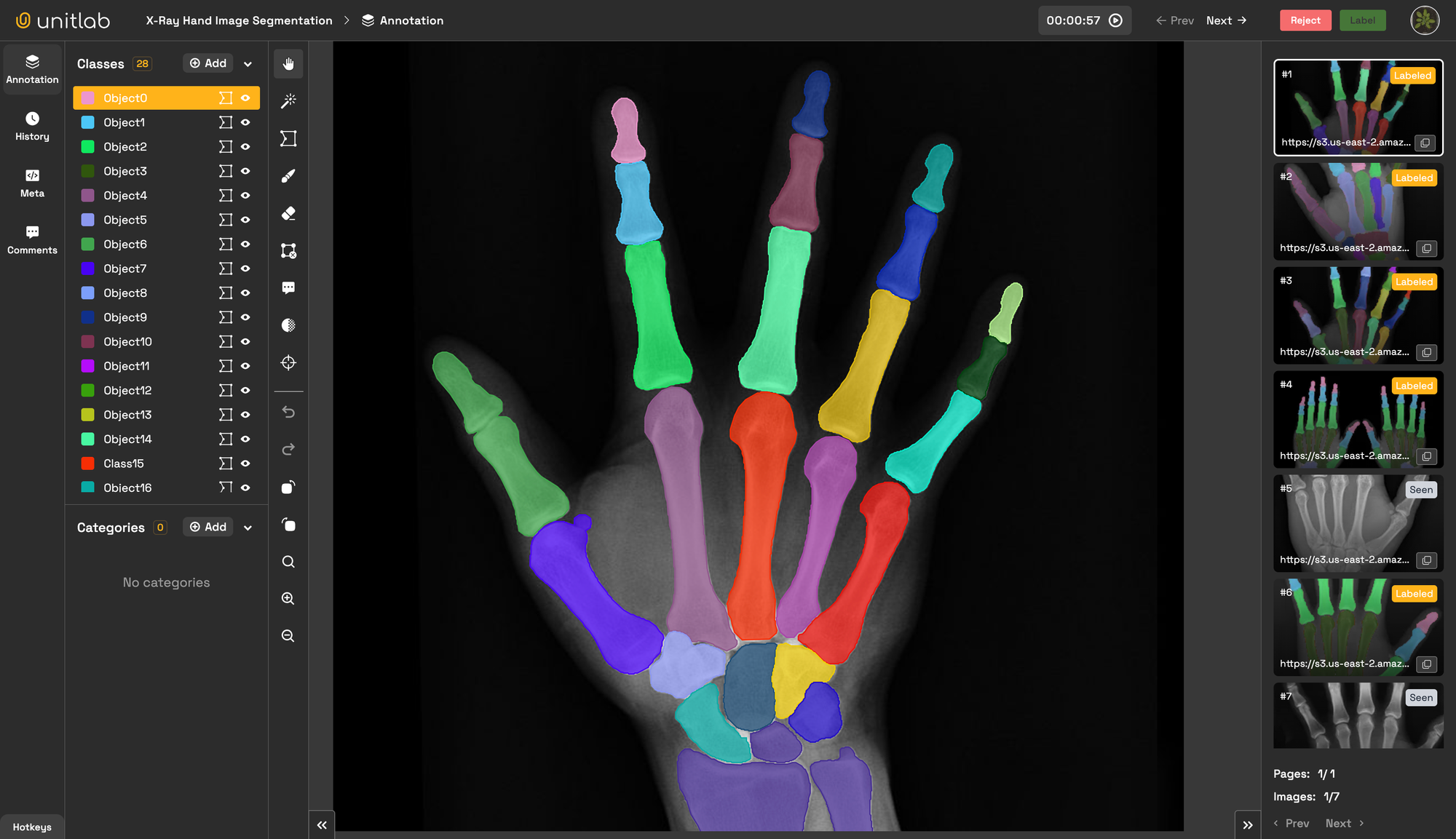

Automatic data annotation is crucial for collecting large-scale datasets quickly and at low cost. SAM lets annotators label data with simple prompts: they click on the object they want to annotate, and the SAM decoder generates a mask (annotation) in milliseconds.
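Each click becomes a point prompt. The sketch below accumulates clicks the way the `segment_anything` package's `SamPredictor.predict` expects them, as far as we know its conventions: `point_coords` holds (x, y) pixel positions and `point_labels` holds 1 for a foreground click (include) and 0 for a background click (exclude). The `ClickPrompt` class is hypothetical, and a real call would first convert both lists to NumPy arrays.

```python
from typing import List, Tuple


class ClickPrompt:
    """Accumulates annotator clicks into SAM-style point prompts."""

    def __init__(self) -> None:
        self.coords: List[Tuple[float, float]] = []
        self.labels: List[int] = []

    def add(self, x: float, y: float, positive: bool = True) -> None:
        # Positive clicks mark the object; negative clicks carve out background.
        self.coords.append((float(x), float(y)))
        self.labels.append(1 if positive else 0)

    def undo(self) -> None:
        # Drop the most recent click, if any.
        if self.coords:
            self.coords.pop()
            self.labels.pop()

    def as_prompt(self) -> dict:
        return {"point_coords": list(self.coords), "point_labels": list(self.labels)}


prompt = ClickPrompt()
prompt.add(120, 340)                  # click on the bone to include it
prompt.add(130, 80, positive=False)   # click on the background to exclude it
print(prompt.as_prompt())
# {'point_coords': [(120.0, 340.0), (130.0, 80.0)], 'point_labels': [1, 0]}
```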

In Unitlab Annotate, the heavy SAM image encoder runs on the server, while the lightweight SAM decoder runs in the annotator's web browser using ONNX Runtime Web. Because each click is decoded locally, annotators can add as many prompts as they need until they are confident the data is annotated correctly. We call the annotator's prompts (clicks) 🪄 Magic Touch in Unitlab Annotate.
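Before clicks reach an exported ONNX decoder, they must be mapped into the resized 1024-pixel frame the encoder saw. The Python sketch below follows our reading of Meta's SAM ONNX example: coordinates are rescaled the same way the image was (longest side to 1024, with rounding), and when no box prompt is given, one padding point at (0, 0) with label -1 is appended. The exported model also takes `image_embeddings`, `mask_input`, `has_mask_input`, and `orig_im_size`, which are omitted here. This is not Unitlab's client code; in a browser, the same arithmetic would run in JavaScript before building `onnxruntime-web` tensors.

```python
from typing import List, Sequence, Tuple


def preprocess_shape(old_h: int, old_w: int, long_side: int = 1024) -> Tuple[int, int]:
    """Size (h, w) of the image after scaling its longest side to `long_side`."""
    scale = long_side / max(old_h, old_w)
    return int(old_h * scale + 0.5), int(old_w * scale + 0.5)


def onnx_point_inputs(points: Sequence[Tuple[float, float]], labels: Sequence[int],
                      orig_h: int, orig_w: int, long_side: int = 1024
                      ) -> Tuple[List[Tuple[float, float]], List[float]]:
    """Rescale click coordinates and append the padding point used without a box."""
    new_h, new_w = preprocess_shape(orig_h, orig_w, long_side)
    coords = [(x * (new_w / orig_w), y * (new_h / orig_h)) for x, y in points]
    coords.append((0.0, 0.0))                        # padding point (no box prompt)
    return coords, [float(l) for l in labels] + [-1.0]


# A 2048 x 1536 (W x H) X-ray with one positive click at (1000, 600).
print(onnx_point_inputs([(1000, 600)], [1], orig_h=1536, orig_w=2048))
# ([(500.0, 300.0), (0.0, 0.0)], [1.0, -1.0])
```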

Use Cases of the Segment Anything Model for Data Annotation

The Segment Anything Model has many applications across different industries:

- Medical Imaging – Speeds up scan annotation, creating reliable training data for healthcare AI.

- Autonomous Driving – Labels pedestrians, vehicles, and signs to enrich segmentation datasets and improve model performance.

- Retail – Segments products on shelves to support checkout-free systems and smarter inventory tracking.

- Agriculture – Processes aerial imagery to label crops and pests, producing training data for yield-prediction models.

- Research – Used in academia through the open-source GitHub release to test algorithms and build benchmark datasets with SAM's automatic mask generator.

Magic Touch - SAM

Magic Touch works like a wizard in the world of auto-annotation: it is powered by the Segment Anything Model (SAM) and can annotate objects of almost any shape, including complex ones, with high precision. It is available in Unitlab Annotate for image segmentation tasks (semantic, instance, and panoptic) and for image polygon annotation tasks.
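The same object masks can feed different segmentation task types. As a simplified illustration (not Unitlab's export format), the sketch below rasterizes instance masks into a semantic map, where each pixel stores only a class id, and an instance map, where each pixel stores which object it belongs to. Roughly speaking, a panoptic label keeps both values per pixel. Masks are plain lists of 0/1 rows, and the names are hypothetical.

```python
from typing import List, Tuple

Mask = List[List[int]]  # 1 inside the object, 0 outside


def build_label_maps(instances: List[Tuple[int, Mask]], height: int, width: int):
    """Rasterize (class_id, mask) pairs into semantic and instance label maps.

    0 means background in both maps; later instances overwrite earlier ones.
    """
    semantic = [[0] * width for _ in range(height)]
    instance = [[0] * width for _ in range(height)]
    for inst_id, (class_id, mask) in enumerate(instances, start=1):
        for r in range(height):
            for c in range(width):
                if mask[r][c]:
                    semantic[r][c] = class_id   # what it is
                    instance[r][c] = inst_id    # which object it is
    return semantic, instance


BONE, JOINT = 1, 2
bone_a = [[1, 1, 0, 0], [0, 0, 0, 0]]
bone_b = [[0, 0, 1, 0], [0, 0, 1, 0]]
joint = [[0, 0, 0, 0], [0, 0, 0, 1]]
semantic, instance = build_label_maps([(BONE, bone_a), (BONE, bone_b), (JOINT, joint)], 2, 4)
print(semantic)  # [[1, 1, 1, 0], [0, 0, 1, 2]]
print(instance)  # [[1, 1, 2, 0], [0, 0, 2, 3]]
```

The semantic map cannot tell the two bones apart, while the instance map can, which is why the same clicks can serve either task type.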

Key Features

- Precise Annotation: Click on the object you want to annotate, and Magic Touch generates the mask for you; add more clicks to refine it.

- Flexibility: You can annotate any data, any object, regardless of type and complexity.
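A single click is often ambiguous: does it mean one finger bone or the whole hand? SAM's decoder can therefore return several candidate masks, each with a predicted-IoU score. The `segment_anything` documentation recommends multimask output for such ambiguous single-point prompts. The sketch below shows one simple policy under that assumption: request several candidates for one click, a single mask once more clicks disambiguate the object, and keep the highest-scoring candidate. Strings stand in for real mask arrays, and both function names are hypothetical.

```python
from typing import Sequence, Tuple, TypeVar

M = TypeVar("M")


def use_multimask(num_clicks: int) -> bool:
    # One click is ambiguous, so ask the decoder for several candidates.
    return num_clicks <= 1


def choose_mask(masks: Sequence[M], scores: Sequence[float]) -> Tuple[M, float]:
    """Return the candidate with the highest predicted-IoU score."""
    if not masks or len(masks) != len(scores):
        raise ValueError("expected one predicted-IoU score per candidate mask")
    best = max(range(len(scores)), key=scores.__getitem__)
    return masks[best], scores[best]


print(use_multimask(1), use_multimask(3))  # True False
print(choose_mask(["finger", "hand", "hand+wrist"], [0.71, 0.93, 0.88]))
# ('hand', 0.93)
```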

How to Use Magic Touch

To use the 🪄 Magic Touch auto-annotation tool, sign up for Unitlab Annotate, create a workspace, and then create a project and upload your data. You can then start annotating.

Let's View a Demo

Learn how to annotate data with the Magic Touch tool in Unitlab Annotate in the demo video below.

Unitlab Annotate: Magic-Touch - Auto Annotate Tool

In this example, we use a hand X-ray image to showcase the Magic Touch tool in Unitlab Annotate. The goal is to show how well SAM handles the complex structures and fine details found in medical X-rays.

Conclusion

SAM is a remarkable model that significantly advances the automation of data annotation, helping annotators save time and work more efficiently. Unitlab Annotate has integrated SAM as Magic Touch and makes it available for free to all Unitlab Annotate users. Start your data annotation journey with Unitlab Annotate today.

Unitlab is a collaborative and AI-powered data annotation platform that offers on-premises solutions and integrated labeling services. It automatically collects raw data and enables collaboration with human annotators to produce highly accurate labels for machine learning models.