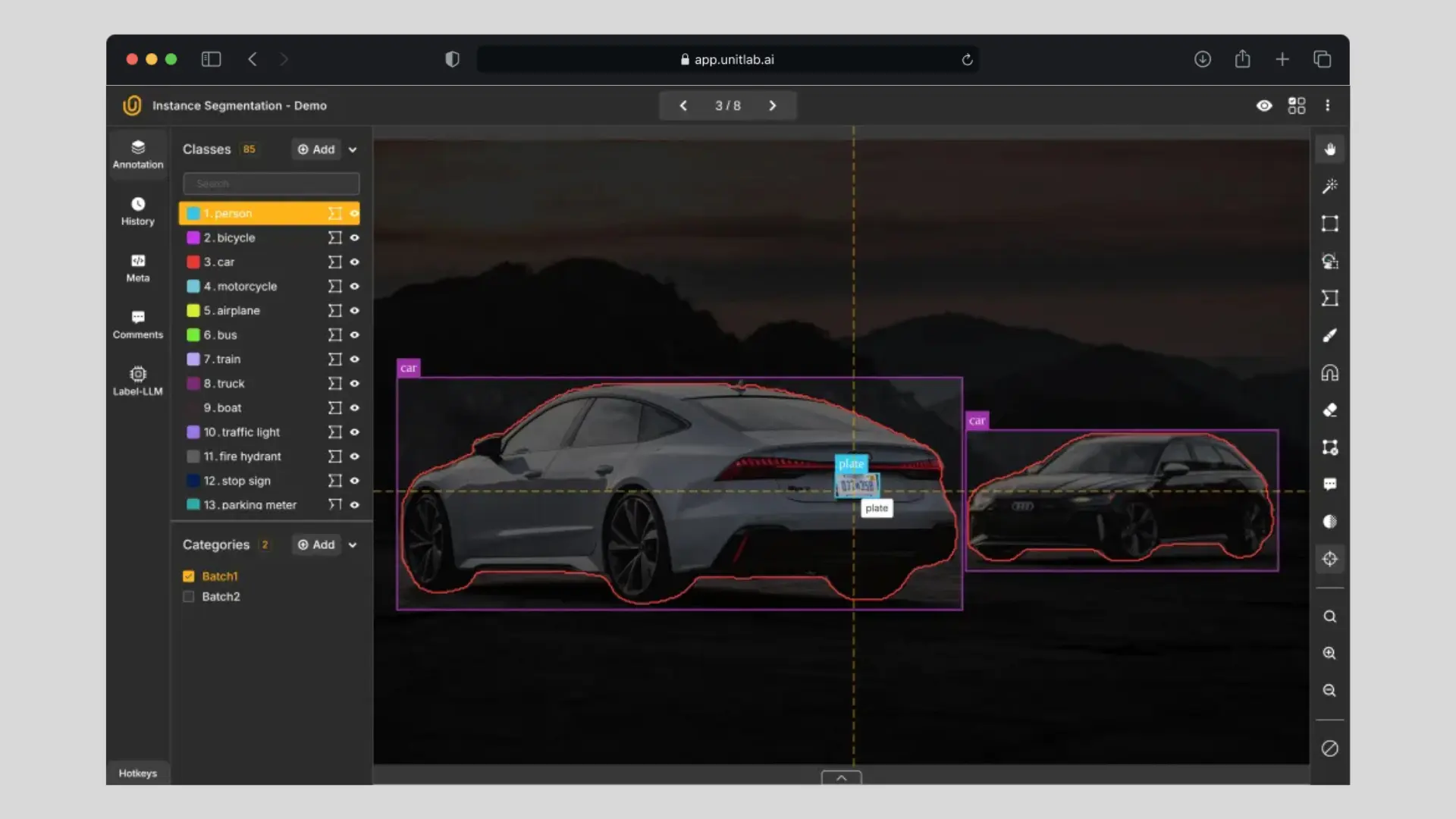

While annotating your source data, you can (and should) use AI-powered tools to speed up the labeling process. Most platforms offer built-in foundational models and let you integrate your own (BYO) models. With these, you can label your data much faster than doing it manually—and then review and fix the annotations for better accuracy and precision. This hybrid setup, known as “human-in-the-loop,” gives you the best of both worlds.

Hybrid Data Annotation | Unitlab Annotate

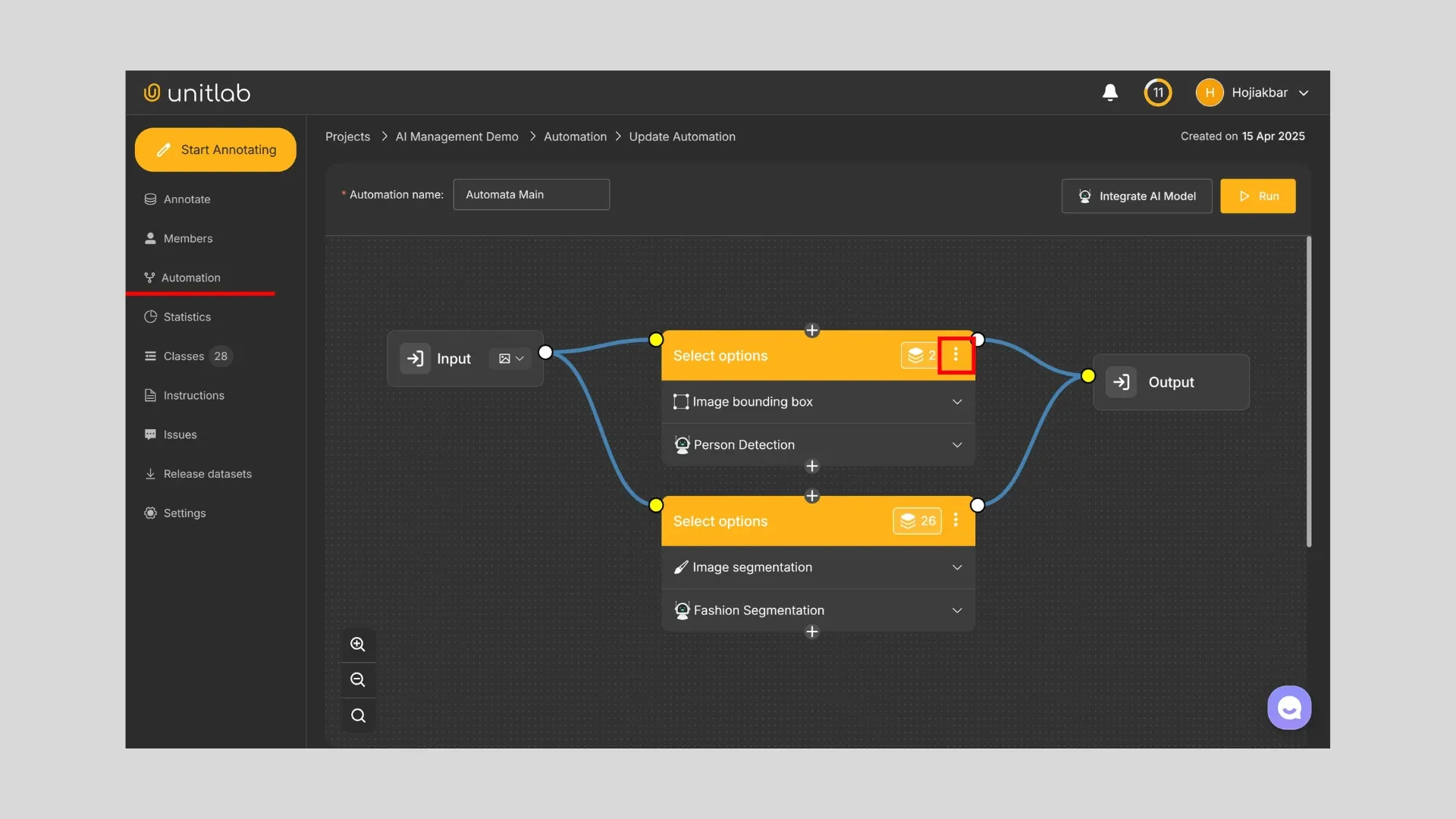

We at Unitlab AI recently released a powerful new feature that takes this further: Automation Workflow. Previously, you could use only one AI model per project. Now you can chain several models into a single workflow. That's a big deal, because real-world datasets often need more than one type of model to capture their full complexity.

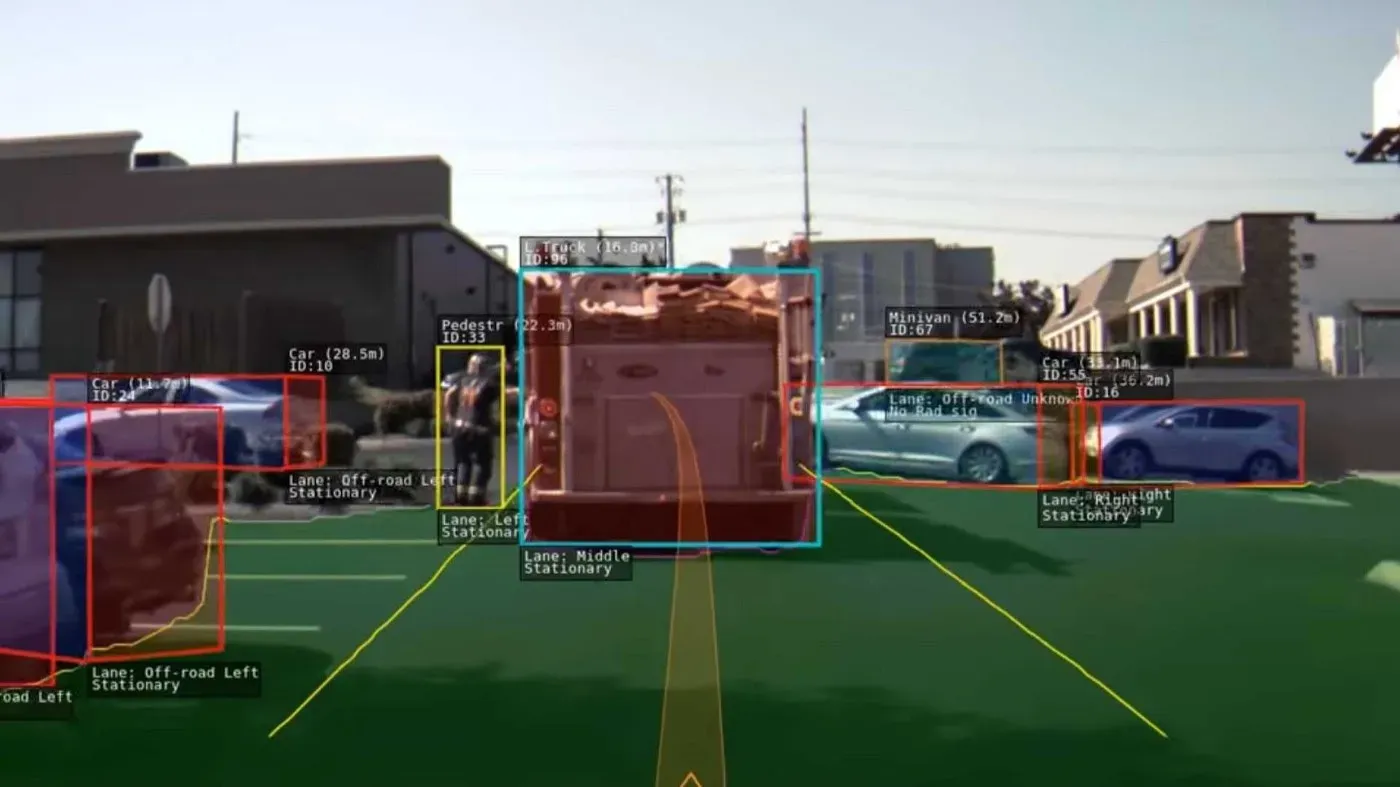

Take, for example, a computer vision system for self-driving cars. It might start with object detection using bounding boxes, but that alone isn't enough for real-world conditions. You'll likely also need semantic segmentation for roads and obstacles, polygon annotation for pedestrians and vehicles, and OCR to read the text on street signs. A practical, production-ready model needs this full range of annotations.

That’s where Unitlab Automation becomes incredibly useful. You can define the types of image annotations you need and assign the right foundational or custom models to each. And you’re not limited to just one workflow—you can create as many as your project requires.
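To make the idea of a multi-model workflow concrete, here is a minimal conceptual sketch. It is not Unitlab's API: the `Annotation` and `Workflow` classes and the stub models (`detect_cars`, `segment_road`, `read_signs`) are hypothetical, and it assumes a workflow simply runs each assigned model on the same image and merges the results.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Annotation:
    """Hypothetical annotation record (not Unitlab's data model)."""
    kind: str     # e.g. "bbox", "segmentation", "polygon", "ocr"
    label: str    # class name
    data: object  # geometry, mask, or recognized text, depending on kind


# A "model" is any callable that takes an image and returns annotations.
Model = Callable[[object], List[Annotation]]


@dataclass
class Workflow:
    """Hypothetical workflow: an ordered list of models applied to each image."""
    name: str
    steps: List[Model] = field(default_factory=list)

    def add_step(self, model: Model) -> "Workflow":
        self.steps.append(model)
        return self  # allow chaining: wf.add_step(a).add_step(b)

    def run(self, image: object) -> List[Annotation]:
        # Run every model on the same image and merge their outputs.
        results: List[Annotation] = []
        for model in self.steps:
            results.extend(model(image))
        return results


# Stub models standing in for a detector, a segmenter, and an OCR model.
def detect_cars(image: object) -> List[Annotation]:
    return [Annotation("bbox", "car", (10, 20, 110, 80))]


def segment_road(image: object) -> List[Annotation]:
    return [Annotation("segmentation", "road", "road-mask")]


def read_signs(image: object) -> List[Annotation]:
    return [Annotation("ocr", "street_sign", "STOP")]


driving = Workflow("self-driving").add_step(detect_cars).add_step(segment_road).add_step(read_signs)
annotations = driving.run(image=None)
print([(a.kind, a.label) for a in annotations])
# → [('bbox', 'car'), ('segmentation', 'road'), ('ocr', 'street_sign')]
```

Because each workflow is just a named list of models, keeping several of them (one per annotation scheme) is as simple as storing multiple `Workflow` objects.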

Then, inside Unitlab Annotate, run your selected workflow with Crop Auto-annotation and Batch Auto-annotation to label your dataset.
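The difference between the two modes can be sketched as follows, assuming crop auto-annotation runs a model on a user-selected region and batch auto-annotation runs it over many images. The function names and the `predict_on_crop` / `predict_image` interfaces are hypothetical, for illustration only; the key detail in the crop case is shifting crop-relative boxes back into full-image coordinates.

```python
from typing import Callable, Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def crop_auto_annotate(crop: Box, predict_on_crop: Callable[[Box], List[Box]]) -> List[Box]:
    """Run a model on one selected region and map its boxes back to full-image coordinates.

    `predict_on_crop` is a hypothetical model interface that returns boxes
    relative to the crop's top-left corner.
    """
    x0, y0 = crop[0], crop[1]
    return [(bx0 + x0, by0 + y0, bx1 + x0, by1 + y0)
            for bx0, by0, bx1, by1 in predict_on_crop(crop)]


def batch_auto_annotate(image_ids: List[str],
                        predict_image: Callable[[str], List[Box]]) -> Dict[str, List[Box]]:
    """Run the same model over every image in a batch, keyed by image ID."""
    return {image_id: predict_image(image_id) for image_id in image_ids}


# Usage with stand-in models.
crop_boxes = crop_auto_annotate((100, 200, 300, 400), lambda crop: [(5, 5, 25, 40)])
print(crop_boxes)  # → [(105, 205, 125, 240)]

batch = batch_auto_annotate(["a.jpg", "b.jpg"], lambda image_id: [(0, 0, 10, 10)])
print(sorted(batch))  # → ['a.jpg', 'b.jpg']
```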

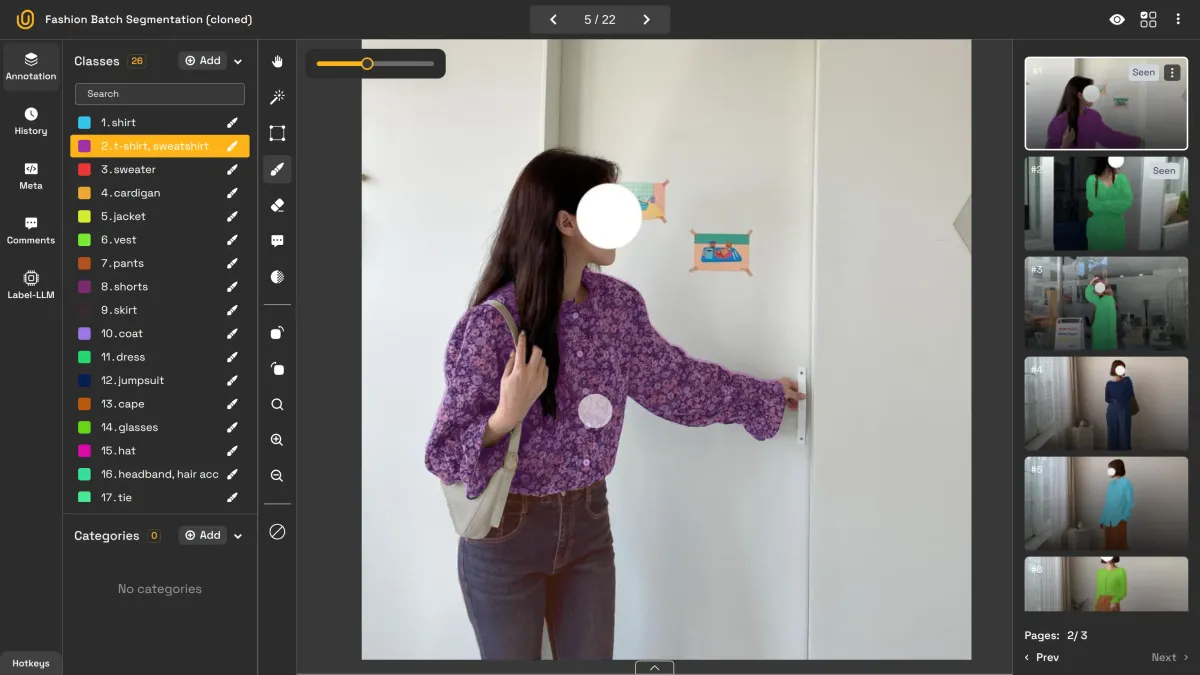

Crop Fashion Auto-annotation | Unitlab Annotate

AI Model Management

Consider this Rule of Defaults:

95% of users don't change a damn thing.

But if you want to get the most out of any tool, it’s worth tweaking the defaults. That goes for Unitlab Annotate too. While our default settings are sensible and work for most people most of the time, there are real benefits to customizing your setup.

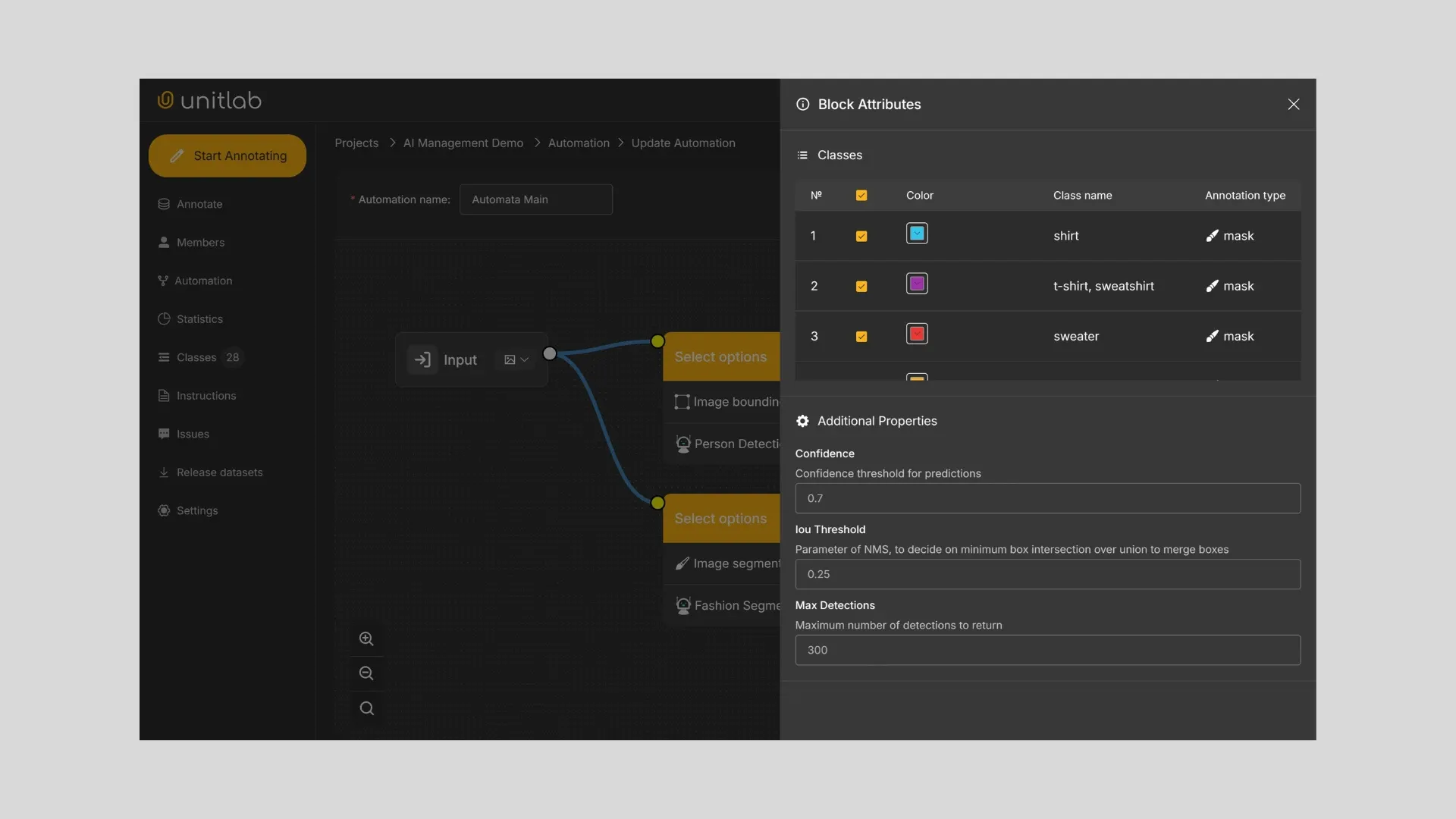

Foundational and BYO models come with default class names, label colors, and prediction settings. Usually, they just work. But sometimes you'll want to rename classes, adjust label colors, or fine-tune thresholds, especially if you're working on a complex dataset or need to keep similar classes easy to tell apart.

To do that, go to Automation > Your flow, then click the vertical dots to edit settings.

Once the settings panel opens, you can adjust:

- Class Name: Change or translate the default class name to match your labeling needs.

- Color: Tweak label colors to improve clarity or stay consistent with your label map.

- Confidence: Set a minimum confidence threshold (e.g., 0.7 = 70%). Only predictions scoring above this threshold are kept. Raise it to cut false positives; lower it if the model is missing objects.

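- IoU Threshold: Set how much two predicted boxes may overlap, measured as intersection over union (IoU), before the lower-scoring one is discarded (e.g., 0.25 = 25%). Lower it if the model produces duplicate boxes for the same object; raise it if genuinely overlapping objects, such as people in a crowd, are being dropped.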

- Max Detections: Cap the number of detections kept per image. If set to 300, for example, only the top 300 predictions (by confidence) are kept. Adjust this based on how many objects your images typically contain.
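To show how these settings typically interact, here is a minimal sketch of a standard detection post-processing pipeline: confidence filtering, greedy non-maximum suppression (NMS) using the IoU threshold, a top-N cap, and class/color remapping. It is an illustration under assumptions, not Unitlab's implementation: the `Prediction` type, the `postprocess` function, the order of the steps, and the choice of per-class (rather than class-agnostic) NMS are all hypothetical.

```python
from dataclasses import dataclass, replace
from typing import Dict, List, Optional, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


@dataclass(frozen=True)
class Prediction:
    box: Box
    score: float                 # model confidence in [0, 1]
    label: str                   # class name as emitted by the model
    color: Optional[str] = None


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix1 - ix0) * max(0.0, iy1 - iy0)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def postprocess(preds: List[Prediction],
                confidence: float = 0.7,
                iou_threshold: float = 0.25,
                max_detections: int = 300,
                class_names: Optional[Dict[str, str]] = None,
                colors: Optional[Dict[str, str]] = None) -> List[Prediction]:
    class_names = class_names or {}
    colors = colors or {}

    # Confidence: keep only predictions scoring above the threshold, best first.
    kept = sorted((p for p in preds if p.score > confidence),
                  key=lambda p: p.score, reverse=True)

    # IoU threshold: greedy per-class NMS. A box is dropped if it overlaps an
    # already-kept box of the same class by more than the threshold.
    survivors: List[Prediction] = []
    for p in kept:
        if all(s.label != p.label or iou(p.box, s.box) <= iou_threshold for s in survivors):
            survivors.append(p)

    # Max detections: survivors are sorted by score, so keep the top N.
    survivors = survivors[:max_detections]

    # Class name and color: rename classes, then look up colors by the new name.
    result = []
    for p in survivors:
        name = class_names.get(p.label, p.label)
        result.append(replace(p, label=name, color=colors.get(name, p.color)))
    return result


preds = [
    Prediction((0, 0, 100, 100), 0.95, "car"),
    Prediction((5, 5, 105, 105), 0.90, "car"),          # near-duplicate of the first box
    Prediction((200, 200, 260, 260), 0.80, "person"),
    Prediction((300, 300, 340, 340), 0.40, "person"),   # below the confidence threshold
]
out = postprocess(preds, class_names={"car": "vehicle"}, colors={"vehicle": "#ff0000"})
print([(p.label, p.score, p.color) for p in out])
# → [('vehicle', 0.95, '#ff0000'), ('person', 0.8, None)]
```

The two car boxes have an IoU of about 0.82, well above 0.25, so the lower-scoring one is suppressed. Raising `iou_threshold` to 0.9 would keep both, which is the trade-off the IoU setting controls.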

Conclusion

AI auto-annotation models have come a long way. Today, they’re fast, accurate, and affordable. When you combine them with human review, you get results that neither AI nor humans could deliver alone.

With Automation Workflows and the ability to manage your own models, Unitlab Annotate gives you the tools to build the exact dataset your project needs. Whether you're speeding up labeling or customizing model behavior, the goal is the same: smarter, faster, and more flexible annotation for your next AI/ML dataset.

Explore More

Want to go deeper into how automation and AI model management work inside Unitlab Annotate? Start here: