Data labeling has always been one of the biggest bottlenecks in building AI systems. It takes large amounts of time, money, and human effort to prepare quality AI/ML datasets at scale. As of now, no industry standard for labeling datasets exists. It is safe to say that data annotation is not solved yet, especially when it comes to addressing complex problems that require advanced automation and adaptability: challenges that agentic AI is designed to tackle.

The latest development is the introduction of agentic AI in the data labeling process, powered by foundational technologies like generative AI and large language models. Instead of treating data annotation as a manual, tedious task or a series of repetitive tasks, agentic AI can reason, plan, and act on its own with minimal oversight. It doesn’t just label source data; it decides how labeling should be done.

Agentic AI changes data annotation by enabling autonomous decision-making and continuous learning. An agentic AI system coordinates multiple AI agents to manage complex data annotation processes with limited supervision.

In this post, we’ll explore an emerging standard: agentic AI for data annotation with Unitlab AI. By the end, you will learn:

- what agentic AI is

- why it matters for data annotation

- how agentic AI operates in the context of data annotation

- how Unitlab AI is building agentic workflows

- benefits, challenges, and the future of this approach

What is Agentic AI?

You’ve probably heard of AI agents. An AI agent is an autonomous software system that uses artificial intelligence to perform specific tasks on behalf of humans, often with minimal oversight.

While an AI agent executes particular functions, agentic AI refers to the broader system that coordinates multiple AI agents, sometimes called autonomous agents, each responsible for a different role within a larger framework. In enterprises, these agents act on the organization's behalf, often with access to proprietary data: hence the name “agent.”

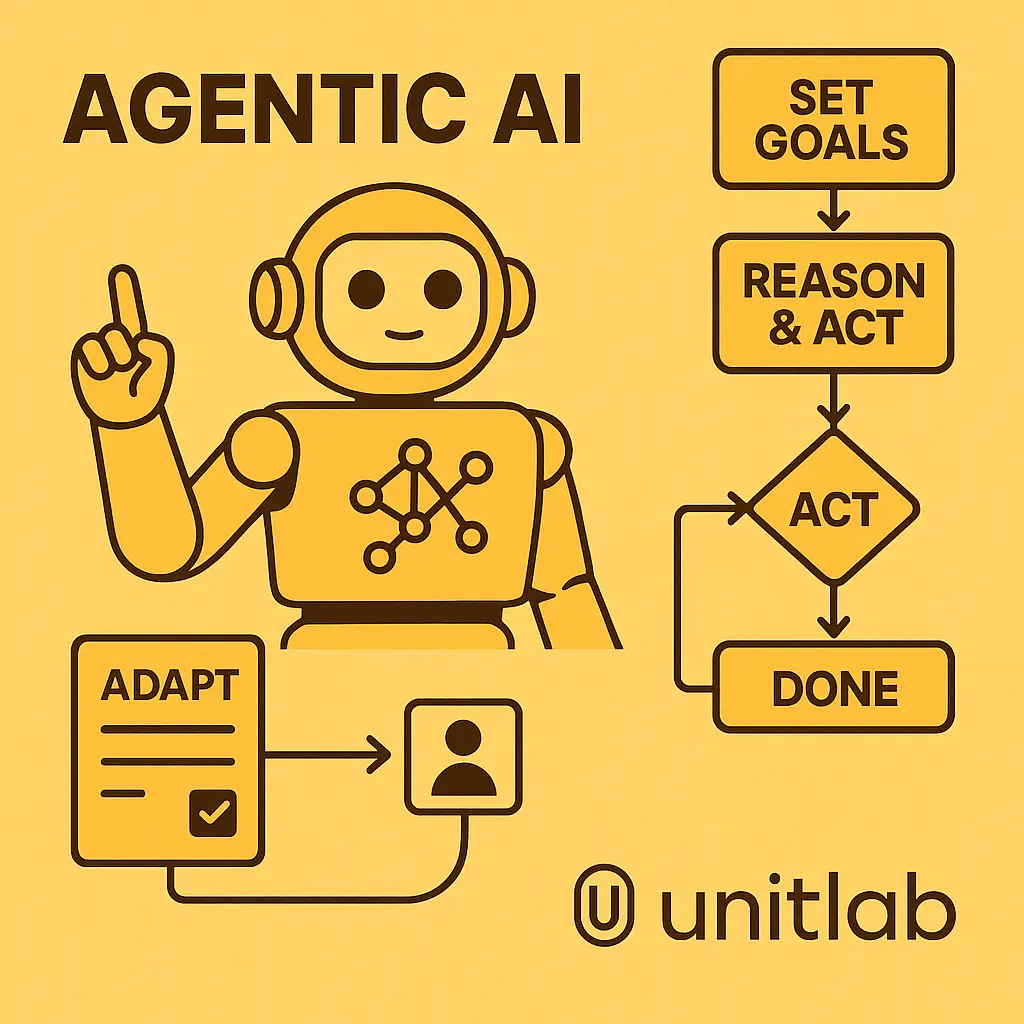

Agentic AI is an approach to building such agents. Instead of hard-coding paths (control logic) to the answer, this paradigm emphasizes giving AI agents the ability to function like digital workers: set goals, adapt strategies, and act flexibly instead of following programmed paths. These agentic AI systems can act autonomously to achieve their goals without direct human intervention. They reason and act, which is where the ReAct (Reasoning and Acting) paradigm gets its name.

As Karim et al. (2025) explain, agentic systems combine reasoning and decision-making with interaction capabilities. They’re not just task performers but planners that adjust based on context. Agentic AI relies on knowledge representation to organize and utilize information for autonomous reasoning and efficient decision-making.

In data annotation, this means agentic AI doesn’t only label source data. It also decides which samples to prioritize, when to involve humans, and how to improve its labeling process over time.
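To make the prioritization idea concrete, here is a minimal sketch, assuming every unlabeled sample already carries a model confidence score between 0 and 1. The `Sample` type, the `prioritize` function, and the scores are hypothetical illustrations, not part of any specific tool:

```python
from dataclasses import dataclass


@dataclass
class Sample:
    sample_id: str
    confidence: float  # model's confidence in its top label, 0..1 (assumed input)


def prioritize(samples: list[Sample], budget: int) -> list[Sample]:
    """Return the `budget` least-confident samples, most uncertain first."""
    ranked = sorted(samples, key=lambda s: s.confidence)
    return ranked[:budget]


pool = [
    Sample("img_001", 0.98),
    Sample("img_002", 0.51),
    Sample("img_003", 0.74),
    Sample("img_004", 0.33),
]

# With a review budget of two, the two least-confident samples go to humans first.
review_queue = prioritize(pool, budget=2)
print([s.sample_id for s in review_queue])  # ['img_004', 'img_002']
```

Sorting by confidence is only one possible strategy. An agent could just as well rank by expected impact on the model or by class rarity.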

Why Data Annotation Needs Agentic AI

The short answer: it outperforms the two approaches currently available, manual and automatic annotation. Both often require significant human intervention, which agentic AI aims to minimize.

Manual labeling

Manual annotation by human data labelers is seen as the gold standard: humans can see the bigger picture, consider contextual nuances, and consult experts before labeling data.

However, this traditional approach has its fair share of challenges, most of which stem from the inherent limits of human speed and efficiency.

- Scaling: state-of-the-art models like GPT-5 require datasets with billions of tokens, while a medium-sized human annotation team can generally process only thousands of instances per day.

- Costs: manual annotation is inherently costly and slow. Data labeling often exceeds 25% of total ML project budgets, while datasets take months or even years to produce iteratively.

- Human factors: humans are not naturally consistent. We are prone to cognitive biases and fatigue. Errors slip through, reducing label consistency to below 70% in a typical data labeling project.
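One common way to put a number on label consistency is agreement between two annotators who labeled the same items. The sketch below computes raw percent agreement and Cohen's kappa, which corrects for agreement expected by chance. The annotator data is made up for illustration, and the source does not say which measure its 70% figure refers to:

```python
from collections import Counter


def percent_agreement(a: list[str], b: list[str]) -> float:
    """Share of items on which both annotators chose the same label."""
    return sum(x == y for x, y in zip(a, b)) / len(a)


def cohens_kappa(a: list[str], b: list[str]) -> float:
    """Agreement corrected for chance: (p_o - p_e) / (1 - p_e)."""
    n = len(a)
    p_o = percent_agreement(a, b)
    count_a, count_b = Counter(a), Counter(b)
    # Chance agreement: both annotators pick the same label independently.
    p_e = sum(count_a[label] * count_b[label] for label in count_a) / (n * n)
    if p_e == 1:  # both annotators used a single identical label throughout
        return 1.0
    return (p_o - p_e) / (1 - p_e)


# Hypothetical labels from two annotators on the same ten images.
annotator_a = ["cat"] * 5 + ["dog"] * 5
annotator_b = ["cat", "cat", "cat", "dog", "dog", "dog", "dog", "dog", "dog", "cat"]

print(round(percent_agreement(annotator_a, annotator_b), 2))  # 0.7
print(round(cohens_kappa(annotator_a, annotator_b), 2))       # 0.4
```

Here 70% raw agreement shrinks to a kappa of 0.4 once chance agreement is removed, which is why the two numbers should not be compared directly.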

Automatic labeling

This approach utilizes the programmatic nature of machines: program it once and run it everywhere. Programs are exceptionally fast: with an algorithm, you can label thousands of data points in a fraction of a second. Machine learning algorithms enable rapid labeling of large datasets, but may struggle with context-dependent nuances.

However, data annotation is heavily context-dependent. Each domain has its nuances and subjectivity that machines fail to capture. In other words, machines excel where humans fail, and vice versa.

This leads to a common question: why not combine both approaches in a way that maximizes quality and efficiency while minimizing costs and resources? To borrow Peter Thiel's framing, the goal is synergy between man and machine.

Agentic AI in Data Annotation

Agentic systems draw on several reasoning and control patterns: chain-of-thought, tree-of-thought, ReAct, human-in-the-loop, and others. Each has pros and cons, but all aim at autonomous, proactive systems that can work with minimal human input.

In data annotation, the human-in-the-loop approach is becoming more common. The idea is that AI agents can be trained to reason and act on their own. When unsure about the data, they flag it for human review.

The exact workings are technically complex, but the intuition is simple: combine the strengths of humans and machines in the optimal way. Agentic AI is particularly effective at managing complex workflows that involve multiple steps and decision points in data annotation.

Workflows

A high-level view of agentic data annotation workflows looks like this:

- Pre-labeling: AI agents generate first-pass labels using pre-trained models and can handle various data types: text, images, and audio.

- Decision-making: The system selects which data to auto-label, skip, or send to humans.

- Human-in-the-loop: Annotators handle edge cases and provide corrections.

- Continuous learning: The agent refines its strategies with every round of feedback.

The result is a system that gets faster and more accurate over time.
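The four steps above can be sketched as a single labeling round. This is a simplified illustration under stated assumptions, not a definitive implementation: the thresholds, the `Prediction` type, and the `route` and `run_round` functions are all hypothetical. Treating very low confidence as "skip" is just one possible reading of that step.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical thresholds; real values would be tuned per project.
AUTO_ACCEPT = 0.90
SKIP_BELOW = 0.20


@dataclass
class Prediction:
    label: str
    confidence: float


def route(pred: Prediction) -> str:
    """Decide what happens to one pre-labeled item."""
    if pred.confidence >= AUTO_ACCEPT:
        return "auto_label"
    if pred.confidence < SKIP_BELOW:
        return "skip"
    return "human_review"


def run_round(
    items: list[str],
    pre_label: Callable[[str], Prediction],
    ask_human: Callable[[str, Prediction], str],
) -> tuple[dict[str, str], list[tuple[str, str, str]]]:
    labels: dict[str, str] = {}
    corrections: list[tuple[str, str, str]] = []  # (item, model label, human label)
    for item in items:
        pred = pre_label(item)              # 1. pre-labeling
        decision = route(pred)              # 2. decision-making
        if decision == "auto_label":
            labels[item] = pred.label
        elif decision == "human_review":    # 3. human-in-the-loop
            human_label = ask_human(item, pred)
            labels[item] = human_label
            if human_label != pred.label:
                corrections.append((item, pred.label, human_label))
        # "skip": the item stays unlabeled in this round
    return labels, corrections              # 4. corrections feed the next round


# Stand-ins for a real model and a real annotator.
fake_model = {
    "a.jpg": Prediction("cat", 0.97),
    "b.jpg": Prediction("dog", 0.62),
    "c.jpg": Prediction("cat", 0.10),
}
human_answers = {"b.jpg": "wolf"}

labels, corrections = run_round(
    list(fake_model),
    pre_label=fake_model.__getitem__,
    ask_human=lambda item, pred: human_answers[item],
)
print(labels)       # {'a.jpg': 'cat', 'b.jpg': 'wolf'}
print(corrections)  # [('b.jpg', 'dog', 'wolf')]
```

The returned `corrections` list is what a continuous-learning step would consume, for example to retrain the model or to adjust the thresholds.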

Benefits

This agentic AI paradigm brings concrete benefits:

- Mass labeling: handles large volumes of data consistently at acceptable quality.

- Uncertainty detection: flags data points where humans must decide (e.g., medical images).

- Feedback loops: learns from corrections and human insight, improving continuously.

This mechanism allows AI agents to become high-quality annotators like experienced humans, but much faster.
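As one simple illustration of a feedback loop, the sketch below uses human review results to recalibrate the confidence cutoff above which labels are accepted automatically. The function name, the data, and the target precision are assumptions made for this example. A production system might instead retrain or fine-tune the underlying model.

```python
def calibrate_threshold(
    reviewed: list[tuple[float, bool]], target_precision: float
) -> float:
    """Pick the lowest confidence cutoff that still meets the target precision.

    `reviewed` holds (model confidence, was the model label correct?) pairs
    collected from human review. Returns 1.0 if no cutoff qualifies.
    """
    best = 1.0
    for cutoff in sorted({conf for conf, _ in reviewed}, reverse=True):
        kept = [ok for conf, ok in reviewed if conf >= cutoff]
        if sum(kept) / len(kept) >= target_precision:
            best = cutoff
    return best


# Hypothetical review results from one round of human checks.
reviewed = [
    (0.95, True),
    (0.90, True),
    (0.85, True),
    (0.80, False),
    (0.75, True),
    (0.60, False),
]

# Everything at or above 0.85 was correct; including 0.80 drops precision to 75%.
print(calibrate_threshold(reviewed, target_precision=0.9))  # 0.85
```

Run after every review round, a step like this lets the auto-label band widen as the model earns trust and narrow again when reviewers start finding more errors.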

Unitlab AI’s Approach

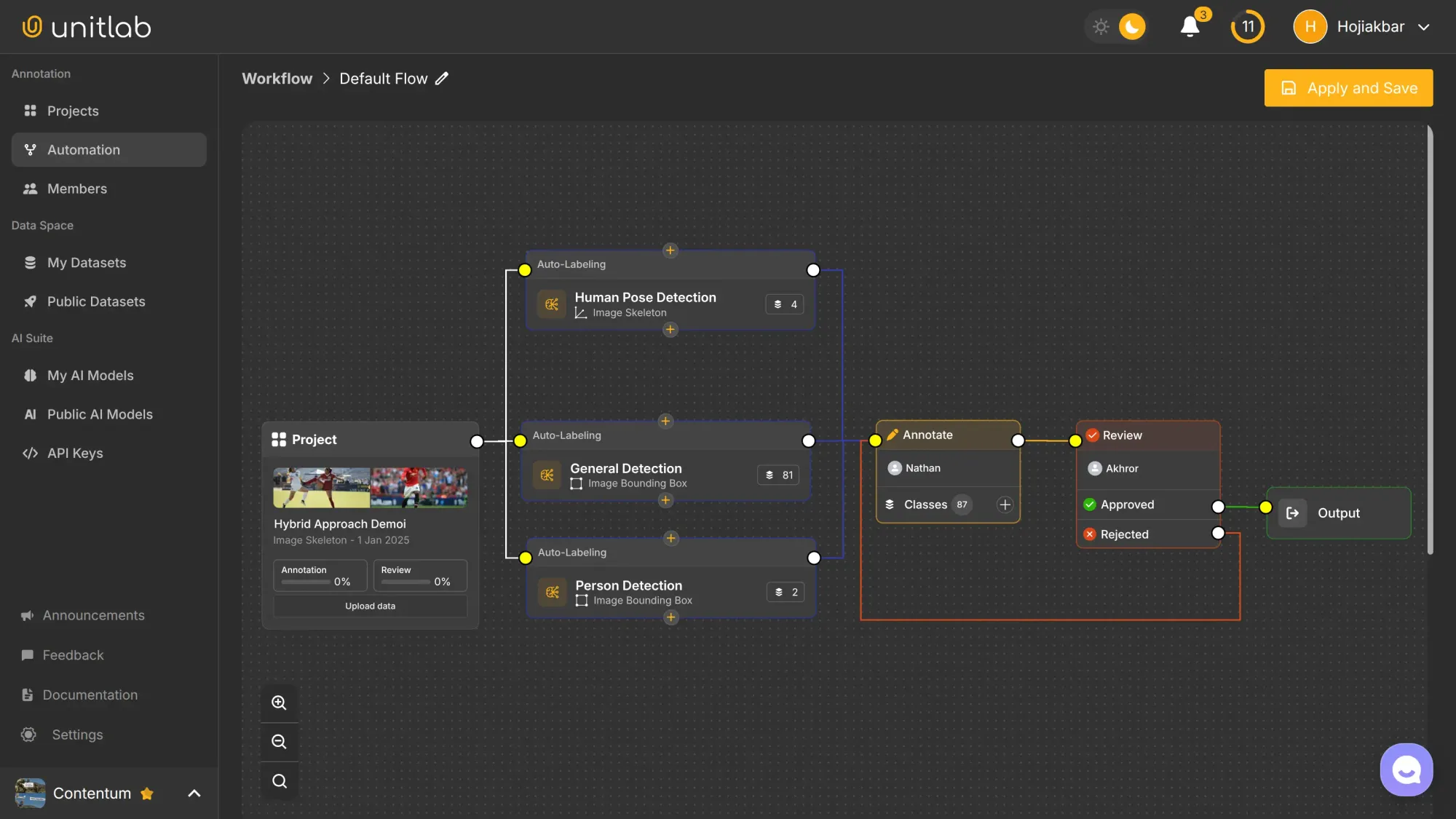

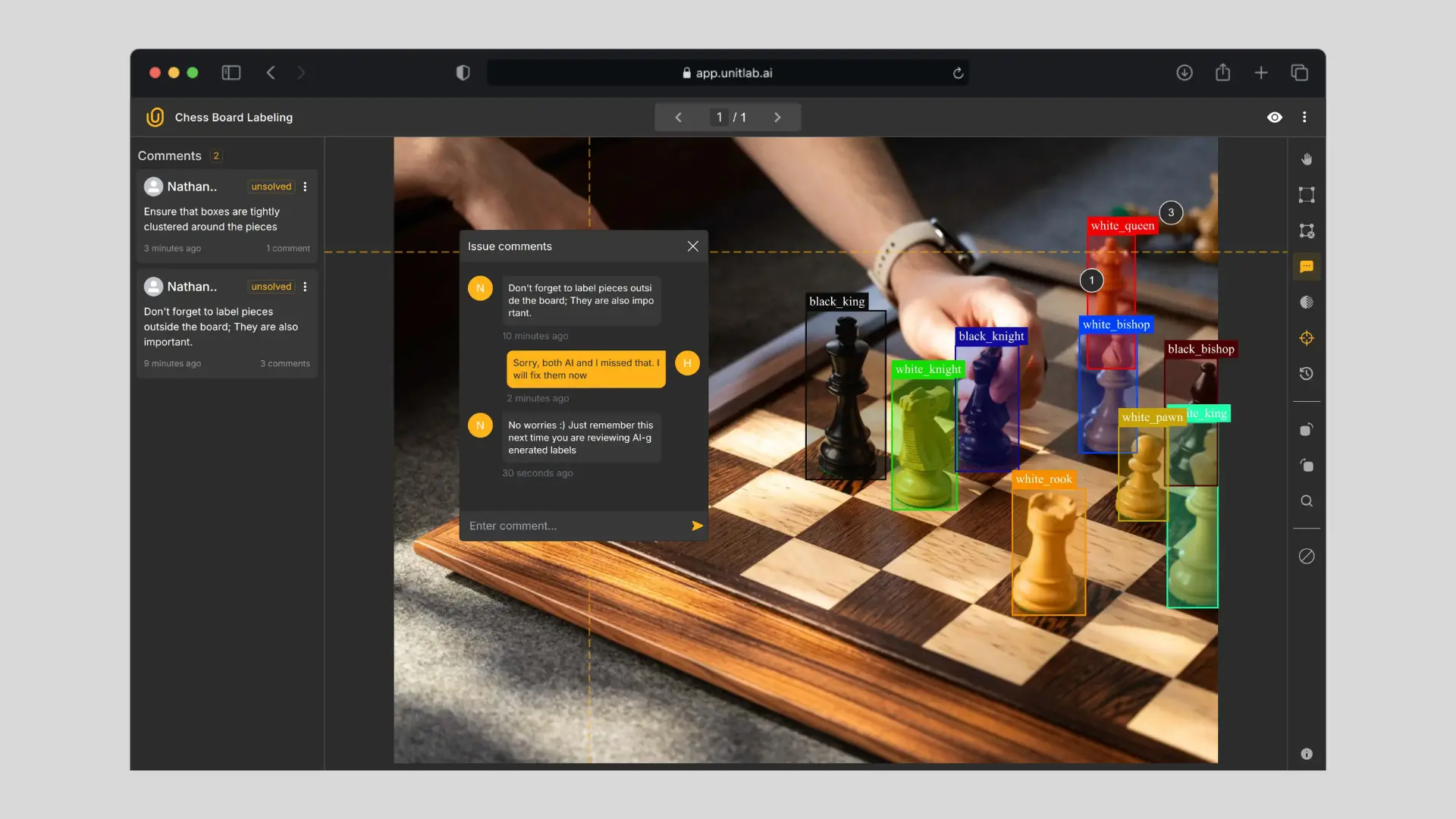

At Unitlab AI, the human-in-the-loop approach is already in practice, with further innovations underway. Unitlab AI provides a full-fledged, automated platform to set up AI agents and human-in-the-loop methods for data annotation:

- Model Management: Teams can connect their own models or use built-in ones for projects. This BYO (Bring Your Own) option provides workflow flexibility. Unitlab AI also allows integration with external systems to enhance data annotation workflows and leverage additional data sources. Learn more.

- Automation Workflow: Chain multiple models together to enrich annotations, and assign humans to review flagged cases. This workflow is fully customizable. Learn more.

- Human Collaboration: Unitlab Annotate enables annotators and reviewers to check flagged labels and communicate within the platform. Their work feeds back into the AI agents. Learn more.

This approach ensures quality, efficiency, and cost control. In fact, Unitlab Annotate’s agentic workflows can cut labeling time by 15x and costs by 5x. To put this into perspective, a data labeling task that takes 30 days and $10,000 with manual annotation can be completed in 2 days for $2,000 with Unitlab AI.

This combination of AI systems and human review makes Unitlab a practical example of agentic AI in action.
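The general idea behind an automation workflow, chaining models and flagging uncertain results for reviewers, can be sketched in a few lines. This is a generic illustration and not Unitlab's API: every function name, the annotation format, and the hard-coded model outputs below are hypothetical stand-ins.

```python
from typing import Callable

Annotation = dict[str, object]
Stage = Callable[[Annotation], Annotation]


def detect_objects(ann: Annotation) -> Annotation:
    # Stand-in for a detection model: returns (label, confidence) pairs.
    return {**ann, "boxes": [("car", 0.93), ("person", 0.58)]}


def add_caption(ann: Annotation) -> Annotation:
    # Stand-in for a second model that enriches the first model's output.
    labels = [label for label, _ in ann["boxes"]]
    return {**ann, "caption": "Image with " + " and ".join(labels)}


def flag_low_confidence(ann: Annotation, threshold: float = 0.6) -> Annotation:
    # Mark the item for human review if any detection is below the threshold.
    needs_review = any(score < threshold for _, score in ann["boxes"])
    return {**ann, "needs_review": needs_review}


def run_chain(item: str, stages: list[Stage]) -> Annotation:
    """Pass one item through each stage in order, accumulating annotations."""
    ann: Annotation = {"item": item}
    for stage in stages:
        ann = stage(ann)
    return ann


result = run_chain("street.jpg", [detect_objects, add_caption, flag_low_confidence])
print(result["caption"])       # Image with car and person
print(result["needs_review"])  # True
```

Because each stage takes and returns the same annotation structure, stages can be reordered, swapped, or extended without touching the rest of the chain.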

Benefits, Challenges, and Future

Benefits

The human-in-the-loop paradigm addresses the problems inherent in traditional labeling. We need high-quality AI/ML datasets to build AI/ML systems for use in business settings. Our goal is to develop systems, not annotate data for the sake of annotating it.

This agentic AI approach seems to be on its way to becoming the default, especially for larger data annotation projects.

By applying agentic AI, teams gain:

- Efficiency: Less time spent on obvious labels.

- Scalability: Handle millions of data points without linear human cost.

- Higher quality: AI detects patterns, humans focus on judgment-heavy cases.

- Cost reduction: 5x lower costs with Unitlab AI while maintaining quality.

Challenges and Considerations

However, this human-in-the-loop system is neither perfect nor easy. Anyone telling you that you can have it all is probably trying to sell you something.

First of all, it takes time, money, and expertise to configure the system. The initial cost might be a big constraint for many small-scale teams.

Secondly, if your data labeling project is not at scale, why bother implementing this new paradigm? Manual labeling with some goodies from a data annotation platform like Unitlab AI is enough for most smaller projects. Use the right tool for the job; don't try to fit the task into the tool.

Additionally, you must address these problems along the way:

- Edge cases: Complex or subjective data labels still need humans.

- Bias: If AI agents are biased from the first day, final annotations will reflect those biases.

- Domain specificity: What works for retail images does not work for medical scans. Obviously.

- Unstructured data: Unstructured data, such as emails and social media content, presents additional challenges for annotation and often requires advanced AI techniques.

These challenges should make you think carefully before adopting this new human-in-the-loop paradigm for data annotation. That said, when the project is right (large-scale, critical, and time-bound), this agentic AI approach is by far the best path you can take.

Future of Agentic Data Annotation

It is almost impossible at this point to predict the course of AI technologies, given the pace of innovation and the complex ways these technologies interact. However, we can expect a few trends in the near future:

- Multi-agent systems: multiple agents collaborating on tasks.

- Domain-specialized agents: tailored to industries like healthcare or finance.

- Real-time annotation: streaming data labeled instantly.

- Closer ties to MLOps: annotation integrated with training and deployment.

- Reinforcement learning: enabling agentic AI systems to adapt and improve through trial and error, optimizing decision-making and efficiency.

Agentic AI will increasingly shape how data is annotated, with humans still in the loop.

Conclusion

Data annotation has long been the bottleneck of AI development. Agentic AI changes that.

By making annotation more autonomous, strategic, and adaptive, agentic AI transforms labeling into a faster, cheaper, and smarter process.

Unitlab AI is building this future today. It is combining automation with human expertise to deliver scalable, high-quality annotations.

If you want to see how agentic annotation works in practice, Unitlab Annotate is a great place to start.

Explore More

Follow these articles to learn more on the topic:

- Low-Code and No-Code Tools for Data Annotation

- Unitlab Automation Workflow

- AI Model Management in Unitlab Annotate

References

- Md Monjurul Karim et al. (Aug 2, 2025). Transforming Data Annotation with AI Agents: A Review of Architectures, Reasoning, Applications, and Impact. Future Internet, 2025.

- Hojiakbar Barotov (Dec 31, 2024). How Unitlab AI Aims to Transform Data Annotation. Unitlab Blog.
