Image labeling is the foundation of any computer vision AI/ML model.

As the first step in building your AI/ML pipeline, image annotation deserves close attention. The quality of the final AI/ML model closely mirrors the quality of its training data, a principle known as GIGO (Garbage In, Garbage Out).

It is essential that image labeling is fast, efficient, and accurate. You most likely need a robust infrastructure with a proper image labeling service at the center.

There are many third-party and open-source image labeling platforms to choose from for your computer vision project. With so many options available, as well as the fast-changing technologies in the AI/ML field, it is challenging to compare, choose, and commit to one image annotation tool for your project.

The stakes are especially high if you are undertaking a large-scale initiative, and the relative advantages and disadvantages of any data labeling platform are heavily context-dependent.

To address this recurring challenge, we’ve created this guide to help you consider the 11 most important factors in choosing a data annotation service that fits your needs. It is based on the requirements of your AI/ML project and the capabilities of the data annotation platform.

We will start with broad considerations of your requirements and move to specific features of the vendors that provide the image labeling platform.

1. What is Your Task?

The most important defining factor is your project. Your task dictates literally everything: from image annotation type to automation to pricing plans. Here is the key question to consider:

- What image annotation type does your project use?

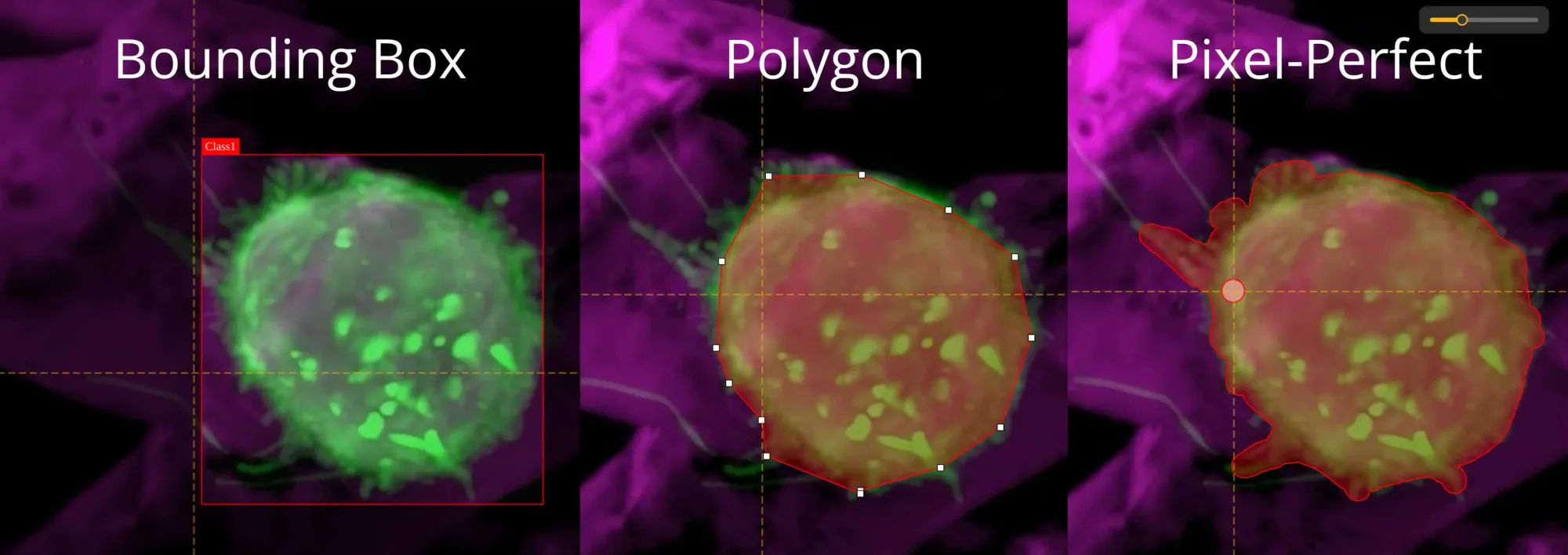

If your AI/ML dataset does not require much complexity or outstanding accuracy, you may choose traditional methods, such as bounding boxes and polygons, which most image labeling tools offer.

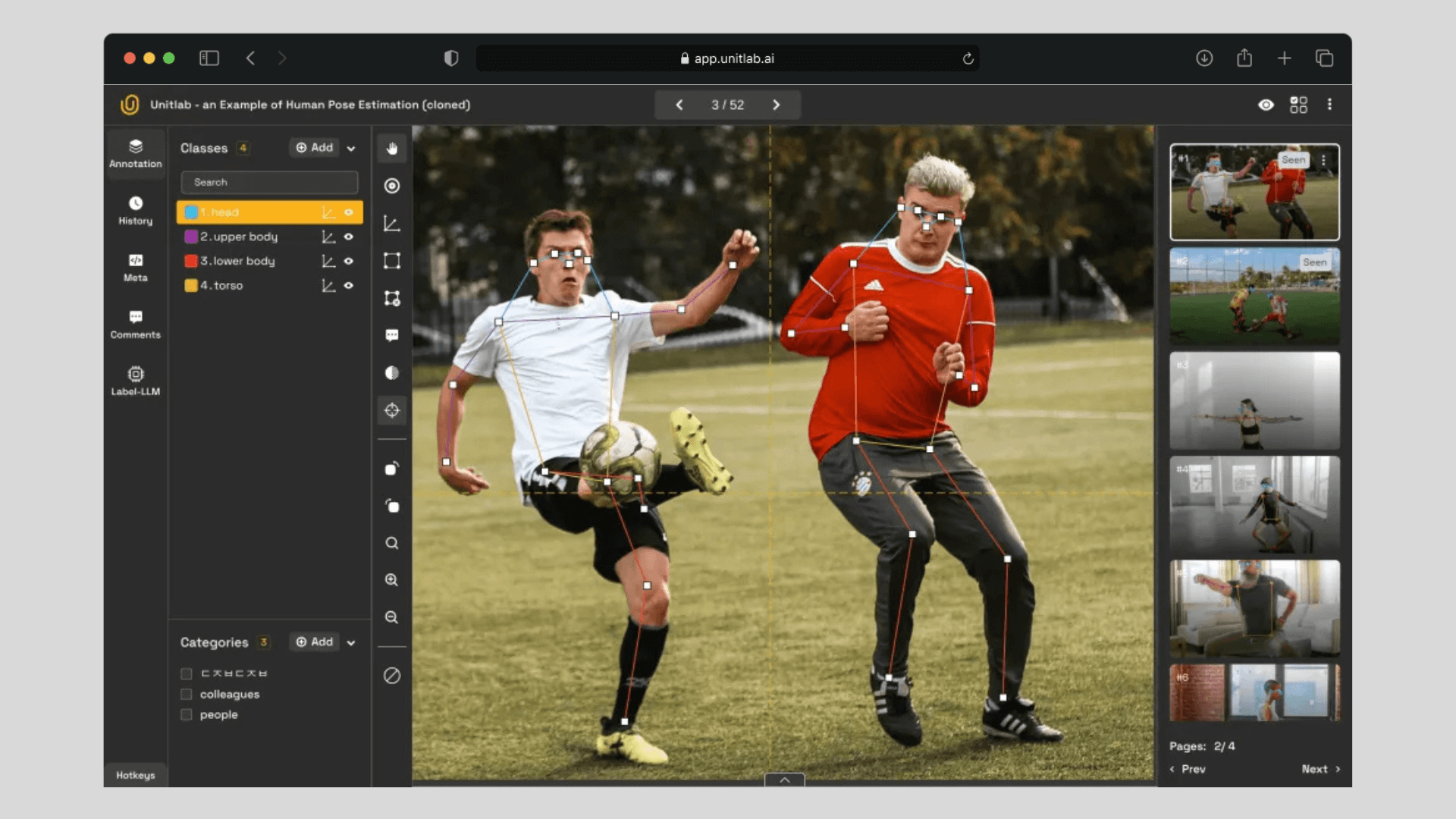

However, if your computer vision project operates in a specialized industry, such as medical imaging, where highly precise image sets are required, you might need a platform that offers pixel-perfect segmentation and specializes in the field. For example, Unitlab Annotate is one of the few platforms that provide pixel-perfect segmentation.

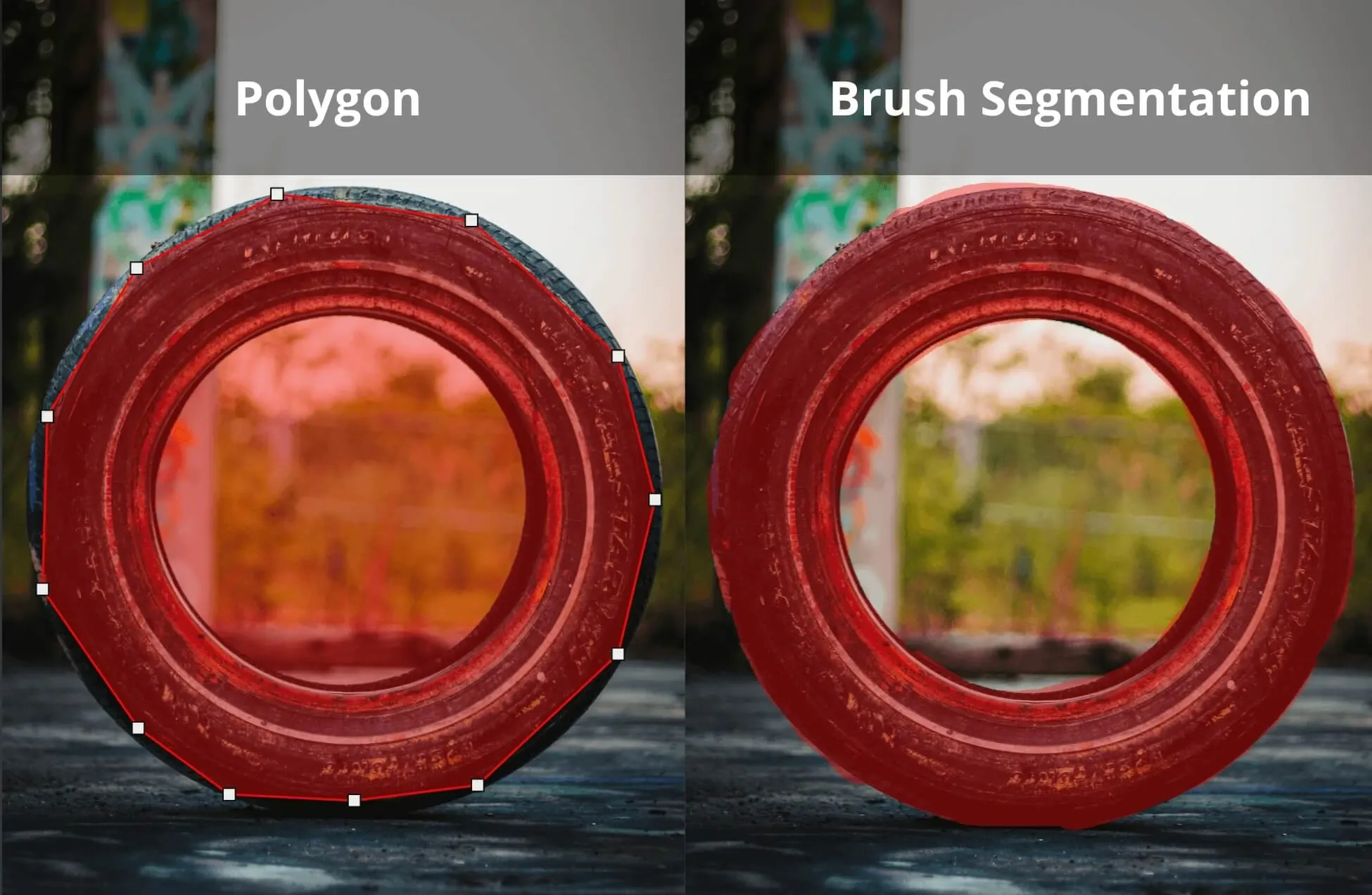

You would generally prefer pixel-perfect segmentation to polygons for round or ring-shaped objects, for instance. There is no efficient way to annotate objects such as rings and tires with traditional methods. In the image below, the polygon annotation includes the empty space inside the tires, which is incorrect, while brush segmentation, a type of pixel-perfect annotation, does not.
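
To make the difference concrete, here is a minimal sketch that counts pixels on a small synthetic grid. The grid size, the radii, and the `disk` helper are assumptions chosen for illustration; they do not come from any particular annotation tool. The sketch compares a single outer polygon (approximated as a filled disk) with a ring-shaped pixel mask.

```python
# Illustrative only: compare what a single outer polygon covers with what a
# ring-shaped pixel mask covers for a tire-like object on a small grid.

def disk(size, cx, cy, r):
    """Return the set of (x, y) pixels within distance r of the centre (cx, cy)."""
    return {
        (x, y)
        for y in range(size)
        for x in range(size)
        if (x - cx) ** 2 + (y - cy) ** 2 <= r ** 2
    }

SIZE = 64                        # hypothetical image side, in pixels
outer = disk(SIZE, 32, 32, 28)   # everything inside the tire's outer edge
hole = disk(SIZE, 32, 32, 16)    # the empty space inside the tire

polygon_pixels = outer           # one outer polygon labels everything inside it
mask_pixels = outer - hole       # a brush mask can leave the hole unlabeled

wrong = polygon_pixels - mask_pixels
share_wrong = len(wrong) / len(polygon_pixels)
print(f"polygon: {len(polygon_pixels)} px, mask: {len(mask_pixels)} px")
print(f"{share_wrong:.0%} of the polygon's pixels are not tire")
```

With these made-up radii, roughly a third of the pixels labeled by the polygon belong to the hole rather than the tire, and a model trained on such labels would learn from that noise.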

Generally, the more advanced your project’s image annotation needs, the fewer suitable data annotation platforms will be available. For example, if your project requires 3D LiDAR annotation (labeling point clouds captured by laser-based sensors), only a handful of platforms offer this capability.

Because this is the most important factor, it is worthwhile to explore the tools each potential platform offers. Almost all data annotation platforms provide demos; many also offer free plans. These resources can help you determine the best starting point for your project.

2. How Secure is the Platform?

Your project and training data are proprietary, so the image labeling platform you choose must have established procedures to keep your data secure. Regulatory or industry-specific compliance requirements, such as GDPR or HIPAA, might also apply.

Ensure the platform meets these standards. This point may seem overly cautious, but it can prevent major issues in the future.

3. Customer Support is Essential

Customer support is often overlooked during decision-making but becomes vital during the image labeling process. No matter how intuitive your chosen tool might be, you or your team will almost certainly need customer support at some point.

To ensure the annotation process runs smoothly and stays on track during bottlenecks, the support team should be fast and competent enough to provide necessary assistance.

While user reviews and ratings are helpful, it’s more effective to request a demo or schedule a meeting to address specific questions directly with the vendor.

4. On-Premises Solutions

Some projects, particularly those dealing with sensitive or classified data, may require an on-premises solution. Ensure that the vendor offers on-premises deployment if your organization has strict security or compliance needs. Unitlab Annotate offers on-premises solutions and helps to set up your pipeline.

5. Insource or Outsource?

Now we come to the actual process of image annotation: who will annotate the image set? If you do not already have in-house image labelers, it might be costly to hire, train, and create a team of image annotators. Conversely, if you already have an experienced team, they can handle the annotations directly.

A common practice is to hire image annotators provided by the vendor platform. These annotators are typically more efficient because they are already accustomed to labeling images on the platform. For newcomers, outsourcing can also be cheaper since you will not need to invest in training and upskilling.

6. Assuring High Quality

The main purpose of using a data annotation platform is to streamline the process of creating high-quality datasets for your AI/ML projects. Thus, platforms must provide mechanisms to ensure quality. If you outsource the labeling workforce, the platform should allow you to check the quality of the output.

If you bring your own team, the data annotation platform should have robust mechanisms to maintain consistent, high-quality image sets. Unitlab Annotate, for example, provides two roles for this purpose: Annotator and Reviewer.

Annotators label the images, while reviewers oversee the process to maintain consistency and quality. Check whether your potential platform provides similar features to assure high-quality datasets.
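
One common way to quantify how closely an annotator's bounding box matches a reviewer's correction is intersection over union (IoU). The sketch below is a generic illustration, not a feature of any specific platform; the `(x_min, y_min, x_max, y_max)` box format and the 0.8 threshold are assumptions.

```python
def iou(box_a, box_b):
    """Intersection over union for boxes given as (x_min, y_min, x_max, y_max)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap, clamped at zero when the boxes are disjoint.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def needs_rework(annotator_box, reviewer_box, min_iou=0.8):
    """Flag a label when it overlaps too little with the reviewer's box.

    The 0.8 threshold is a hypothetical default; tune it per project.
    """
    return iou(annotator_box, reviewer_box) < min_iou


print(iou((0, 0, 10, 10), (5, 0, 15, 10)))
print(needs_rework((0, 0, 10, 10), (5, 0, 15, 10)))
```

Tracking this score per annotator over time gives a simple consistency metric to compare against whatever statistics a platform reports.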

7. How Easy is It to Use?

Each image labeling platform is a tool in your pipeline. It must seamlessly integrate into your workflow and be easy for your team to use (if you have your own image annotation team).

The objective is to annotate training images accurately and efficiently, not to wrestle with a cumbersome vendor platform.

Intuitiveness is subjective, so it’s wise to test the platform with your team to determine which one is most user-friendly and facilitates rather than hinders progress.

8. Can We Automate It?

Automation in image annotation platforms can be split into two categories:

- Automating project management tasks.

- Leveraging AI-powered auto-annotation tools.

CLI, SDK, APIs

Many manual tasks can be automated: uploading and downloading data, monitoring, and project setup. Check whether the platform offers CLI, SDK, or APIs to build pipelines. Additionally, verify if the platform integrates well with your current tech stack and supports importing/exporting data efficiently.
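
As an example of what efficient export can look like, many tools can read and write the COCO detection format. The sketch below converts a simple in-memory record into a COCO-style dictionary. The input record layout (`file`, `width`, `height`, `boxes`) and the `to_coco` helper are hypothetical; only the output field names follow the COCO convention.

```python
import json


def to_coco(records, class_names):
    """Convert simple per-image records into a COCO-style detection dict."""
    categories = [{"id": i + 1, "name": name} for i, name in enumerate(class_names)]
    cat_id = {c["name"]: c["id"] for c in categories}
    images, annotations = [], []
    for image_id, rec in enumerate(records, start=1):
        images.append({"id": image_id, "file_name": rec["file"],
                       "width": rec["width"], "height": rec["height"]})
        for label, (x_min, y_min, x_max, y_max) in rec["boxes"]:
            w, h = x_max - x_min, y_max - y_min
            annotations.append({
                "id": len(annotations) + 1,
                "image_id": image_id,
                "category_id": cat_id[label],
                "bbox": [x_min, y_min, w, h],  # COCO boxes are [x, y, width, height]
                "area": w * h,
                "iscrowd": 0,
            })
    return {"images": images, "annotations": annotations, "categories": categories}


# Hypothetical input: one image with two corner-format boxes.
records = [{"file": "car_001.jpg", "width": 640, "height": 480,
            "boxes": [("tire", (100, 300, 180, 380)), ("car", (50, 100, 600, 400))]}]
coco = to_coco(records, ["car", "tire"])
print(json.dumps(coco, indent=2))
```

If a platform's SDK or API can produce or consume a file like this directly, you avoid writing and maintaining conversion scripts of your own.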

Auto-Annotation

Advancements in AI models such as YOLO, often pretrained on public datasets like COCO, have made it possible to annotate large datasets quickly and with fairly high accuracy. As a result, you can cut image labeling costs and automate part of the process at the same time.

AI models can annotate an entire batch of source images in a short time, a process known as batch auto-annotation:

Batch Image OCR Annotation | Unitlab Annotate

Other approaches to automating annotation exist, depending on the platform, and annotators can apply different models depending on the task. Human annotators then review the auto-labeled images and correct them where needed. This hybrid approach, combining auto-annotation with human review, often yields the best results.
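
A simple way to implement the hybrid approach is to route model predictions by confidence: high-confidence labels are accepted automatically, and the rest go to a human review queue. The sketch below assumes each prediction is a dictionary with a `score` field; the field names and the 0.9 threshold are hypothetical.

```python
def route_predictions(predictions, auto_accept=0.9):
    """Split predictions into auto-accepted labels and a human review queue."""
    accepted, review = [], []
    for pred in predictions:
        if pred["score"] >= auto_accept:
            accepted.append(pred)
        else:
            review.append(pred)
    return accepted, review


# Hypothetical model output for three images.
predictions = [
    {"image": "img_001.jpg", "label": "tire", "score": 0.97},
    {"image": "img_002.jpg", "label": "tire", "score": 0.62},
    {"image": "img_003.jpg", "label": "ring", "score": 0.91},
]
accepted, review = route_predictions(predictions)
print(f"{len(accepted)} auto-accepted, {len(review)} sent to human review")
```

Lowering the threshold saves reviewer time at the cost of letting more model errors through, so it is worth spot-checking a sample of the auto-accepted labels as well.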

9. Bringing Our Own Models

Beyond the models a platform provides, there are many open-source models you can use to auto-annotate your images. Alternatively, you may have built a custom AI model to annotate your source images because your data is sensitive or your use case is specialized.

If so, it is essential that the platform allows you to bring your own models and integrate them seamlessly. This way, you get the best of both worlds: a visual data annotation platform to streamline your process and your own bespoke AI/ML models for your use cases. As usual, confirm this with each prospective platform.

10. Project & Dataset Management

Managing multiple projects and datasets on a large-scale annotation project is challenging. Project management refers to the mechanisms for running your project efficiently: clear user roles (Manager, Reviewer, Annotator), detailed project and member statistics, and quality assurance mechanisms.

This ensures that your project stays consistent and on track.

Dataset management, by comparison, is the process of releasing datasets for the next phase of AI/ML building, keeping different versions of your datasets (version control), and building dataset repositories.

Efficient dataset management is essential for two reasons: your AI/ML models continually need new training data, and you may want to check the precision of your models against different image sets. Both call for version control over your datasets, similar to git in software engineering.
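
To illustrate the idea behind dataset versioning, the sketch below derives a short fingerprint from an annotation set, so that any change to the labels yields a new version identifier. This is a generic content-hashing sketch, not how any particular platform implements version control; the `dataset_version` helper and the annotation layout are assumptions.

```python
import hashlib
import json


def dataset_version(annotations):
    """Return a short, deterministic fingerprint of an annotation set."""
    # sort_keys makes the result independent of dictionary key order;
    # the order of items inside lists still matters.
    canonical = json.dumps(annotations, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


# Hypothetical annotations: v2 differs from v1 by one corrected box width.
v1 = {"img_001.jpg": [{"label": "tire", "bbox": [100, 300, 80, 80]}]}
v2 = {"img_001.jpg": [{"label": "tire", "bbox": [100, 300, 82, 80]}]}

print("v1:", dataset_version(v1))
print("v2:", dataset_version(v2))
```

Recording such an identifier alongside each trained model makes it possible to tell later exactly which labels the model was trained on.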

11. Pricing Plans

Depending on your budget for the image annotation phase, this factor can be the most or the least important one. If you’re working with a fixed budget, narrow your options to platforms within your range. Remember, however, that price often correlates with features, so a lower price usually means trading some of them away.

Alternatively, you can first weigh the 10 factors above and only then compare the pricing plans of the few platforms that remain on your shortlist. This approach may lead you to the ideal vendor, but your operating budget may end up higher than expected.

With that said, advancements in computer vision and increased competition among data annotation platforms have made pricing plans more accessible in recent years.

Conclusion

Every computer vision AI/ML project needs a robust data annotation platform to streamline the image labeling process. The selection of a particular data annotation platform depends on many factors with differing weights.

Because every project has its own characteristics, we cannot provide a formula with fixed weights for making the choice. Instead, we can offer 11 questions to guide you toward an informed, calculated decision:

- What image annotation type does your project require?

- How secure is the potential vendor?

- Is the customer support adequate?

- Do you need on-premises solutions? If yes, does the vendor provide them?

- Will you outsource the labeling workforce?

- Can you assure high-quality datasets within this vendor platform?

- How easy is the image labeling platform to use?

- Can you automate processes?

- Can you bring your own models if necessary?

- Are project & dataset management adequate?

- Is the pricing plan within your budget?
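
If you want to compare shortlisted vendors side by side, one option is a weighted scorecard where you set the weights yourself. The sketch below is purely illustrative: the factor names, weights, scores, and platform names are all placeholders, not recommendations or real ratings.

```python
def weighted_score(scores, weights):
    """Weighted average of per-factor scores, using your own weights."""
    total_weight = sum(weights.values())
    return sum(scores[factor] * w for factor, w in weights.items()) / total_weight


# Placeholder weights (importance to your project) and scores (1 to 5).
weights = {"annotation_type": 5, "security": 4, "automation": 3, "pricing": 2}
candidates = {
    "Platform A": {"annotation_type": 4, "security": 5, "automation": 3, "pricing": 2},
    "Platform B": {"annotation_type": 3, "security": 3, "automation": 5, "pricing": 5},
}

ranking = sorted(candidates,
                 key=lambda name: weighted_score(candidates[name], weights),
                 reverse=True)
for name in ranking:
    print(f"{name}: {weighted_score(candidates[name], weights):.2f}")
```

The ranking is only as good as the weights and scores you enter, so treat it as a way to structure the discussion, not as a verdict.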

This analysis might seem overly detailed or cautious. However, selecting the right vendor upfront can spare you the significant costs and bottlenecks of switching platforms later.

Taking the time to thoroughly research and compare your options ensures clarity and confidence in your final choice, setting the foundation for a successful project.

That said, it’s crucial to test the prospective image labeling platform before committing to it for your AI/ML project. Take advantage of free plans if they are offered, request demos, or schedule calls with the vendor to address any specific questions you may have.

This hands-on approach ensures the platform meets your needs and expectations.